> I cancelled my original order; it continued to shift the date. I ordered on Monday from Mouser (EU), it shipped out on Monday, and it arrived today. I will install it tonight in my second NUC13.

That unreliability is concerning. Glad Mouser worked out! (They are the only place from which getting RPis is simple.)

BTW, I did a bit more testing, but NUC8 TB3 <-> NUC13 TB4 is not really super reliable, so I will give up on that and stick to NUC13 only.

Intel Nuc 13 Pro Thunderbolt Ring Network Ceph Cluster

- Thread starter l-koeln


> but NUC8 TB3 <-> NUC13 TB4 is not really super reliable

I wonder if whatever is at the root of this is also the reason your USB passthrough was unreliable?

One more finding.


I find the following scenarios super reliable:

- pulling one of the three TB cables

- Rebooting any node

- hard failure of any node (pull the power cord)

However, for this one scenario things are not so pretty:

- shut down a node (gracefully)

In this scenario the only fix is to pull the TB cable on the affected port.

This probably isn't a big deal in a non-replicated storage environment, but it can be problematic for Ceph.

Luckily this doesn't seem to affect hard power pulls, which are the unattended scenarios I worry about.

(r/thunderbolt post - shot in the dark at getting help)


No, not related. I tested both USB passthrough and "usbip". With my Z-Wave I had too many drops/retries with passthrough and zero issues with "usbip". That is why I moved fully to "usbip" (it is also more flexible).

Using two NUC13s, everything was working great (~22 Gbps). After a recent reboot, interface en05 is completely missing on both hosts, and I have no idea why. I have stripped out all of my Thunderbolt configuration, rebooted, reapplied the configs, and still nothing.


Try unplugging your cables and plugging them back in; there is a bug in the Linux kernel.

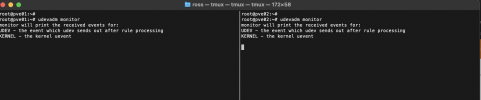

(Do this with the power on. You can also monitor with `udevadm monitor`, and check `dmesg | grep thunderbolt` to see if you have errors.)


I get no activity when running `udevadm monitor` and unplugging and plugging back in. However, I do get:

root@pve02:/sys/bus/thunderbolt/devices# dmesg | grep -i thunderbolt

[ 2.858908] ACPI: bus type thunderbolt registered

which I think means the kernel recognizes the presence of a Thunderbolt bus type

You are using TB cables and not generic USB-C cables, right?

Also make sure you are FIRMLY inserting them (not too hard, but make sure they click in; I had a few cases where one hadn't clicked in).

Also, if you only have one thunderbolt entry in dmesg, you have issues.

With a successful `udevadm monitor` run you will see the following when you plug the cable in AND there is something powered on at the other end of the cable. Also, you modified your modprobe file on all machines, right?


Code:

KERNEL[79853.365220] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1 (thunderbolt)

UDEV [79853.373667] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1 (thunderbolt)

KERNEL[79853.376883] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1/nvm_active0 (nvmem)

KERNEL[79853.376888] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1/nvm_non_active0 (nvmem)

UDEV [79853.377514] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1/nvm_active0 (nvmem)

UDEV [79853.378188] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/usb4_port1/0-0:1.1/nvm_non_active0 (nvmem)

KERNEL[79857.693309] change /0-1 (thunderbolt)

UDEV [79857.698335] change /0-1 (thunderbolt)

KERNEL[79858.706479] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1 (thunderbolt)

KERNEL[79858.706494] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0 (thunderbolt)

KERNEL[79858.706500] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/thunderbolt0 (net)

KERNEL[79858.706504] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/thunderbolt0/queues/rx-0 (queues)

KERNEL[79858.706507] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/thunderbolt0/queues/tx-0 (queues)

KERNEL[79858.706513] bind /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0 (thunderbolt)

UDEV [79858.707162] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1 (thunderbolt)

UDEV [79858.707402] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0 (thunderbolt)

KERNEL[79858.708145] move /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/en05 (net)

UDEV [79858.739306] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/en05 (net)

UDEV [79858.739725] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/thunderbolt0/queues/rx-0 (queues)

UDEV [79858.740615] add /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/thunderbolt0/queues/tx-0 (queues)

UDEV [79858.740953] bind /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0 (thunderbolt)

UDEV [79858.984890] move /devices/pci0000:00/0000:00:0d.2/domain0/0-0/0-1/0-1.0/net/en05 (net)
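If you save the `udevadm monitor` output to a file while re-plugging the cable (for example `udevadm monitor | tee udev.log`), a short Python sketch can check it for the events that mark a working link in the capture above: the kernel adding the `thunderbolt0` net device, and a later event for the renamed interface. `check_udev_log` is a hypothetical helper, and `en05` is just the name used in this thread, not a kernel default.

```python
# Sketch: scan saved `udevadm monitor` output for the events seen on a
# working thunderbolt-net link. check_udev_log() is a hypothetical helper;
# "en05" is the interface name used in this thread, not a kernel default.


def check_udev_log(text: str, ifname: str = "en05") -> dict:
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    return {
        # the kernel created the raw thunderbolt-net interface
        "thunderbolt0_added": any(
            " add " in line and line.endswith("/net/thunderbolt0 (net)")
            for line in lines
        ),
        # an event for the renamed interface showed up
        "renamed": any(line.endswith(f"/net/{ifname} (net)") for line in lines),
        # count of thunderbolt-subsystem events (0 means nothing happened)
        "thunderbolt_events": sum(line.endswith("(thunderbolt)") for line in lines),
    }
```

Run it as `check_udev_log(open("udev.log").read())` on each node; `thunderbolt_events` staying at 0 matches the "no activity" symptom.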


This is what dmesg looks like on a successful boot with no retimer issues:

Code:

[ 5.272775] ACPI: bus type thunderbolt registered

[ 7.210494] thunderbolt 0-0:1.1: new retimer found, vendor=0x8087 device=0x15ee

[ 20.601994] thunderbolt 0-1: new host found, vendor=0x8086 device=0x1

[ 20.601998] thunderbolt 0-1: Intel Corp. pve3

[ 20.608645] thunderbolt-net 0-1.0 en05: renamed from thunderbolt0

If you don't see the later lines, you can't expect any of your config scripts or anything else to work.

Only the rename is done by the systemd .link file you created.

Make sure you check both nodes. Also, you will only get one line item on a new node if the old node has the channel bonding issue, so run the `udevadm monitor` and/or `dmesg` commands on BOTH nodes after plugging in the cables.
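A short Python sketch can summarise saved `dmesg` output against the successful-boot example above: it counts the thunderbolt lines (a lone `bus type thunderbolt registered` line is the problem case) and looks for the retimer, host-found and rename messages. `check_dmesg` is a hypothetical helper, and the `en05` default reflects this thread's link file.

```python
# Sketch: summarise `dmesg` output against a known-good Thunderbolt boot.
# check_dmesg() is a hypothetical helper; "en05" is the name this thread's
# link file assigns, so pass your own interface name if it differs.


def check_dmesg(text: str, ifname: str = "en05") -> dict:
    tb = [line for line in text.splitlines() if "thunderbolt" in line.lower()]
    return {
        # only one line (just the bus registration) signals trouble
        "thunderbolt_lines": len(tb),
        "retimer_found": any("new retimer found" in line for line in tb),
        "host_found": any("new host found" in line for line in tb),
        "renamed": any(f"{ifname}: renamed from thunderbolt0" in line for line in tb),
    }
```

Save the output on BOTH nodes (for example `dmesg > dmesg.txt`) and pass each file's contents to `check_dmesg`.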


I am using this Thunderbolt 4 cable. I have checked the physical connection and ran `udevadm monitor` on both nodes: nothing. I am at a complete loss, since this happened at exactly the same time on both hosts. The only thing I can think of is that I installed Tailscale on both nodes prior to the last reboot. The TB4 connection was working after the Tailscale install, just not after the next reboot. I have no idea if that's actually related or not. I am tempted to pull node2 out of the cluster, reinstall, and see if I have the same issues.

EDIT: I forgot to mention that I have not modified any modprobe file.


Well, that's an issue...

Take another look at step 2 on this list: https://gist.github.com/scyto/76e94832927a89d977ea989da157e9dc
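Assuming the step meant here is the one in that gist that loads the `thunderbolt` and `thunderbolt-net` modules at boot via `/etc/modules` (this is my reading; check the gist itself), a quick Python sketch can report which of the two are missing from that file. `missing_modules` and the `REQUIRED` tuple are hypothetical.

```python
# Sketch: report which required modules are absent from /etc/modules-style
# text (one module name per line; lines starting with '#' or ';' are comments).
# REQUIRED reflects an ASSUMPTION about what the gist's step adds.

REQUIRED = ("thunderbolt", "thunderbolt-net")


def missing_modules(modules_text: str, required=REQUIRED) -> list:
    listed = set()
    for line in modules_text.splitlines():
        entry = line.strip()
        if not entry or entry[0] in "#;":
            continue
        # modprobe treats '-' and '_' in module names as equivalent
        listed.add(entry.split()[0].replace("_", "-"))
    return [m for m in required if m.replace("_", "-") not in listed]
```

Run `missing_modules(open("/etc/modules").read())` on every node; an empty list means both entries are present.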

> am using this Thunderbolt 4 cable.

Me too. Seriously, make sure it really is plugged in. I had an issue like yours until I realized I needed to be just a little firmer; it should feel like a positive click.

To capture it here too, the post-Linux 6.5.2 fixes I got from the Thunderbolt maintainer fixed:

1. IPv6 TCP not working

2. my boot-time Thunderbolt negotiation issues that would cause thunderbolt-net to fail (this patch may also fix many other classes of issues)

https://forum.proxmox.com/threads/t...ed-request-link-state-change-aborting.133104/


Hi everyone,

thank you @scyto for your work to bring this to where it is now!

Inspired by your github writeup I bought three NUC13 and successfully built my new 3 node homelab ceph cluster.

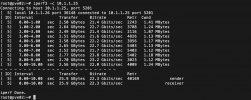

I have one connection (pve01 outgoing) which only reaches ~14Gbit, pve01 incomming and all the other connections are reaching ~21Gbit ... any thoughts on this?

best regards


So I found the difference ....


For several passthrough tests I had set

Code:

intel_iommu=on

For some reason, this lowers the thunderbolt-net throughput.

I reverted this and now have a stable ~21 Gbit/s over the Thunderbolt network in all directions with every node.
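To confirm whether a flag like `intel_iommu=on` is actually active on a node after reverting it, you can read the running kernel's command line from `/proc/cmdline` (it only reflects boot-config changes after a reboot). A minimal Python sketch; `intel_iommu_values` is a hypothetical helper name.

```python
# Sketch: list every intel_iommu= value on a kernel command line string,
# e.g. the contents of /proc/cmdline. An empty list means the flag is absent.


def intel_iommu_values(cmdline: str) -> list:
    prefix = "intel_iommu="
    return [tok[len(prefix):] for tok in cmdline.split() if tok.startswith(prefix)]
```

For example: `with open("/proc/cmdline") as f: print(intel_iommu_values(f.read()))`.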

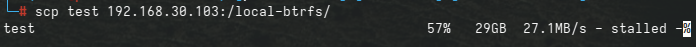

How's your performance when you scp a >50 GB file over the Thunderbolt link?

Mine starts at around 750 MB/s, drops after around 7-10 GB, and then constantly stalls.

Edit:

Also found this myself... it's the crappy OS SSD (Transcend MTS430S).

Tested again with a Samsung OEM datacenter SSD (PM893) and successfully copied 50 GB at 500 MB/s.
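A back-of-the-envelope check of why the SSD, not the link, is the limit here: converting the ~21 Gbit/s reported earlier in the thread to MB/s (decimal units, ignoring TCP/IP and SSH encryption overhead) gives far more than the 500-750 MB/s seen with scp. The helper names are illustrative.

```python
# Sketch: rough link-capacity vs. copy-time arithmetic (decimal units,
# protocol and SSH overhead ignored).


def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Link rate in Gbit/s -> MB/s (1 MB = 10**6 bytes)."""
    return gbit_per_s * 1000 / 8


def transfer_seconds(size_gb: float, rate_mb_per_s: float) -> float:
    """Time to move size_gb gigabytes at a steady rate_mb_per_s."""
    return size_gb * 1000 / rate_mb_per_s


print(gbit_to_mb_per_s(21))       # 2625.0 MB/s of raw link capacity
print(transfer_seconds(50, 500))  # 100.0 s for the 50 GB copy at 500 MB/s
```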



Deleted member 205422

Guest

I don't mean to start anything here, but I have this on a NUC11, which is TB3 only, and I also get ~18-19 Gbps.

Shouldn't this be more like 32Gbps for PCIe throughput on TB4? Does anyone know why it is not?
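For reference, the 32 Gbps figure is the PCIe bandwidth TB4 requires, which corresponds to a PCIe 3.0 x4 link. Assuming 8 GT/s per lane and 128b/130b line encoding, and ignoring PCIe packet overhead, the raw data rate works out as below; whether that figure bounds host-to-host thunderbolt-net traffic at all is the open question being asked here.

$$
4 \times 8\,\text{GT/s} \times \frac{128}{130} \approx 31.5\,\text{Gbit/s}
$$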
