Hello!

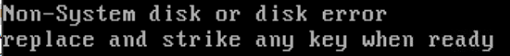

I'm trying to install Proxmox VE 8 on my HP ProLiant DL380e Gen8 (14 LFF) with an HP Smart Array P420 RAID controller, but the installer doesn't recognize the RAID controller.

I'm getting the error "No hard disk found!" Can you help me? Any idea of what I might be doing wrong?

Thank you,

Regards.
