Hi all,

I'm using a Proxmox Backup Server instance as the backup target for a PVE cluster.

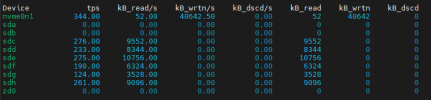

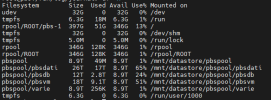

Although the PBS datastore sits on a RAID5 array of 4x16 TB HDDs and the 256 GB NVMe drive holds only the OS, the NVMe is seeing a huge amount of traffic: 645 TB in less than a year.
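For scale, here is a quick conversion of that cumulative figure into an average sustained rate. It is a rough sketch that assumes the 645 TB is mostly writes, decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes) and a full 365-day period. Because the real period is shorter than a year, the true average is somewhat higher than this estimate.

```python
# Convert cumulative traffic on the NVMe drive into an average sustained rate.
# Assumptions: decimal units (1 TB = 10**12 B, 1 MB = 10**6 B) and a full
# 365-day period; the actual period is "less than 1 year", so the real
# average rate is somewhat higher than the value computed here.

SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s


def avg_rate_mb_s(total_tb: float, seconds: float) -> float:
    """Average rate in MB/s for `total_tb` terabytes spread over `seconds`."""
    return total_tb * 10**12 / seconds / 10**6


if __name__ == "__main__":
    rate = avg_rate_mb_s(645, SECONDS_PER_YEAR)
    print(f"645 TB in 365 days ~ {rate:.1f} MB/s")  # 645 TB in 365 days ~ 20.5 MB/s
```

In other words, the drive is being written at roughly 20 MB/s around the clock, not just during backup windows.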

Running `iostat 1` shows a constant real-time write rate of about 20 MB/s, coming from the `proxmox-backup-proxy` and `systemd-journald` processes.
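`iostat` reports throughput per device rather than per process, so it can help to confirm the attribution directly. Below is a minimal sketch, assuming a Linux host and root access: it samples the `write_bytes` counter in `/proc/<pid>/io` for the two processes named above, waits, samples again and prints the difference as MB/s. The `WATCHED` list and the 10-second interval are illustrative choices, not anything PBS defines. Existing tools such as `pidstat -d` (from sysstat) or `iotop` give comparable per-process numbers without a script.

```python
# Sketch: attribute disk writes to specific processes by sampling
# /proc/<pid>/io twice and diffing write_bytes (bytes the process caused
# to be sent to the storage layer). Linux only; reading other processes'
# io files generally requires root.
# Assumption: WATCHED holds the process names mentioned in the post.
# /proc/<pid>/comm is truncated to 15 characters, so names are truncated
# before comparing.
import os
import time

WATCHED = ["proxmox-backup-proxy", "systemd-journald"]
COMM_LEN = 15  # visible length of /proc/<pid>/comm (TASK_COMM_LEN - 1)


def parse_io(text: str) -> dict:
    """Parse the 'key: value' lines of a /proc/<pid>/io file into ints."""
    fields = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if value.strip():
            fields[key.strip()] = int(value)
    return fields


def snapshot(names):
    """Return {pid: (comm, write_bytes)} for processes whose comm matches."""
    wanted = {n[:COMM_LEN] for n in names}
    result = {}
    for pid in filter(str.isdigit, os.listdir("/proc")):
        try:
            with open(f"/proc/{pid}/comm") as f:
                comm = f.read().strip()
            if comm not in wanted:
                continue
            with open(f"/proc/{pid}/io") as f:
                result[int(pid)] = (comm, parse_io(f.read())["write_bytes"])
        except (OSError, KeyError, ValueError):
            continue  # process exited, or we lack permission to read it
    return result


def write_rates(before, after, seconds):
    """MB/s written per pid between two snapshots (pids present in both)."""
    return {
        pid: (after[pid][0], (after[pid][1] - before[pid][1]) / seconds / 1e6)
        for pid in before.keys() & after.keys()
    }


if __name__ == "__main__":
    interval = 10.0  # seconds between the two samples
    first = snapshot(WATCHED)
    time.sleep(interval)
    second = snapshot(WATCHED)
    for pid, (comm, rate) in sorted(write_rates(first, second, interval).items()):
        print(f"{pid:>8} {comm:<16} {rate:8.2f} MB/s")
```

Diffing two samples rather than reading the counters once matters here: `write_bytes` is cumulative since the process started, so a single reading mixes old and current activity.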

The Proxmox Backup Server version is 2.4.6.

Has anyone had a similar experience, or can anyone help troubleshoot this? For now we're buying another NVMe drive, but if we don't solve the problem, the new one will also wear out within a year.

Thanks in advance.
