Hi.

I have a backup VM (Veeam) with several disks, one of which is located on an NFS share.

That disk was about 20 TB and resides on a NAS. To be able to grow the disk, I first expanded the pool on the NAS, from about 20 TB to 41 TB.

When I went to expand the qcow2 disk in PVE, the operation failed with a timeout. The disk was nevertheless resized, judging by the qcow2 size shown in the VM config in the GUI.

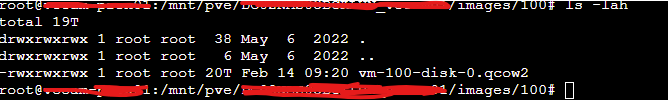

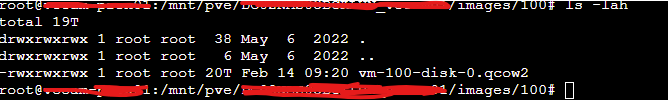

However, when I look in the folder on the NAS (and in the /mnt/pve folder), the qcow2 file is still only the original size.

So the GUI shows a larger size than `ls -lah` does in the /mnt folder. The VM happily lets me extend the partition on this qcow2 disk well beyond 20 TB.
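
For reference, a qcow2 image stores its virtual (guest-visible) size in its own header, separately from how large the file currently is on the storage; `qemu-img info` reports these as "virtual size" and "disk size". The sketch below reads the header directly, assuming the standard qcow2 layout (big-endian, virtual size as a 64-bit value at byte offset 24), and puts it next to the file size from `os.stat`. The script name and path are only illustrative assumptions; on an NFS storage in PVE the image would typically live somewhere under /mnt/pve/<storage>/images/100/.

```python
import os
import struct
import sys

QCOW2_MAGIC = b"QFI\xfb"  # first four bytes of every qcow2 image


def qcow2_sizes(path):
    """Return (virtual_size, file_size, allocated_size) in bytes for a qcow2 image."""
    with open(path, "rb") as f:
        header = f.read(32)
    if len(header) < 32 or header[:4] != QCOW2_MAGIC:
        raise ValueError(f"{path} does not look like a qcow2 image")
    # Header layout (big-endian): magic (4), version (u32), backing_file_offset (u64),
    # backing_file_size (u32), cluster_bits (u32), size (u64) at offset 24.
    (virtual_size,) = struct.unpack(">Q", header[24:32])
    st = os.stat(path)
    file_size = st.st_size              # apparent size, as shown by `ls -l`
    allocated = st.st_blocks * 512      # blocks actually allocated, roughly what `du` shows (Unix only)
    return virtual_size, file_size, allocated


if __name__ == "__main__" and len(sys.argv) > 1:
    virtual, apparent, allocated = qcow2_sizes(sys.argv[1])
    tib = 1024**4
    print(f"virtual size (qcow2 header): {virtual / tib:.2f} TiB")
    print(f"file size (ls -l):           {apparent / tib:.2f} TiB")
    print(f"allocated on storage (du):   {allocated / tib:.2f} TiB")
```

Run it as, for example, `python3 qcow2_sizes.py /mnt/pve/<storage>/images/100/vm-100-disk-0.qcow2` (hypothetical script name). If the header already reports the new size while the file itself is smaller, that is the same discrepancy seen between the GUI and `ls -lah`.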

I suspect this might become a problem once usage inside the VM reaches 20 TB. Is that correct?

Is there any way I can rectify this, or should I not worry?

I've restarted the VM and detached and reattached the disk. PVE still reports the larger size, while the qcow2 file on the NAS still has the smaller size.

VM definition (the problem disk is Trouble_disk):

```
agent: 1
balloon: 0
boot: order=virtio0;ide0
cores: 12
cpu: host
hotplug: disk,network,usb
ide0: none,media=cdrom
machine: pc-i440fx-9.0
memory: 81920
name: VEEAM01
numa: 0
onboot: 1
ostype: win10
scsihw: virtio-scsi-pci
smbios1: uuid=******************************
sockets: 1
startup: order=1
tags: internal
virtio0: *************_LUN01:vm-100-disk-1,size=170G
virtio1: ***********:vm-100-disk-0,backup=0,size=14T
virtio2: Trouble_disk:100/vm-100-disk-0.qcow2,backup=0,size=34000G
virtio3: ***************_LUN01:vm-100-disk-0,backup=0,size=40000G
vmgenid: ***************************
```
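
To compare the `size=` value recorded for virtio2 in this config with the virtual size stored in the qcow2 header, the value first has to be converted to bytes. The helper below is a minimal sketch; it assumes the size suffixes in the PVE config are binary units (K = 1024 bytes, G = 1024³, T = 1024⁴) and that the size is a whole number, as in the lines above. The function name is hypothetical.

```python
import re

# Assumption: PVE disk size suffixes are powers of 1024.
_UNITS = {"": 1, "K": 1024, "M": 1024**2, "G": 1024**3, "T": 1024**4}


def disk_size_bytes(config_line):
    """Extract the size= option from a PVE disk line and return it in bytes.

    Example input: 'virtio2: Trouble_disk:100/vm-100-disk-0.qcow2,backup=0,size=34000G'
    """
    # size= must be a complete comma-separated option (start of options or after a comma).
    match = re.search(r"(?:^|,)size=(\d+)([KMGT]?)(?:,|$)", config_line)
    if match is None:
        raise ValueError("no size= option found in config line")
    number, unit = match.groups()
    return int(number) * _UNITS[unit]
```

If the number returned for the virtio2 line matches the virtual size in the qcow2 header, the config and the image agree with each other, and the remaining difference is only in how much of the file has been written on the NAS so far.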
