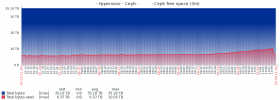

We upgraded several clusters to PVE7 + Ceph Pacific 16.2.5 a couple of weeks back. We received no reports of performance or stability problems, but did observe storage utilisation increasing consistently. After we upgraded the Ceph Pacific packages to 16.2.6 on Thursday, long-running snaptrim operations started, apparently cleaning up data that had been leaked whilst running 16.2.5.

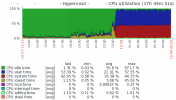

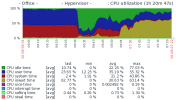

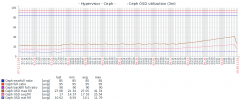

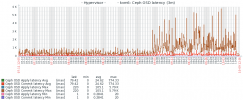

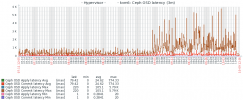

We take incremental backups by comparing against a rolling snapshot of the source RBD images, so I presume the prior release had a problem with how deleted snapshots are cleaned up.

We did, however, stumble on one of the storage nodes, where a failed RAM module had shrunk the available RAM to 12 GiB. By default each Ceph OSD process targets 4 GiB of memory; with 6 OSDs this exhausts the available memory and the OOM killer steps in, resulting in OSDs occasionally flapping.
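The arithmetic behind that shortfall can be sketched as follows. This is only an illustration: the helper names `osd_total_gib` and `gib_to_bytes` are made up here, and the 1 GiB figure corresponds to the `osd_memory_target` value quoted further down in this post. Bear in mind that, as far as I understand it, the setting is a target the OSD tries to stay near rather than a hard cap.

```shell
#!/usr/bin/env bash
# Rough per-node memory budget for OSD processes (illustrative only).

GIB=$((1024 * 1024 * 1024))   # bytes in one GiB

# Total memory (in GiB) that N OSDs aim for at a given per-OSD target.
osd_total_gib() {
    local osd_count=$1 per_osd_gib=$2
    echo $((osd_count * per_osd_gib))
}

# Convert whole GiB to bytes, the unit osd_memory_target is written in.
gib_to_bytes() {
    echo $(($1 * GIB))
}

node_ram_gib=12   # RAM left on the node after the failed module
osd_count=6

echo "default target: $(osd_total_gib "$osd_count" 4) GiB wanted, ${node_ram_gib} GiB available"
echo "1 GiB target:   $(osd_total_gib "$osd_count" 1) GiB wanted, osd_memory_target=$(gib_to_bytes 1)"
```

With the default target the six OSDs aim for twice the RAM the node has left, which is why the OOM killer gets involved.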

We had subsequently limited the memory available to each OSD with the following Ceph config option. This had worked well on Nautilus, Octopus and Pacific 16.2.5. The structure is as follows:

```ini
# /etc/ceph/ceph.conf
[osd.XX]
# 1 GiB, expressed in bytes
osd_memory_target = 1073741824
```

After upgrading we started observing alerts about certain OSDs being laggy and write requests taking 5-14 seconds to complete. OSD processes were running at 100% CPU utilisation, as visible in the following graph:

My Google-Fu led me down a rabbit hole of disabling the kernel buffer cache for BlueFS I/O requests. This immediately dropped the CPU utilisation but introduced high I/O wait times.

Disabling BlueFS caching:

```shell
# OSD holds the numeric ID of an OSD running on this host.
ceph daemon osd.$OSD config set bluefs_buffered_io false
# Confirm the running value.
ceph daemon osd.$OSD config show | grep bluefs_buffered_io
```

Reviewing 'iotop -o' showed 700+ MB/s of reads in total, with each of the 6 OSDs consistently reading at 110+ MB/s. We then realised that many snaptrims were active, resulting in unusual OSD activity as the maps were updated. The underlying problem, however, was that the OSD memory limit left the process unable to hold the object map in memory (a map now perhaps also larger than normal, due to 16.2.5 not cleaning up deleted snapshot objects), so it was reading sections of the compressed maps directly from the OSD's storage. When system memory is available, some of it is used by the kernel buffer cache, which can then serve these requests; that results in high CPU utilisation as the OSD constantly decompresses the data. Turning off caching simply moved the bottleneck to the physical discs, as the OSD was still constantly reading the maps from storage.
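One way to spot active snaptrims sooner is to count the placement groups whose state includes `snaptrim` or `snaptrim_wait`. The sketch below is not what we ran at the time. It assumes the output of `ceph pg dump pgs_brief` carries the PG state in the second whitespace-separated column, which is worth checking on your release. The function name `count_snaptrim_pgs` is made up for illustration.

```shell
#!/usr/bin/env bash
# Count PGs whose state mentions snaptrim (matches snaptrim and snaptrim_wait).
# Reads `ceph pg dump pgs_brief`-style lines on stdin; the state is ASSUMED
# to be the second whitespace-separated column.
count_snaptrim_pgs() {
    awk '$2 ~ /snaptrim/ { n++ } END { print n + 0 }'
}

# Usage on a live cluster (not executed by this sketch):
#   ceph pg dump pgs_brief 2>/dev/null | count_snaptrim_pgs
```

A non-zero count alongside heavy OSD reads would have pointed us at snaptrim activity before we started changing cache settings.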

The correct remediation for us was to turn caching back on (performance may be better with it off on NVMe and SSD drives) and then increase the memory allocation for the OSD processes to 2 GiB each. This allows each OSD process to fit the object map into memory, removing the need for it to constantly read from storage.
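As a rough sketch of that remediation, the helper below prints the runtime commands for a list of OSD IDs without executing them, so they can be reviewed first. It follows the same `ceph daemon osd.N config set` form used above. The function name `remediation_cmds` and the example OSD IDs are hypothetical. Changes made through the admin socket only affect the running daemon, so the `osd_memory_target` value in /etc/ceph/ceph.conf needs updating as well for the change to survive a restart. Whether each option takes effect without a restart is worth confirming on your release.

```shell
#!/usr/bin/env bash
# Print (do not execute) the runtime commands for the remediation on one host.
# Arguments: memory target in whole GiB, then the numeric OSD IDs on this host.
remediation_cmds() {
    local gib=$1; shift
    local bytes=$((gib * 1024 * 1024 * 1024))
    local osd
    for osd in "$@"; do
        echo "ceph daemon osd.${osd} config set bluefs_buffered_io true"
        echo "ceph daemon osd.${osd} config set osd_memory_target ${bytes}"
    done
}

# Example: review the commands for three hypothetical OSD IDs.
remediation_cmds 2 0 1 2
```

Piping the output to `sh` would run the commands; keeping the function print-only makes it harder to apply them to the wrong OSDs by accident.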

After a while on 16.2.5, latency on 16.2.6 appears not only to be back at Octopus levels but to better them. The latency graph below is from another cluster (no OSD memory limits), which ran Ceph Octopus from 07-11 until 09-04, was upgraded to Ceph Pacific 16.2.5 on 09-05 and to Ceph Pacific 16.2.6 on 10-08.
