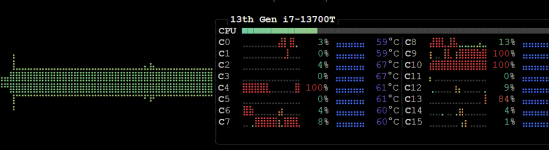

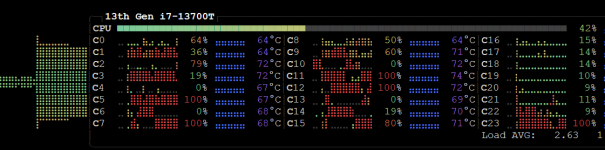

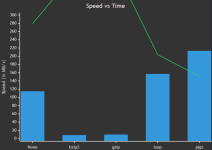

Local dd "effective speed of ~1.1 Gb/s": is that Gbit (dd wouldn't report Gbit) or GByte? That's the speed of one HDD or one slow NVMe drive, which seems low for an ESXi server with a couple of VMs.

It's gigabit. I did the math myself, since dd just outputs the total number of blocks and the total time. The disk in question was 50 GiB and the dd process took 7 minutes and 53 seconds, which gives an effective speed of around 0.85 Gb/s, if my (and Wolfram Alpha's) math is right.
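A quick way to double-check that arithmetic is to let awk do it. This is a minimal sketch: the helper name `throughput` is hypothetical, and the inputs are the 50 GiB disk size and 7 min 53 s = 473 s from above. It prints both unit conventions because "Gb" is ambiguous. The ~0.85 figure matches binary gigabits (Gibit/s). In decimal gigabits the same run comes out at about 0.91 Gbit/s, or roughly 108 MiB/s.

```shell
#!/bin/sh
# Hypothetical helper: throughput SIZE_GIB SECONDS
# Prints the rate in MiB/s, decimal Gbit/s (10^9 bit/s) and binary Gibit/s (2^30 bit/s).
throughput() {
    awk -v gib="$1" -v s="$2" 'BEGIN {
        bytes = gib * 1024 * 1024 * 1024
        printf "%.1f MiB/s, %.2f Gbit/s, %.2f Gibit/s\n",
            bytes / s / 1048576, bytes * 8 / s / 1e9, bytes * 8 / s / 1073741824
    }'
}

# 50 GiB disk, local dd took 7 min 53 s
throughput 50 $((7 * 60 + 53))
```

For the numbers above this prints `108.2 MiB/s, 0.91 Gbit/s, 0.85 Gibit/s`.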

What happens if you dd zeroes or random data over to /dev/null on the PVE host? See if it maxes out the NIC. Then we can at least rule out the transfer method and narrow it down to storage.

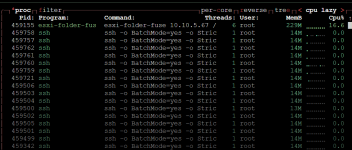

I'm currently thinking about how netcat could be implemented. There are a few issues that may or may not be a problem. Right now the import process creates a "local" filesystem with FUSE, and it seems to read the data sequentially off the FUSE mount to do the import. However, if we were to use something like netcat and dd, we could only retrieve data sequentially...

I'm trying to think how it could be streamed and injected at the same time, instead of using local storage to hold it temporarily, which would be impossible to deal with on larger VMs. There's also no good way to resume an import, etc...

Would it be smart to use the FUSE filesystem as is to let Proxmox know what's available for import, and then, as it requests chunks, just feed it the data from the dd pipe?

Edit:

Something like this?

- Detect when Proxmox calls qemu-img convert

- Instead of reading from FUSE mount, spawn netcat transfer

- Feed the netcat stream directly to qemu-img convert stdin


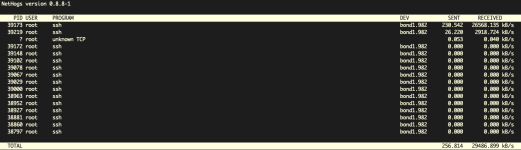

I dd'd 10 GiB of zeroes over and got a basically sustained rate of ~250 MiB/s.

```
[root@my-esx:~] dd if=/dev/zero bs=16M count=640 | nc 192.168.0.150 8000
640+0 records in
640+0 records out
```

```
root@pve:~# nc -l -p 8000 -s 192.168.0.150 | pv | dd bs=16M of=/dev/null
10.0GiB 0:00:38 [ 264MiB/s] [ <=> ]
0+1353822 records in
0+1353822 records out
10737418240 bytes (11 GB, 10 GiB) copied, 38.6578 s, 278 MB/s
```

I think that is additional evidence that I may be bottlenecked on my storage. I'm reading the VMDKs from a SAN attached over Fibre Channel, so I don't think I'm maxing out per-disk throughput, but I could be wrong on that front.
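Putting the two measurements side by side makes the comparison concrete. This is a minimal awk sketch. The helper name `compare_rates` is hypothetical. The inputs are copied from the outputs above: 10737418240 bytes in 38.6578 s through nc, and the local dd of the 50 GiB disk (53687091200 bytes) in 473 s.

```shell
#!/bin/sh
# Hypothetical helper: compare_rates NET_BYTES NET_SECS LOCAL_BYTES LOCAL_SECS
# Reports both rates in decimal Gbit/s (10^9 bit/s) and how many times faster the network path was.
compare_rates() {
    awk -v nb="$1" -v ns="$2" -v lb="$3" -v lsec="$4" 'BEGIN {
        net = nb * 8 / ns / 1e9      # zeroes piped through nc to /dev/null on PVE
        loc = lb * 8 / lsec / 1e9    # local dd of the disk on the ESXi side
        printf "network: %.2f Gbit/s, local dd: %.2f Gbit/s, ratio: %.1fx\n", net, loc, net / loc
    }'
}

# 10 GiB over nc in 38.6578 s vs. 50 GiB read locally in 7 min 53 s (473 s)
compare_rates 10737418240 38.6578 53687091200 473
```

This prints `network: 2.22 Gbit/s, local dd: 0.91 Gbit/s, ratio: 2.4x`. The network path carried roughly 2.4 times what the local read reached, which fits the suspicion that storage, not the transfer method, is the limit.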

In terms of using nc as part of the importer process, I was thinking of doing something similar by hand if I can't get this working. Basically, just instantiate the VM configs and then pull the disks over manually, one way or another.
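The by-hand route could reuse the same dd | nc pipeline as the zero test, but with a real disk as the source and a Proxmox volume as the target. This is a minimal sketch under several assumptions:

- The disk is a flat VMDK extent. On VMFS, the `*-flat.vmdk` file holds the raw sectors as long as the VM has no snapshots.
- The VM is powered off.
- The target volume already exists and is at least as large as the source, for example allocated with `pvesm alloc`.
- `iflag=fullblock` and `conv=fsync` are GNU dd options, so they only appear on the PVE side.

The function names `send_disk` and `recv_disk`, the `DRY_RUN` switch and all paths are hypothetical.

```shell
#!/bin/sh
# Hypothetical helpers for pulling one disk over by hand.
# Source this file on both hosts: run recv_disk on PVE first, then send_disk on ESXi.
# Set DRY_RUN=1 to print the pipeline instead of running it.

# ESXi side: stream the raw extent (*-flat.vmdk) to the PVE host.
# send_disk SRC_FILE DEST_IP PORT
send_disk() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf 'dd if=%s bs=16M | nc %s %s\n' "$1" "$2" "$3"
        return 0
    fi
    dd if="$1" bs=16M | nc "$2" "$3"
}

# PVE side: listen and write the stream straight onto the target volume.
# iflag=fullblock makes GNU dd assemble full 16M blocks from the pipe
# (the zero test only got partial reads: 0+1353822 records);
# conv=fsync flushes the written data before dd exits.
# recv_disk TARGET_DEV LISTEN_IP PORT
recv_disk() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        printf 'nc -l -p %s -s %s | dd of=%s bs=16M iflag=fullblock conv=fsync\n' "$3" "$2" "$1"
        return 0
    fi
    nc -l -p "$3" -s "$2" | dd of="$1" bs=16M iflag=fullblock conv=fsync
}
```

For example, run recv_disk /dev/pve/vm-100-disk-0 192.168.0.150 8000 on the PVE host, then send_disk /vmfs/volumes/datastore1/vm1/vm1-flat.vmdk 192.168.0.150 8000 on the ESXi host. These paths are illustrative only.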

The more I think about it, the more I'm convinced that, long term, the best solution would be to be able to use the ESXi importer tool without having to define the ESXi storage in Proxmox. For example, I could mount an NFS share (or something similar) that has my VMs stored on it, attach it to Proxmox, and then point the Import option at the .VMX instead of the .VMDK. As far as I can tell, that is only an option when you're looking at the ESXi storage type: when I copied the VM over to local storage and defined it as an Import storage, it only showed me the VMDKs, not the VMX files. If Proxmox supported creating VMs from .VMX files on any type of storage tagged as holding the Import type, it would give more flexibility in terms of the backhaul protocol, without needing a whole bunch of trickery to get the data out of ESXi directly.
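One reason the .VMX is the more useful thing to point an importer at: it is a plain-text file of key = "value" lines. Among other settings, it names each virtual disk, so the VMDKs can be found from it without browsing the datastore. Here is a minimal sketch that lists those disk entries. The function name `vmx_disks` is hypothetical. It assumes the common controller prefixes (scsi, sata, ide, nvme) and the usual `fileName` key casing. It reports only entries ending in .vmdk, so CD-ROM images are skipped.

```shell
#!/bin/sh
# Hypothetical helper: list the virtual disks referenced by a .vmx file.
# Assumes the usual VMX syntax, e.g.  scsi0:0.fileName = "vm1.vmdk"
# Prints "<device> <file>" per disk; non-.vmdk entries (such as ISOs) are skipped.
vmx_disks() {
    sed -nE 's/^[[:space:]]*((scsi|sata|ide|nvme)[0-9]+:[0-9]+)\.fileName[[:space:]]*=[[:space:]]*"([^"]*\.vmdk)".*$/\1 \3/p' "$1"
}
```

Usage would be along the lines of vmx_disks /vmfs/volumes/datastore1/vm1/vm1.vmx (an illustrative path).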

That being said, I do think that, for now, the idea of using nc as the data backend and just having the FUSE mount expose the available VMs is a smart approach.