I had the same problem today, but manually creating the monitor with Ceph's built-in commands did not help.

That procedure is described here:

https://docs.ceph.com/en/latest/rados/operations/add-or-rm-mons/#adding-a-monitor-manual

but in my case the result was the same as when creating the monitor with the Proxmox tools.

After several hours of work I want to share my solution. I hope it saves others some time ;-)

The short version: the solution was to inject a monmap into the stale monitor:

https://docs.ceph.com/en/latest/rad...ing-mon/#recovering-a-monitor-s-broken-monmap

The long version:

1.) Remove all of the stale monitor's components and data manually:

Running pveceph mon destroy ceph-mon@myhost fails with the error described above, "no such monitor id", so everything has to be removed by hand:

Code:

# stop and disable the service, then reload systemd

service ceph-mon@myhost stop

systemctl disable ceph-mon@myhost

systemctl daemon-reload

# delete the datadir of the monitor

rm -r /var/lib/ceph/mon/ceph-myhost

# adjust /etc/ceph/ceph.conf manually

# remove the IP of the stale monitor from mon_host

mon_host = xx.0.99.83 xx.0.99.82 xx.0.99.84

=> mon_host = xx.0.99.83 xx.0.99.82

# delete the section

[mon.myhost]

public_addr = xx.0.99.84
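If you prefer not to edit the file by hand, the two ceph.conf changes above can be sketched with awk. drop_stale_mon is a hypothetical helper, not a Proxmox or Ceph tool. It assumes mon_host is a plain space-separated list of IPs as in my config (not the bracketed [v2:...,v1:...] form) and it only prints the result, so you can diff it before copying it over. On Proxmox, /etc/ceph/ceph.conf is a symlink to /etc/pve/ceph.conf, so the edit applies cluster-wide.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox or Ceph): print a copy of a
# ceph.conf-style file with one monitor removed.
#   $1 = config file, $2 = monitor name, $3 = monitor IP
# Assumes mon_host is a space-separated list of plain IPs.
drop_stale_mon() {
    awk -v name="$2" -v ip="$3" '
        # Every section header decides whether we are inside [mon.<name>]
        /^[ \t]*\[/ {
            skip = ($0 ~ ("^[ \t]*\\[mon\\." name "][ \t]*$"))
        }
        # Drop every line of the stale monitor section, header included
        skip { next }
        # Rewrite mon_host without the stale IP, keeping the other entries
        /^[ \t]*mon_host[ \t]*=/ {
            eq = index($0, "=")
            n = split(substr($0, eq + 1), addrs, " ")
            out = ""
            for (i = 1; i <= n; i++)
                if (addrs[i] != ip)
                    out = (out == "" ? addrs[i] : out " " addrs[i])
            print substr($0, 1, eq) " " out
            next
        }
        { print }
    ' "$1"
}
```

Usage: drop_stale_mon /etc/ceph/ceph.conf myhost xx.0.99.84 > /tmp/ceph.conf.new, then compare with diff before replacing the original.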

2.)

After that I was able to add the monitor again, via the GUI or the command line:

Code:

# on the missing monitor host

pveceph createmon

But the monitor could not join the cluster, no matter how often I tried. Its log, /var/log/ceph/ceph-mon.myhost.log, showed entries like these:

Code:

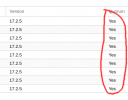

2021-05-11 16:37:47.465 7fdf7c47d700 -1 mon.myhost@-1(probing) e13 get_health_metrics reporting 11 slow ops, oldest is log(1 entries from seq 1 at 2021-05-11 16:33:35.201786)

2021-05-11 16:37:50.321 7fdf7e481700 1 mon.myhost@-1(probing) e13 handle_auth_request failed to assign global_id

2021-05-11 16:37:50.373 7fdf7e481700 1 mon.myhost@-1(probing) e13 handle_auth_request failed to assign global_id
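While retrying, it helps to count these symptoms instead of scrolling through the log. mon_log_symptoms is a hypothetical helper; its patterns are copied from the log lines above, and it assumes the usual mon.<name>@<rank>(<state>) prefix. As far as I understand it, the @-1 there means the monitor has no rank, i.e. it does not find itself in the monmap it holds, which fits with the monmap injection in step 3 being what fixed it.

```shell
#!/bin/sh
# Hypothetical helper: summarise the symptoms seen in a ceph-mon log.
#   $1 = log file, e.g. /var/log/ceph/ceph-mon.myhost.log
mon_log_symptoms() {
    # grep -c prints 0 (and exits 1) when nothing matches; that is fine here
    printf 'global_id failures: %s\n' \
        "$(grep -c 'handle_auth_request failed to assign global_id' "$1")"
    printf 'slow ops reports: %s\n' \
        "$(grep -c 'get_health_metrics reporting .* slow ops' "$1")"
    # Rank and state from the last "mon.<name>@<rank>(<state>)" prefix
    printf 'last rank/state: %s\n' \
        "$(sed -n 's/.*mon\.[^@ ]*@\(-\{0,1\}[0-9]*\)(\([a-z_]*\)).*/\1 \2/p' "$1" | tail -n 1)"
}
```

For the three lines above this prints 2 global_id failures, 1 slow ops report and "-1 probing" as the last rank/state.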

A useful trick is to query the stale monitor's admin socket and get its status directly from the monitor itself; this helps a lot in understanding what is going on:

Code:

# get the admin socket path

ceph-conf --name mon.myhost --show-config-value admin_socket

# possible commands of the monitor

ceph --admin-daemon /var/run/ceph/ceph-mon.myhost.asok help

# query monitor status

ceph --admin-daemon /var/run/ceph/ceph-mon.myhost.asok mon_status

The mon_status output is explained here:

https://docs.ceph.com/en/latest/rados/troubleshooting/troubleshooting-mon/#understanding-mon-status
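When checking the monitor again and again, the interesting fields of mon_status are its name, rank and state. A rough sketch that pulls them out with grep and sed, for hosts without jq installed: mon_status_summary is a hypothetical helper, and it is not a real JSON parser. It relies on the top-level "name", "rank" and "state" keys coming before the nested monmap (whose entries also have "name" and "rank"), as in the documented mon_status layout.

```shell
#!/bin/sh
# Hypothetical helper: print "<name> rank=<rank> state=<state>" from
# mon_status JSON on stdin. Takes the FIRST "name", "rank" and "state"
# keys, which in mon_status belong to the queried monitor itself.
mon_status_summary() {
    json=$(cat)
    name=$(printf '%s\n' "$json" | grep -o '"name": *"[^"]*"' | head -n 1 |
        sed 's/.*"\([^"]*\)"$/\1/')
    rank=$(printf '%s\n' "$json" | grep -o '"rank": *-\{0,1\}[0-9]*' | head -n 1 |
        sed 's/.*: *//')
    state=$(printf '%s\n' "$json" | grep -o '"state": *"[^"]*"' | head -n 1 |
        sed 's/.*"\([^"]*\)"$/\1/')
    echo "$name rank=$rank state=$state"
}
```

Usage: ceph --admin-daemon /var/run/ceph/ceph-mon.myhost.asok mon_status | mon_status_summary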

3.)

The only thing that brought the monitor back into the cluster was to "inject a monmap into the monitor" as described here:

https://docs.ceph.com/en/latest/rad...ing-mon/#recovering-a-monitor-s-broken-monmap

Note that a manual permission fix was needed afterwards!

Code:

# log in to a working monitor host, stop its monitor and extract the monmap

service ceph-mon@myworkinghost stop

ceph-mon -i myworkinghost --extract-monmap /tmp/monmap

2021-05-11 16:44:04.119 7f6087bd6400 -1 wrote monmap to /tmp/monmap

# start the service again and copy the map to the stale host

service ceph-mon@myworkinghost start

scp /tmp/monmap mystalehost:/tmp/monmap

# on the stale monitor host, stop the monitor and inject the map

service ceph-mon@mystalehost stop

ceph-mon -i mystalehost --inject-monmap /tmp/monmap

# after that, ownership must be fixed manually for the user "ceph" (there were "permission denied" errors, because after the injection some files belonged to root)

chown ceph:ceph /var/lib/ceph/mon/ceph-mystalehost/store.db/*

# and start again

service ceph-mon@mystalehost start
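For next time, step 3 can be sketched as two shell functions, one per host. This is only a wrapper around the commands above, not a Proxmox or Ceph tool: the function names and the DRY_RUN switch are made up, the mon IDs are assumed to equal the host names (as on Proxmox), and chown -R on the whole monitor directory is a broader variant of the store.db chown above. With DRY_RUN=1 (the default) it only prints what it would run.

```shell
#!/bin/sh
# Sketch of step 3 as two functions (hypothetical names, not a real tool).
# DRY_RUN=1 (default) only prints the commands; DRY_RUN=0 executes them.
MAP=/tmp/monmap

run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@" || { echo "failed: $*" >&2; exit 1; }
    fi
}

# On a healthy monitor host. $1 = its mon ID, $2 = stale monitor host
extract_and_copy_monmap() {
    run systemctl stop "ceph-mon@$1"
    run ceph-mon -i "$1" --extract-monmap "$MAP"
    run systemctl start "ceph-mon@$1"
    run scp "$MAP" "$2:$MAP"
}

# On the stale monitor host. $1 = its mon ID
inject_monmap_and_restart() {
    run systemctl stop "ceph-mon@$1"
    run ceph-mon -i "$1" --inject-monmap "$MAP"
    # ceph-mon ran as root above, so hand the store back to the ceph user
    run chown -R ceph:ceph "/var/lib/ceph/mon/ceph-$1"
    run systemctl start "ceph-mon@$1"
}
```

After sourcing the file, run DRY_RUN=0 extract_and_copy_monmap myworkinghost mystalehost on the working host first, then DRY_RUN=0 inject_monmap_and_restart mystalehost on the stale host. Leaving DRY_RUN unset first shows exactly which commands will run.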