Hi,

So, if I understand correctly, these are the steps to take:

# Add HP/HPE public keys

https://downloads.linux.hpe.com/SDR/hpPublicKey2048.pub

https://downloads.linux.hpe.com/SDR/hpPublicKey2048_key1.pub

https://downloads.linux.hpe.com/SDR/hpePublicKey2048_key1.pub

# Add HPE repo - apt_repository:

http://downloads.linux.hpe.com/SDR/repo/mcp {{ ansible_facts['lsb']['codename'] }}/current non-free

# Install all packages

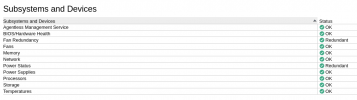

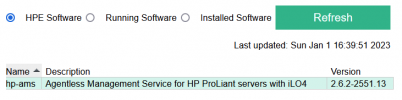

ssa ssacli ssaducli storcli amsd
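The three steps above could be sketched as an Ansible playbook roughly like this. It is only a sketch under some assumptions: the `.pub` files are ASCII-armored (so apt will accept them as `.asc` files in `trusted.gpg.d`, which needs apt 1.4 or newer), the `lsb` fact is available on the target, and the variable names `hpe_key_base_url` and `hpe_keys` are made up for illustration. The key URLs, repo line and package names are the ones listed above.

```yaml
- name: Install HPE management tools from the MCP repository (sketch)
  hosts: all
  become: true
  vars:
    # Hypothetical variable names, values taken from the steps above
    hpe_key_base_url: https://downloads.linux.hpe.com/SDR
    hpe_keys:
      - hpPublicKey2048.pub
      - hpPublicKey2048_key1.pub
      - hpePublicKey2048_key1.pub
  tasks:
    # Assumption: the keys are ASCII-armored, so they are stored with an
    # .asc extension in trusted.gpg.d instead of being fed to apt-key.
    - name: Download HPE public keys into trusted.gpg.d
      ansible.builtin.get_url:
        url: "{{ hpe_key_base_url }}/{{ item }}"
        dest: "/etc/apt/trusted.gpg.d/{{ item | splitext | first }}.asc"
        mode: "0644"
      loop: "{{ hpe_keys }}"

    # Requires the lsb fact (lsb-release installed on the target).
    - name: Add the HPE MCP repository
      ansible.builtin.apt_repository:
        repo: "deb http://downloads.linux.hpe.com/SDR/repo/mcp {{ ansible_facts['lsb']['codename'] }}/current non-free"
        filename: hpe-mcp
        state: present
        update_cache: true

    - name: Install all packages
      ansible.builtin.apt:
        name:
          - ssa
          - ssacli
          - ssaducli
          - storcli
          - amsd
        state: present
```

One possible advantage of `get_url` over `wget -q ... | apt-key add -` is that the task fails visibly if a key URL cannot be downloaded, rather than passing empty input on to gpg.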

However, the keys seem to be deprecated; importing the first one with apt-key fails:

# wget -q -O - https://downloads.linux.hpe.com/SDR/hpPublicKey2048.pub | apt-key add -

Warning: apt-key is deprecated. Manage keyring files in trusted.gpg.d instead (see apt-key(8)).

gpg: no valid OpenPGP data found.
