Step 1: Identify your disks

First, confirm the device names of your SATA and NVMe SSDs.

- Log into the Proxmox web UI (usually at https://your-ip:8006).

- Open a shell on the node (Node > Shell in the web UI) or SSH into it.

- Run the following:

```bash
lsblk
```

You'll see something like:

```text
NAME        SIZE TYPE MOUNTPOINT
sda         500G disk             # Your SATA SSD
└─sda3      500G part /
nvme0n1     1.0T disk             # Your NVMe SSD (raw)
```

Confirm which disk is which based on size and current usage.
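If size alone is ambiguous, the transport and model columns usually settle which disk is which. A minimal sketch, assuming a standard util-linux `lsblk`; the function name `list_disks` is only illustrative:

```bash
# List whole disks only (-d) with the columns that help tell them apart.
# TRAN is the transport, typically "sata" or "nvme".
list_disks() {
  lsblk -d -o NAME,SIZE,MODEL,TRAN
}
```

Calling `list_disks` on the node should show one row per physical disk, without the partition rows.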

Step 2: Format the NVMe SSD

Now you’ll prepare the NVMe SSD to be used as storage for VMs and containers.

WARNING: This will wipe everything on the NVMe drive!

- In the Proxmox Web UI, go to Datacenter > Node (your server) > Disks.

- Find your NVMe disk (nvme0n1) and select it.

- Click Wipe Disk to remove old partitions.

- Then click Initialize Disk with GPT.

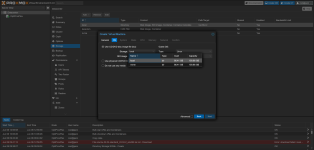
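For those who prefer the shell, the following is a rough command-line counterpart to the two buttons above. It is a sketch under assumptions, not a statement of what the web UI runs internally: it assumes `wipefs` and `sgdisk` are installed, and the helper name `wipe_and_init_gpt` and the `CONFIRM` variable are made up for this example. It only prints the commands unless `CONFIRM=yes` is set.

```bash
# DANGER: with CONFIRM=yes this destroys all data on the target disk.
# Hypothetical helper; dry run (prints commands) by default.
wipe_and_init_gpt() {
  local disk="$1"
  local run="echo"                      # dry run unless confirmed
  [ "${CONFIRM:-no}" = "yes" ] && run=""
  $run wipefs --all "$disk"             # remove filesystem and partition-table signatures
  $run sgdisk --zap-all "$disk"         # destroy leftover GPT/MBR structures
  $run sgdisk --clear "$disk"           # write a new, empty GPT
}
```

Run `wipe_and_init_gpt /dev/nvme0n1` first and read the printed commands; only repeat it with `CONFIRM=yes` once you are sure the device name is the NVMe drive from Step 1.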

Step 3: Create a storage volume on the NVMe

- Still under Disks, go to LVM or Directory, depending on your preference. Let's use Directory for simplicity.

- Click Create: Directory.

- Disk: Select your NVMe drive (e.g., /dev/nvme0n1).

- Filesystem: ext4 (recommended)

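- Name: e.g., nvme-vmstore. Current Proxmox versions ask for a name rather than a mount point and mount the filesystem at /mnt/pve/<name>; if your dialog offers a mount point field, use e.g. /mnt/nvme-vmstore.

- Click Create. If the dialog has an Add Storage checkbox, uncheck it, since Step 4 adds the storage manually.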

Now the filesystem is mounted and ready.

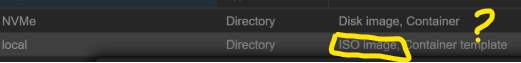
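Before adding the directory as storage, it can help to confirm that the path really is a mount point and not just an empty folder on the root disk. A small sketch, assuming util-linux `findmnt`; the helper name `check_mount` is hypothetical:

```bash
# Hypothetical helper: report whether a path is an active mount point.
check_mount() {
  local mount_path="$1"
  # --mountpoint matches only real mount points, not arbitrary directories.
  if findmnt -n -o SOURCE,FSTYPE --mountpoint "$mount_path"; then
    echo "mounted: $mount_path"
  else
    echo "NOT mounted: $mount_path" >&2
    return 1
  fi
}
```

For example, `check_mount /mnt/nvme-vmstore` (or the /mnt/pve/... path, if Proxmox chose it) should print the NVMe partition and `ext4` before the "mounted" line.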

Step 4: Add the NVMe directory as storage

- Go to Datacenter > Storage.

- Click Add > Directory.

- Fill in the form:

- ID: nvme-vmstore (or whatever you like)

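- Directory: the mount point from Step 3, e.g., /mnt/nvme-vmstore (or /mnt/pve/nvme-vmstore if Proxmox derived the mount point from a name)

- Content: select Disk image and Container; add Backup if you also want backups stored here.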

- Nodes: Leave default

- Click Add

Now you can use the NVMe SSD for VM disks and containers.
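The same storage definition can also be created from the node's shell with `pvesm`. This is a sketch that mirrors the form above; the wrapper name `add_dir_storage` is illustrative, and the content list (`images`, `rootdir`, `backup`) corresponds to Disk image, Container, and Backup:

```bash
# Hypothetical wrapper: add a directory storage and show its status.
add_dir_storage() {
  local storage_id="$1" dir_path="$2"
  # images = VM disk images, rootdir = container root filesystems
  pvesm add dir "$storage_id" --path "$dir_path" --content images,rootdir,backup
  pvesm status --storage "$storage_id"
}
```

For example: `add_dir_storage nvme-vmstore /mnt/nvme-vmstore`. If a storage with that ID already exists, `pvesm add` is expected to refuse, so this is an alternative to the web form rather than an extra step.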

Step 5: Configure SATA SSD for ISO/CT Templates

Proxmox is already installed on the SATA SSD, so you only need to adjust the content types of its existing storage.

- Still in Datacenter > Storage, find your default storage (likely local or local-lvm).

- If it's local (typically /var/lib/vz), click on it.

- Click Edit and adjust:

- Deselect Disk image, Container, and Backup

- Leave selected: ISO image, Container template, and optionally Snippets

- Click OK

This ensures only ISOs and templates are stored there.
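The content types can also be set from the shell. A minimal sketch, assuming the storage ID is `local` as in a default install; the wrapper name `restrict_to_templates` is hypothetical:

```bash
# Hypothetical wrapper: limit a storage to ISOs, container templates, and snippets.
restrict_to_templates() {
  local storage_id="${1:-local}"
  # --content replaces the whole list, so anything not named here is deselected.
  pvesm set "$storage_id" --content iso,vztmpl,snippets
}
```

Calling `restrict_to_templates` with no argument targets `local`. Existing files are not moved or deleted by this change; it only controls which content types the storage is offered for.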

Step 6: Create and Use VMs/CTs on the NVMe

Now when you create a new VM or LXC container:

- In the wizard's disk step, select nvme-vmstore in the Storage dropdown.

- That ensures VM disks are stored on your fast NVMe drive.
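From the shell, the storage is chosen with the `STORAGE:SIZE_IN_GiB` form when the disk is allocated. The sketch below makes several assumptions for illustration: the IDs, the VM name `demo-vm`, the memory and disk sizes, the default bridge `vmbr0`, and the helper names are all made up, and the container template path must be one that actually exists on your system.

```bash
# Hypothetical helper: create a VM with a 32 GiB disk on the given storage.
create_vm_on_nvme() {
  local vmid="$1" storage="${2:-nvme-vmstore}"
  qm create "$vmid" --name demo-vm --memory 2048 \
    --net0 virtio,bridge=vmbr0 --scsi0 "${storage}:32"
}

# Hypothetical helper: create a container with an 8 GiB root disk on the given storage.
create_ct_on_nvme() {
  local ctid="$1" template="$2" storage="${3:-nvme-vmstore}"
  pct create "$ctid" "$template" --rootfs "${storage}:8"
}
```

For example, `create_vm_on_nvme 100` creates VM 100 with its disk on nvme-vmstore, while the container helper takes the template volume (stored on `local` after Step 5) as its second argument.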