Hi everyone,

I'd like to shrink the disk of my Windows 10 VM.

It is currently 80 GB and I want to reduce it to 30 GB.

I have already shrunk the volume inside the Windows 10 guest using its built-in partition tool, and the volume is now only 26.68 GB.

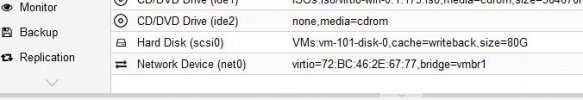

My Windows 10 VM setup:



Code:

root@pve:~# qm config 101

agent: 1

balloon: 1024

bootdisk: scsi0

cores: 2

ide1: ISOs:iso/virtio-win-0.1.173.iso,media=cdrom,size=384670K

ide2: none,media=cdrom

memory: 3072

name: Windows10

net0: virtio=72:BC:46:2E:67:77,bridge=vmbr1

numa: 0

ostype: win10

scsi0: VMs:vm-101-disk-0,cache=writeback,size=80G

scsihw: virtio-scsi-pci

smbios1: uuid=3300f60c-c594-4b17-8381-8998b9952eef

sockets: 1

vmgenid: 18059270-8e19-46c7-848d-185a4668981c

Could you please help me figure it out?

Thanks