Hi everyone,

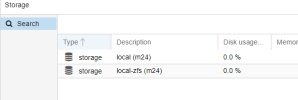

I've been using Proxmox on an OVH bare-metal server with two SSDs.

I recently did some testing in my home lab, and the most important feature for me is `proxmox-boot-tool`, which let my system boot from the second drive after I intentionally corrupted the first drive's boot sector.

It worked well, and recovering everything by following the docs was very easy.

However, the OVH Proxmox installer doesn't offer the default ZFS RAID1 (mirror) setup. Its custom installation wizard allows partition customization, but I'm not sure how that would work out. I tried running the Proxmox ISO installer over IPMI, but it's very slow, likely due to latency, and I don't want to keep the server down for an extended period.

Additionally, manually configuring the OVH-specific settings, such as networking and boot, can be problematic, so I'd like to avoid that if possible.

Has anyone managed to do a clean Proxmox install with a ZFS RAID1 (mirror) setup on OVH? If so, could you please share the steps or provide any guidance? I'm looking for a way to get the same setup on my OVH server that I have in my home lab, whether through the standard ZFS RAID1 setup or the custom partitioning option.

Thanks in advance for your help!