I have an 8-GPU server running Proxmox. The GPUs themselves work great when passed through to the VM using:

But running nvidia-smi topo -m on a VM gives this:

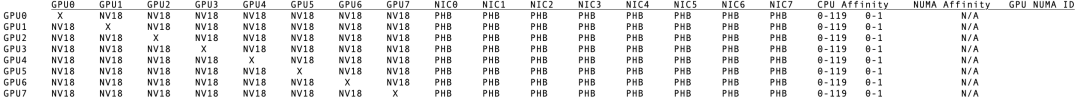

Where PHB means "Connection traversing PCIe as well as a PCIe Host Bridge (typically the CPU)", which means the data goes through the CPU, not directly using the PCI switch. Communication speed confirms this. I'd like to see "PIX = Connection traversing at most a single PCIe bridge".

It's not clear how to do this, create a VFIO group and put all the devices there? But what's next, how to tell Proxmox to pass the entire group instead of individual GPUs?

Or maybe it's too much for Proxmox and I need to use KVM directly?

How do I tell Proxmox to dump /usr/bin/kvm calls to stdout or stderr when I run "qm start"?

Kristen

Code:

qm set 315 --hostpci0 4f:00,pcie=1
qm set 315 --hostpci1 52:00,pcie=1
qm set 315 --hostpci2 56:00,pcie=1
qm set 315 --hostpci3 57:00,pcie=1
qm set 315 --hostpci4 ce:00,pcie=1
qm set 315 --hostpci5 d1:00,pcie=1
qm set 315 --hostpci6 d5:00,pcie=1
qm set 315 --hostpci7 d6:00,pcie=1

But running nvidia-smi topo -m on a VM gives this:

Code:

        GPU0  GPU1  GPU2  GPU3  GPU4  GPU5  GPU6  GPU7  mlx5_0  CPU Affinity  NUMA Affinity
GPU0    X     NV4   PHB   PHB   PHB   PHB   PHB   PHB   PHB     0-31          N/A
GPU1    NV4   X     PHB   PHB   PHB   PHB   PHB   PHB   PHB     0-31          N/A
GPU2    PHB   PHB   X     NV4   PHB   PHB   PHB   PHB   PHB     0-31          N/A
GPU3    PHB   PHB   NV4   X     PHB   PHB   PHB   PHB   PHB     0-31          N/A
GPU4    PHB   PHB   PHB   PHB   X     NV4   PHB   PHB   PHB     0-31          N/A
GPU5    PHB   PHB   PHB   PHB   NV4   X     PHB   PHB   PHB     0-31          N/A
GPU6    PHB   PHB   PHB   PHB   PHB   PHB   X     NV4   PHB     0-31          N/A
GPU7    PHB   PHB   PHB   PHB   PHB   PHB   NV4   X     PHB     0-31          N/A
mlx5_0  PHB   PHB   PHB   PHB   PHB   PHB   PHB   PHB   X

Here PHB means "Connection traversing PCIe as well as a PCIe Host Bridge (typically the CPU)", so traffic between those devices goes through the CPU rather than directly across the PCIe switch. The communication speed I measure confirms this. I'd like to see PIX ("Connection traversing at most a single PCIe bridge") instead.
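To keep track of which pairs are affected, the matrix can be boiled down with a small filter. This is only a rough sketch: `topo_pairs` is a hypothetical helper name of my own, and it assumes plain whitespace-separated text like the paste above (no colour or escape codes in the header row).

```shell
# Hypothetical helper: read `nvidia-smi topo -m` style text on stdin and
# print each device pair whose link type equals $1 (for example PHB or NV4).
# Assumes the first non-empty line is the header row and that data rows
# appear in the same order as the header columns.
topo_pairs() {
    awk -v want="$1" '
        # Header row: remember the column names.
        !hdr && NF {
            for (i = 1; i <= NF; i++) { col[i] = $i; isdev[$i] = 1 }
            hdr = 1
            next
        }
        # Data row: field j+1 holds the link to the device in column j.
        # Only columns right of the diagonal are read, so each pair prints once.
        ($1 in isdev) {
            r++
            for (j = r + 1; j + 1 <= NF; j++)
                if ($(j + 1) == want) print $1, col[j]
        }
    '
}
```

Inside the VM this would be used as `nvidia-smi topo -m | topo_pairs PHB`, and the same filter with `NV4` shows which pairs still have NVLink.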

It's not clear how to do this. Should I create a VFIO group and put all the devices in it? And if so, how do I tell Proxmox to pass through the entire group instead of individual GPUs?
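As far as I understand, VFIO works at the granularity of IOMMU groups, so a first step is to see how the host currently groups the devices. A minimal sketch, assuming the standard sysfs layout under `/sys/kernel/iommu_groups`; `list_iommu_groups` is a hypothetical helper name, and its optional argument exists only so the function can be pointed at a different directory. Whether the grouping has any bearing on the PHB result is exactly what I don't know.

```shell
# Hypothetical helper: print one line per PCI device with its IOMMU group.
# The optional first argument overrides the sysfs root (assumption: the
# default path is where the host kernel exposes the groups).
list_iommu_groups() {
    root=${1:-/sys/kernel/iommu_groups}
    for dev in "$root"/*/devices/*; do
        # Skip the unexpanded glob when no groups exist (IOMMU off or path missing).
        [ -e "$dev" ] || continue
        group=${dev%/devices/*}   # strip the trailing "/devices/<address>"
        group=${group##*/}        # keep only the group number
        echo "group $group: ${dev##*/}"
    done
}
```

Running `list_iommu_groups` on the Proxmox host prints lines such as `group <n>: <pci address>`, which can then be matched against the addresses used in the qm set commands.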

Or maybe it's too much for Proxmox and I need to use KVM directly?

How do I tell Proxmox to dump /usr/bin/kvm calls to stdout or stderr when I run "qm start"?

Kristen