I'm new to Proxmox and not sure how to investigate this fault further. Any ideas would be appreciated.

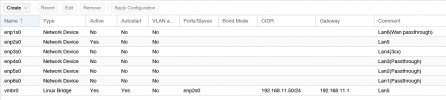

Fault

- The Proxmox hypervisor has no outbound network access: pinging the gateway (192.168.11.1) shows 100% packet loss, chrony cannot reach any time servers, and the update servers cannot be refreshed.

- LAN web access to the Proxmox hypervisor works

- VMs on Proxmox with passed-through NICs all function OK
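
One check that might narrow this down is confirming which default route the host actually uses, since the web UI is reachable from the LAN while the gateway does not answer pings. The following is only a minimal sketch, assuming bash and the iproute2 `ip` tool on the host; the helper name `default_gw` is hypothetical and not part of Proxmox.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the gateway address found in `ip route` output
# read on stdin. Assumes lines of the form "default via <gateway> dev <iface> ...".
default_gw() {
    awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Possible use on the host (left commented out so sourcing this file has no side effects):
#   gw=$(ip -4 route show default | default_gw)
#   ip neigh show "$gw"           # is there a neighbour (ARP) entry for the gateway?
#   ping -c 3 -I vmbr0 "$gw"      # send the ping out of the bridge explicitly
```

If the helper prints nothing, the host has no IPv4 default route, which would point at the network configuration rather than at the switch or pfsense.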

Recent changes

- Proxmox was a fresh install on this hardware, not long after 7.0 was released

- Enabled the pve-no-subscription repository yesterday and upgraded Debian and Proxmox to the current version -> fault started

- Noted the hosts file had an old pve IP, so updated it to the correct IP & subnet. Ensured the gateway & DNS were also current -> no change in fault

- Checked that my modifications to the Proxmox hypervisor were intact. Changes reapplied -> no change

- Restarted the Proxmox hypervisor -> no change

System hardware summary

- Proxmox runs on a single-board computer with 6 Intel NICs

- One NIC has a bridge in Proxmox and is used for the management console and for Proxmox internet access via the LAN gateway through pfsense.

- pfsense runs in a VM with 4 NICs passed through (WAN and 3 LAN)

- 3CX runs in a VM with 1 NIC passed through (externally connected to the VoIP LAN with pfsense as gateway)

Network configuration

Main network

lan: Gateway / DNS / DHCP / 192.168.11.1/24 (via pfsense)

Proxmox: 192.168.11.50/24, VLAN not used

VoIP network

3CX: 192.168.12.55/24, VLAN not used

pfsense: VLAN aware, Main on VLAN 11, VoIP on VLAN 12

Netgear managed switch used to connect devices & servers
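
As a sanity check on the addressing above, the host address and the gateway should fall in the same /24, while the 3CX address should not. A minimal sketch of that arithmetic, assuming bash; the function names `ip_to_int` and `same_subnet` are hypothetical helpers written for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
# Assumes octets are written without leading zeros.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if two addresses share a network under the
# given prefix length, e.g. same_subnet 192.168.11.50 192.168.11.1 24
same_subnet() {
    local ip1 ip2 prefix mask
    ip1=$(ip_to_int "$1")
    ip2=$(ip_to_int "$2")
    prefix=$3
    # Build the netmask: the top $prefix bits set, e.g. /24 -> 0xFFFFFF00.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip1 & mask )) -eq $(( ip2 & mask )) ]
}
```

For example, `same_subnet 192.168.11.50 192.168.11.1 24 && echo "same subnet"` compares the Proxmox address with the gateway. This only checks the arithmetic of the configured addresses, not whether the switch or pfsense VLAN tagging matches.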

Configuration settings

/etc/network/interfaces

Code:

auto lo

iface lo inet loopback

iface enp2s0 inet manual

#Lan5

iface enp1s0 inet manual

#Lan6(Wan passthrough)

iface enp3s0 inet manual

#Lan4(3cx)

iface enp4s0 inet manual

#Lan3(Passthrough)

iface enp5s0 inet manual

#Lan2(Passthrough)

iface enp6s0 inet manual

#Lan1(Passthrough)

auto vmbr0

iface vmbr0 inet static

address 192.168.11.50/24

gateway 192.168.11.1

bridge-ports enp2s0

bridge-stp off

bridge-fd 0

#Lan5

/etc/hosts

Code:

127.0.0.1 localhost.localdomain localhost

192.168.11.50 pve.home.arpa pve

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

ff02::3 ip6-allhosts

systemctl status networking.service

Code:

● networking.service - Network initialization

Loaded: loaded (/lib/systemd/system/networking.service; enabled; vendor preset: enabled)

Active: active (exited) since Sun 2021-11-21 12:13:02 ACDT; 45min ago

Docs: man:interfaces(5)

man:ifup(8)

man:ifdown(8)

Process: 1266 ExecStart=/usr/share/ifupdown2/sbin/start-networking start (code=exited, status=0/SUCCESS)

Main PID: 1266 (code=exited, status=0/SUCCESS)

CPU: 365ms

Nov 21 12:13:01 pve systemd[1]: Starting Network initialization...

Nov 21 12:13:02 pve networking[1266]: networking: Configuring network interfaces

Nov 21 12:13:02 pve systemd[1]: Finished Network initialization.

ip addr

Code:

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

3: enp2s0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master vmbr0 state UP group default qlen 1000

link/ether 00:f4:21:68:27:50 brd ff:ff:ff:ff:ff:ff

8: vmbr0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000

link/ether 00:f4:21:68:27:50 brd ff:ff:ff:ff:ff:ff

inet 192.168.11.50/24 scope global vmbr0

valid_lft forever preferred_lft forever

inet6 fe80::2f4:21ff:fe68:2750/64 scope link

valid_lft forever preferred_lft forever

Code:

root@pve:~# cat /etc/default/pveproxy

cat: /etc/default/pveproxy: No such file or directory

Code:

ss -tlpn

Code:

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 4096 0.0.0.0:111 0.0.0.0:* users:(("rpcbind",pid=1295,fd=4),("systemd",pid=1,fd=35))

LISTEN 0 4096 127.0.0.1:85 0.0.0.0:* users:(("pvedaemon worke",pid=1787,fd=6),("pvedaemon worke",pid=1786,fd=6),("pvedaemon worke",pid=1784,fd=6),("pvedaemon",pid=1783,fd=6))

LISTEN 0 128 0.0.0.0:22 0.0.0.0:* users:(("sshd",pid=1496,fd=3))

LISTEN 0 100 127.0.0.1:25 0.0.0.0:* users:(("master",pid=1729,fd=13))

LISTEN 0 4096 127.0.0.1:61000 0.0.0.0:* users:(("kvm",pid=1920,fd=15))

LISTEN 0 4096 127.0.0.1:61001 0.0.0.0:* users:(("kvm",pid=3131,fd=15))

LISTEN 0 4096 [::]:111 [::]:* users:(("rpcbind",pid=1295,fd=6),("systemd",pid=1,fd=37))

LISTEN 0 128 [::]:22 [::]:* users:(("sshd",pid=1496,fd=4))

LISTEN 0 4096 *:3128 *:* users:(("spiceproxy work",pid=1808,fd=6),("spiceproxy",pid=1807,fd=6))

LISTEN 0 100 [::1]:25 [::]:* users:(("master",pid=1729,fd=14))

LISTEN 0 4096 *:8006 *:* users:(("pveproxy worker",pid=408946,fd=6),("pveproxy worker",pid=405516,fd=6),("pveproxy worker",pid=403942,fd=6),("pveproxy",pid=1801,fd=6))
