I have an interesting one, and I'm hoping someone can point me in the right direction.

I have a cluster of three PVE hosts on vlan1, and some guests on both vlan1 and vlan2. MTU is set to 9000 across the board. Guest VMs on vlan1 can communicate "correctly" with the PVE hosts, including a ping with a manually set packet size (`ping -s 8972 -M do 192.168.0.92`). However, guests on vlan2 cannot communicate with the PVE hosts on vlan1, nor with any guests or external hosts on vlan1.
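For reference, the 8972 in that ping command is the 9000-byte MTU minus the 20-byte IPv4 header and the 8-byte ICMP echo header, so with `-M do` (don't fragment) it is the largest payload that should pass if every hop really carries 9000-byte frames. A small Python sketch of that arithmetic, assuming plain IPv4 with no IP options (the function name is just illustrative):

```python
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet.
# Assumes a minimal IPv4 header (no options) and a standard ICMP echo header.
IPV4_HEADER = 20  # bytes
ICMP_HEADER = 8   # bytes


def max_ping_payload(mtu: int) -> int:
    """Return the value to pass to `ping -s` when testing `mtu` with `-M do`."""
    return mtu - IPV4_HEADER - ICMP_HEADER


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ping -s {max_ping_payload(mtu)} -M do <host>")
```

For a standard 1500-byte MTU the same formula gives 1472, which is the usual size for checking the non-jumbo path.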

So I have whittled this down to an inter-VLAN problem. I switched from standard Linux bridges to OVS just to see if that helped; it did not.
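Since vlan2 guests (10.0.0.x) and vlan1 (192.168.0.0/24) sit in different IP subnets, any traffic between them has to leave the layer-2 segment and be routed by whatever device acts as the vlan2 gateway. A minimal check with Python's standard `ipaddress` module; it assumes vlan2 is 10.0.0.0/24 (the prefix length isn't stated in this post) and uses 10.0.0.16 as a hypothetical second vlan2 guest:

```python
import ipaddress

# vlan1 prefix comes from ovsport_host (192.168.0.92/24).
# vlan2 prefix is an ASSUMPTION: only the guest address 10.0.0.15 is known.
VLAN1 = ipaddress.ip_network("192.168.0.0/24")
VLAN2 = ipaddress.ip_network("10.0.0.0/24")


def needs_routing(src: str, dst: str) -> bool:
    """True if src and dst are in different subnets, i.e. traffic must go via a gateway."""
    s = ipaddress.ip_address(src)
    d = ipaddress.ip_address(dst)
    for net in (VLAN1, VLAN2):
        if s in net:
            return d not in net
    raise ValueError(f"{src} is not in a known subnet")


print(needs_routing("10.0.0.15", "192.168.0.92"))  # vlan2 guest -> PVE host: True
print(needs_routing("10.0.0.15", "10.0.0.16"))     # vlan2 guest -> hypothetical vlan2 guest: False
```

In other words, same-VLAN pings only exercise the switch and bridge path, while vlan2-to-vlan1 pings also depend on the routing device and its interfaces.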

I'm running a UniFi setup in the background, with Jumbo Frames enabled on all switches.

Here's my /etc/network/interfaces from one PVE host (ignore the 10.10.* interface; it is on a separate 100G corosync network between the PVE hosts):



```
auto lo
iface lo inet loopback

iface enp1s0 inet manual

auto enp65s0
iface enp65s0 inet static
        address 10.10.0.1/24
        mtu 9000
#Mellanox Eth0 corosync

iface enp65s0d1 inet manual
#Mellanox Eth1 corosync

auto enp66s0f0
iface enp66s0f0 inet manual
        ovs_type OVSPort
        ovs_bridge vmbr0
        ovs_mtu 9000
        ovs_options vlan_mode=native-untagged
#Intel X520 SFP+

iface enp66s0f1 inet manual
#Intel X520 SFP+

auto ovsport_host
iface ovsport_host inet static
        address 192.168.0.92/24
        gateway 192.168.0.1
        ovs_type OVSIntPort
        ovs_bridge vmbr0
        ovs_mtu 9000
        ovs_options tag=1

auto ovsport_vlan2
iface ovsport_vlan2 inet manual
        ovs_type OVSIntPort
        ovs_bridge vmbr0
        ovs_mtu 9000
        ovs_options tag=2

auto vmbr0
iface vmbr0 inet manual
        ovs_type OVSBridge
        ovs_ports enp66s0f0 ovsport_host ovsport_vlan2
        ovs_mtu 9000

source /etc/network/interfaces.d/*
```

Example results from a guest VM on vlan2 (10.0.0.15): first to another vlan2 guest, then to the PVE host it runs on, once with jumbo frames and once without:

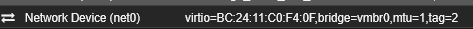

Guest VMs use virtio network interfaces, tagged with the appropriate VLAN tags.

No SDN usage here.

Any guidance on where to look next would be appreciated!
