I manage a decent-sized Proxmox private cloud for a client. It consists of two sites:

Site A (Prod):

PVE 7.4-3

250 VMs (Windows 10, 6GB RAM allocated each)

3x Dell R630 w/768GB RAM each

Site B (DR):

PVE 8.3.4 (latest as of Mar 2025)

0 VMs

3x Dell R630 w/768GB RAM each

Recently, we migrated all VMs from Site A to Site B for a DR exercise using Proxmox Backup Server, and it worked very well (aside from some slowness with PBS, but that's another thread). The issue is:

At Site A, Host #1 runs around 110 VMs and uses around 369GB of RAM. Looking at the VM status, I see that most of the VMs are using less than half of their allocated 6GB RAM, so I assume that the VirtIO balloon driver is releasing the RAM back to PVE.

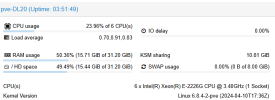

After the VMs from Site A Host 1 were copied to Site B Host 1, host memory usage at Site B roughly doubled to over 680GB, and then the swap filled up. Looking at the status of the individual VMs, I again see very low RAM usage inside the VMs themselves, but significantly increased host memory usage.
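As a rough sanity check on those numbers, here is a small Python sketch of the arithmetic. It uses only the approximate figures quoted above; the variable and function names are just for illustration, and it ignores whatever RAM the host itself needs, so treat the per-VM values as ballpark only.

```python
# Approximate figures quoted in this post (GB values are rounded).
VM_COUNT = 110              # VMs on Host 1
ALLOCATED_GB_PER_VM = 6     # RAM allocated to each Windows 10 VM
SITE_A_USED_GB = 369        # host RAM used on Site A Host 1 (PVE 7.4-3)
SITE_B_USED_GB = 680        # host RAM used on Site B Host 1 (PVE 8.3.4)


def per_vm_gb(host_used_gb: float, vm_count: int) -> float:
    """Average host RAM consumed per VM, ignoring host overhead."""
    return host_used_gb / vm_count


# What the host would need if every VM held its full allocation.
full_allocation_gb = VM_COUNT * ALLOCATED_GB_PER_VM

site_a_per_vm = per_vm_gb(SITE_A_USED_GB, VM_COUNT)
site_b_per_vm = per_vm_gb(SITE_B_USED_GB, VM_COUNT)

print(f"Full allocation for all VMs: {full_allocation_gb} GB")
print(f"Site A average per VM: {site_a_per_vm:.2f} GB")
print(f"Site B average per VM: {site_b_per_vm:.2f} GB")
```

This works out to 660GB if every VM held its full allocation, about 3.35GB per VM at Site A and about 6.18GB per VM at Site B. In other words, the Site B figure looks a lot like every VM holding its entire 6GB on the host, with nothing being given back.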

Why would the same number of VMs on the same hardware use double the RAM on PVE 8.3.4 vs. the older 7.4-3? The only thing I can think of is that I might need to update the VirtIO drivers in each VM. Does this make sense?
