High SSD wear after a few days

- Thread starter eddi1984

- Start date

@matpuk your test sounds like comparing tomatoes with potatoes.

So what have you tested?

Your PVE: contains SSDs only

Your NAS: has SSDs and HDDs

So you are not testing the same things!

It would only be comparable if both your NAS and your PVE ran on SSDs alone.

@ITT solving a problem by throwing hardware at it is one option; the better one is to fix the root cause, which is what @matpuk is trying to do.

IMHO @matpuk is taking the better engineering approach.

PVE: 64 GB RAM, 2x 128 GB SSD in a ZFS mirror (system + CT templates + ISOs only). VMs and CTs are stored on the NAS via a 10GbE network.

NAS: 32 GB RAM, 2x 128 GB SSD in a ZFS mirror (system only) + 4x HDD for data storage

I'm comparing the load on the system partitions. I tried to explain that my PVE system does not store CTs and VMs on its SSDs, and that the NAS keeps the PVE data on its HDDs as well. So both systems use a ZFS mirror on similar hardware to store system files only, and yet they end up with very different SSD usage.

In the posts above someone mentioned that ZFS adds overhead. That's OK. But a 100 GB difference in two weeks of idling?

Both systems use Debian. Both systems collect metrics like CPU usage, disk usage, temperatures and so on. Both systems provide a web UI and write system logs.

So both of them write to their system partitions, and I think they can be compared in this case.

Logic tells me that I need to start looking at the running PVE services.

The problem is how SSDs work. PVE isn't writing much data, but it does some very small writes every minute because of clustering. SSDs can't handle small writes well, as internally they need to erase and rewrite much larger blocks. So writing very little data can still cause massive wear. Copy-on-write filesystems like ZFS make this even worse, especially ZFS with its sync writes to the journal, which cheap SSDs without power-loss protection cannot buffer and optimize in RAM to reduce wear.
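To make the small-write point concrete, here is a deliberately simplified worst-case sketch. It assumes every logical write forces the drive to program whole internal units; the unit size of 16 KiB is purely illustrative, and real controllers buffer and coalesce writes, so actual amplification depends on the drive.

```python
import math

def worst_case_amplification(write_bytes: int, unit_bytes: int) -> float:
    """Ratio of bytes physically programmed to bytes logically written,
    assuming each write is rounded up to whole internal units
    (a simplified worst-case model, not a measurement of any real SSD)."""
    physical = math.ceil(write_bytes / unit_bytes) * unit_bytes
    return physical / write_bytes

# Illustrative unit size only; real page/erase-block sizes vary by drive.
UNIT = 16 * 1024

for size in (512, 4096, 16384):
    factor = worst_case_amplification(size, UNIT)
    print(f"{size:>6} B write -> {factor:.0f}x")
```

Under this model a 512-byte write costs 32 times its size, while a write that fills a whole unit costs nothing extra, which is why many tiny sync writes wear a drive far more than their total volume suggests.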

And yes, you can disable several services so PVE will write less to the SSDs.

For example, systemctl disable corosync pve-ha-crm pve-ha-lrm would be a good start if you aren't clustering.

No, this is the internal database of PVE. In a cluster it is written more often, but in a single system it also written a lot. This is one of the main wear causes in PVE.After playing with iotop, I have a candidate who is writing 24/7 to a system partition every couple of seconds - pmxcfs (https://pve.proxmox.com/wiki/Proxmox_Cluster_File_System_(pmxcfs))!

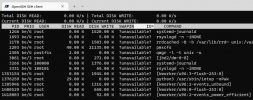

Thanks for the confirmation. I just got the same result after running iotop for 60 minutes on an idle system:

20 MB total of small writes every second by pmxcfs...
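A reading like this can be cross-checked without iotop by sampling the kernel's per-process I/O counters twice and dividing by the interval. The sketch below is an illustration, not the method used in this thread: it reads the `write_bytes` field from `/proc/<pid>/io` (Linux only, and reading another user's process needs root), and the helper names are hypothetical.

```python
import time
from pathlib import Path

def parse_proc_io(text: str) -> dict:
    """Parse the 'key: value' lines of /proc/<pid>/io into a dict of ints."""
    counters = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            counters[key.strip()] = int(value)
    return counters

def write_rate_kb_per_s(bytes_before: int, bytes_after: int, seconds: float) -> float:
    """Average write rate in kB/s between two cumulative byte counters."""
    return (bytes_after - bytes_before) / seconds / 1000

def measure(pid: int, interval_s: float = 60.0) -> float:
    """Sample write_bytes for one process twice and return its average kB/s."""
    io_file = Path(f"/proc/{pid}/io")
    before = parse_proc_io(io_file.read_text())["write_bytes"]
    time.sleep(interval_s)
    after = parse_proc_io(io_file.read_text())["write_bytes"]
    return write_rate_kb_per_s(before, after, interval_s)
```

Calling `measure(pid)` with the PID of pmxcfs (for example from `pidof pmxcfs`) gives a rate rather than a running total. The distinction matters: 20 MB accumulated over 3600 seconds is roughly 5.6 kB/s, whereas 20 MB every second would be 20,000 kB/s.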

Well, thanks everyone. I think I will go the 2x 2.5" HDD route instead of cheap SSDs. Enterprise-grade SSDs are way too costly.

I've just discovered the issue myself after two weeks of running my pilot PVE and TrueNAS (Debian based) installs.

Why is there such a HUGE difference for just the system partitions?

On TrueNAS (Scale), where is your System Dataset [1] located? And where is your Syslog [2]?

AFAIK, by default the Syslog is on the System Dataset, and that in turn is not on the boot-pool (your SSDs) if at least one data-pool (your HDDs) is present.

So if your System Dataset including the Syslog is on your HDD-pool, the conditions for your comparison are simply wrong.

[1] https://www.truenas.com/docs/scale/...cedsettingsscreen/#system-dataset-pool-widget

[2] https://www.truenas.com/docs/scale/scaletutorials/systemsettings/advanced/managesyslogsscale

[0] https://www.truenas.com/community/t...-dataset-pool-and-its-default-placement.99010

I'm at around 64 kB/s for pmxcfs on a 20-node cluster with 1000 VMs.

Are you sure that your 20 MB is not the cumulative total over the 60 minutes?

Yes, it is the cumulative value over 60 minutes. Sorry for my English.

This is going to be ~0.5 GB per day, ~15 GB per month, ~200 GB per year. Let's multiply it by a ZFS + internal SSD overhead factor of 9-12 (correct me if I'm wrong) and we get something like 2-3 TBW per year. Even for cheap 60 TBW SSDs this means that they will last at least 5-7 years (I hope, ha-ha, since one of mine died 2 days ago after 2 weeks of idling...).
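The estimate above can be reproduced with a few lines of arithmetic. This is only a sketch of the same back-of-the-envelope calculation: the 20 MB/hour rate, the overhead factor of 9-12 and the 60 TBW rating are taken from the post, the factor itself is the poster's own guess, and the function names are made up for illustration.

```python
def yearly_wear_tb(mb_per_hour: float, overhead_factor: float) -> float:
    """Estimated TB written to flash per year, given logical MB/hour and an
    assumed combined ZFS + SSD overhead factor (decimal units, 365 days)."""
    gb_per_day = mb_per_hour * 24 / 1000
    gb_per_year = gb_per_day * 365
    return gb_per_year * overhead_factor / 1000

def years_until_rated_tbw(rated_tbw: float, mb_per_hour: float,
                          overhead_factor: float) -> float:
    """Years until the drive's rated TBW is reached at a constant write rate."""
    return rated_tbw / yearly_wear_tb(mb_per_hour, overhead_factor)

for factor in (9, 12):
    wear = yearly_wear_tb(20, factor)
    years = years_until_rated_tbw(60, 20, factor)
    print(f"factor {factor}: {wear:.2f} TB/year, {years:.0f} years to 60 TBW")
```

Without the rounding used in the post, 20 MB/hour comes to about 175 GB per year and roughly 1.6-2.1 TB per year after the overhead factor, so the 5-7 year figure is a conservative lower bound if the rated TBW were the only limit.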

Thanks everyone for your valuable input. Looks like I was panicking too early.

Here, TrueNAS also killed 3 of the 4 cheap consumer system disks this year (2x Intenso High Performance, 1x Crucial BX; all three 120 GB TLC with DRAM. That Crucial wasn't even a year old). So it's best not to buy these cheap SSDs at all when using ZFS, no matter what OS you use.

Also, don't use ZFS on cheap SSDs.

Without ZFS, cheap SSDs work well.

Well, then I should definitely go with 2.5" HDDs.

You can also sometimes find good prices for enterprise SSDs. I think it's not allowed to advertise stuff here, so I won't post links, but I just bought some spare boot disks on German eBay, new and sealed from a retailer: 100 GB enterprise MLC SSDs rated for 3,737 TBW (or 20 DWPD over 5 years), 25€ incl. shipping. Spinning rust wouldn't be cheaper. There is still one left for the lucky one who finds it.

Yes, I would also recommend that. Or used Samsung enterprise SSDs; 120 GB should also be fine. I have used them for many, many years without any problems and with very low wear.