During a backup, the backup process consumes all of the virtual machine's disk I/O capacity. Setting performance: max-workers=1 in /etc/vzdump.conf makes no difference. While the backup is running, processes inside the VM that need disk access stall waiting for I/O. iostat inside the virtual machine shows %iowait rising from a normal value of about 0.1% to 50-70%.

We also tried changing the ionice and bwlimit values. Neither changes the situation.
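
For reference, the settings mentioned above go in /etc/vzdump.conf roughly as sketched below. Only max-workers=1 is the value we actually set; the ionice and bwlimit numbers here are illustrative placeholders, not the exact values we tested.

```
# /etc/vzdump.conf (sketch; ionice and bwlimit values are placeholders)

# Limit the number of parallel backup I/O workers (value from this post)
performance: max-workers=1

# I/O priority of the backup process (placeholder value; as far as we
# understand the vzdump docs, this only takes effect with the BFQ scheduler)
ionice: 7

# Backup bandwidth limit in KiB/s (placeholder value)
bwlimit: 51200
```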

Storage: the VM disk is an LVM-thin volume on top of an mdadm RAID 10 array. The array is built from 8 NVMe disks.

On the physical host itself, I/O looks fine during the backup. The problem is observed only inside the virtual machine.

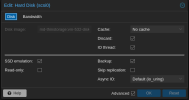

The virtual machine disk settings are shown in the attached screenshot.

We are running the latest version of pve-qemu-kvm:

ii pve-qemu-kvm 8.0.2-6

Has anyone encountered and solved a similar problem? Thank you.
