Hi,

We operate three Flatcar-based VMs, running an in-memory database, on top of three Proxmox VE hosts.

The hardware is still as described here, currently running Proxmox VE 8.3.5. Storage is two NVMe disks in a ZFS mirror, which hands out ZFS volumes to the VMs; inside each VM, a ZFS pool sits on top of its volume.

Problem:

- A few VMs on other hosts push data to these three VMs (400 Mbit/s of RX traffic)

- We see ping loss for these three VMs in our Zabbix monitoring
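For scale, here is a rough back-of-the-envelope conversion of the stated 400 Mbit/s RX load into packets per second. It assumes every packet is a full 1500-byte MTU frame, which is an assumption on my part; smaller packets would mean a proportionally higher packet rate.

```python
# Back-of-the-envelope packet rate for the RX load described above.
# Assumption (hypothetical): all packets are full 1500-byte MTU frames.

RX_BITS_PER_SEC = 400_000_000  # 400 Mbit/s, from the problem description
PACKET_BYTES = 1500            # assumed standard Ethernet MTU


def packets_per_second(bits_per_sec: float, packet_bytes: int) -> float:
    """Convert a bit rate into packets per second for a fixed packet size."""
    return bits_per_sec / 8 / packet_bytes


if __name__ == "__main__":
    mbytes = RX_BITS_PER_SEC / 8 / 1_000_000
    pps = packets_per_second(RX_BITS_PER_SEC, PACKET_BYTES)
    print(f"{mbytes:.0f} MB/s, roughly {pps:,.0f} packets/s")  # 50 MB/s, roughly 33,333 packets/s
```

If multiqueue spreads this evenly (again an assumption, since it depends on how flows hash across queues), each queue would see only a fraction of that rate.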

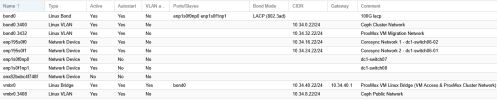

Network interface setup on the host:

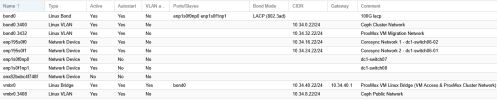

Network interface setup in the VM:


`net0` is the interface experiencing the ping loss, so we experimented with multiqueue. Since enabling multiqueue, however, we get kernel error messages like these in the VM:

```
[Fri May 2 11:13:14 2025] virtio_net virtio0 eth0: NETDEV WATCHDOG: CPU: 5: transmit queue 14 timed out 5037 ms
[Fri May 2 11:13:14 2025] virtio_net virtio0 eth0: TX timeout on queue: 14, sq: output.14, vq: 0x1d, name: output.14, 5041000 usecs ago
```

Before enabling multiqueue we saw more ping loss; with multiqueue enabled we now get these kernel errors in the VM instead.
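To narrow things down, a small hypothetical Python helper like the one below could tally the NETDEV WATCHDOG lines per interface and queue, showing whether the timeouts cluster on one queue (as with queue 14 here) or spread across several. The regex is an assumption built only from the kernel log lines quoted above; other kernel versions may word the message differently, and the function name is illustrative.

```python
import re
from collections import Counter
from typing import Iterable

# Pattern for the NETDEV WATCHDOG message format quoted in the log above.
# Assumption: other kernel versions may word this message differently.
WATCHDOG_RE = re.compile(
    r"(?P<iface>\S+): NETDEV WATCHDOG: CPU: (?P<cpu>\d+): "
    r"transmit queue (?P<queue>\d+) timed out (?P<ms>\d+) ms"
)


def count_tx_timeouts(lines: Iterable[str]) -> Counter:
    """Tally watchdog TX timeouts per (interface, queue) pair."""
    counts: Counter = Counter()
    for line in lines:
        match = WATCHDOG_RE.search(line)
        if match:
            counts[(match["iface"], int(match["queue"]))] += 1
    return counts


if __name__ == "__main__":
    # The two kernel lines from the post; in practice, feed saved dmesg output.
    sample = [
        "[Fri May 2 11:13:14 2025] virtio_net virtio0 eth0: NETDEV WATCHDOG: "
        "CPU: 5: transmit queue 14 timed out 5037 ms",
        "[Fri May 2 11:13:14 2025] virtio_net virtio0 eth0: TX timeout on queue: "
        "14, sq: output.14, vq: 0x1d, name: output.14, 5041000 usecs ago",
    ]
    for (iface, queue), n in sorted(count_tx_timeouts(sample).items()):
        print(f"{iface} queue {queue}: {n} watchdog timeout(s)")
```

To run it on real data, pass any iterable of lines, for example an open file containing saved `dmesg -T` output. Only the NETDEV WATCHDOG lines are counted, so each timeout is tallied once even though the kernel also prints a matching "TX timeout on queue" line.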

How can we get rid of these TX timeouts?

Best regards

Rainer