Hello,

We have two Dell servers running Proxmox v7.2 with replication. Both servers have 64 GB of RAM, but memory usage is high. Could you please let me know whether this is normal for replication in Proxmox? If so, why does it show such high memory usage? Thanks.

Server A

RAM usage 94.82% (59.41 GiB of 62.65 GiB)

VM 1 - 24GB RAM

VM 2 - 2GB RAM

Server B

RAM usage 94.78% (59.38 GiB of 62.65 GiB)

VM 3 - 24GB RAM

VM 4 - 2GB RAM

VM 5 - 4GB RAM

VM 6 - 4GB RAM
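
To make the gap concrete, here is a rough tally of the RAM assigned to the VMs against the usage each host reports. It is only a sketch using the figures above: it assumes the VM sizes are in GiB and that each VM uses its full allocation, and the names `hosts` and `unexplained_gib` are made up for illustration. It does not show what is using the remainder.

```python
# Figures copied from the post. VM sizes are the configured allocations,
# treated here as GiB (an assumption; the post says "GB").
hosts = {
    "Server A": {"used_gib": 59.41, "total_gib": 62.65, "vm_gib": [24, 2]},
    "Server B": {"used_gib": 59.38, "total_gib": 62.65, "vm_gib": [24, 2, 4, 4]},
}


def unexplained_gib(host):
    """Reported host usage minus the sum of VM allocations, in GiB."""
    return round(host["used_gib"] - sum(host["vm_gib"]), 2)


for name, host in hosts.items():
    allocated = sum(host["vm_gib"])
    print(f"{name}: {allocated} GiB assigned to VMs, "
          f"{host['used_gib']} GiB reported used, "
          f"{unexplained_gib(host)} GiB not accounted for by VMs")
```

On these numbers, roughly 33 GiB on Server A and 25 GiB on Server B are in use beyond what the VMs are assigned.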

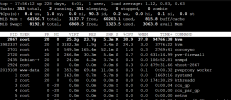

The output of top on Server A

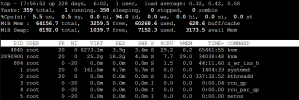

The output of top on Server B
