Greetings to all,

I am seeking assistance with a challenging issue related to Ceph that has significantly impacted the company I work for.

Our company has been operating a cluster with three nodes hosted in a data center for over 10 years. This production environment runs on Proxmox (version 6.3.2) and Ceph (version 14.2.15). From a performance perspective, our applications function adequately.

To address new business requirements, such as the need for additional resources for virtual machines (VMs) and to support the company’s growth, we deployed a new cluster in the same data center. The new cluster also consists of three nodes but is considerably more robust, featuring increased memory, processing power, and a larger Ceph storage capacity.

The goal of this new environment is to migrate VMs from the old cluster to the new one, ensuring it can handle the growing demands of our applications. This new setup operates on more recent versions of Proxmox (8.2.2) and Ceph (18.2.2), which differ significantly from the versions in the old environment.

The Problem

During the gradual migration of VMs to the new cluster, we encountered severe application performance issues that did not occur in the old environment. These problems made it impractical to keep the VMs in the new cluster.

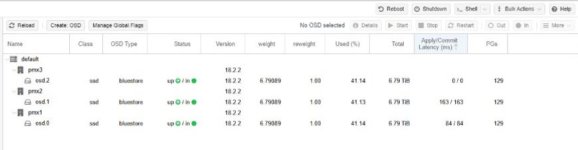

Ceph latency in the new environment turned out to be extremely high and inconsistent, as shown in the screenshot below:

<<Ceph latency screenshot - new environment>>

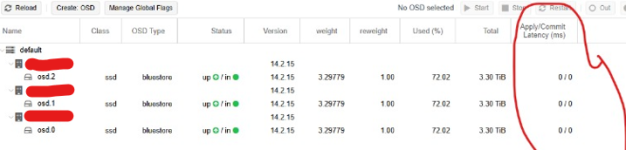

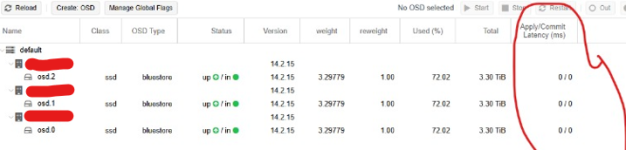

To limit the operational impact, we moved all VMs back to the old environment. This resolved the performance issues, and our applications again functioned as expected for end users. After the rollback, Ceph latency in the old cluster returned to stable, low levels:

<<Ceph latency screenshot - old environment>>

With the new cluster now available for testing, we need to determine the root cause of the high Ceph latency, which we suspect is the primary contributor to the poor application performance.

Tests Performed in the New Environment

- Deleted the Ceph OSD on Node 1. Ceph took over 28 hours to synchronize. We then recreated the OSD on Node 1.

- Deleted the Ceph OSD on Node 2. Ceph also took over 28 hours to synchronize. We then recreated the OSD on Node 2.

- Moved three VMs to the local backup disk of PM1.

- Destroyed the Ceph cluster.

- Created local storage on each server using the virtual disk (RAID 0) previously used by Ceph.

- Migrated VMs to the new environment and conducted a stress test to check for disk-related issues.
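
To put the 28-hour resync in context, here is a rough back-of-envelope sketch of how much data a single 1 Gbps link could move in that time. The 1 Gbps figure comes from the network described in this post; the 90% efficiency factor is only an assumption for protocol overhead, and the function name is made up for illustration.

```python
def max_transfer_tb(link_gbps: float, hours: float, efficiency: float = 0.9) -> float:
    """Upper bound on data (decimal TB) moved over one link in the given time.

    `efficiency` is an assumed fudge factor for protocol overhead, not a measured value.
    """
    bytes_per_second = link_gbps * 1e9 / 8 * efficiency
    return bytes_per_second * hours * 3600 / 1e12


# 1 Gbps link, 28 hours of recovery
print(f"{max_transfer_tb(1, 28):.2f} TB")
```

If the recreated OSD held roughly this much data, the recovery time is about what the link allows. If it held far less, something other than raw bandwidth was slowing recovery (Ceph also throttles recovery traffic by default, so this is only an upper bound).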

Questions and Requests for Input

- Are there any additional tests you would recommend to better understand the performance issues in the new environment?

- Have you experienced similar problems with Ceph when transitioning to a more powerful cluster?

- Could this be caused by a Ceph configuration issue?

- The Ceph storage in the new cluster is larger, but the network interface is limited to 1 Gbps. Could this be a bottleneck? Would upgrading to a 10 Gbps network interface be necessary for larger Ceph storage?

- Could these issues stem from incompatibilities or changes in the newer versions of Proxmox or Ceph?

- Is there a possibility of hardware problems? Note that hardware tests in the new environment have not revealed any issues.
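
To make the network question more concrete, here is a rough sketch of the sustained write ceiling when replication traffic shares the same link. It rests on several assumptions that are not confirmed in this post: a replicated pool with size 3, a primary OSD that forwards each write to the other two replicas, public and cluster traffic on one interface, and a 90% link efficiency. The function name is illustrative only.

```python
def write_ceiling_mb_s(link_gbps: float, pool_size: int = 3, efficiency: float = 0.9) -> float:
    """Rough ceiling on sustained writes (MB/s) through one primary OSD node.

    Assumes the primary sends (pool_size - 1) replica copies out over the
    same link, so its outbound bandwidth is split between those copies.
    """
    link_mb_s = link_gbps * 1000 / 8 * efficiency
    # With pool_size == 1 there are no replica copies; avoid dividing by zero.
    replica_copies = max(pool_size - 1, 1)
    return link_mb_s / replica_copies


for gbps in (1, 10):
    print(f"{gbps} Gbps -> ~{write_ceiling_mb_s(gbps):.1f} MB/s")
```

This ignores latency, small-block overhead, and reads, so real numbers would likely be lower. It is only meant to show the order-of-magnitude gap between 1 Gbps and 10 Gbps for replicated writes.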

Thank you in advance for your insights and suggestions.

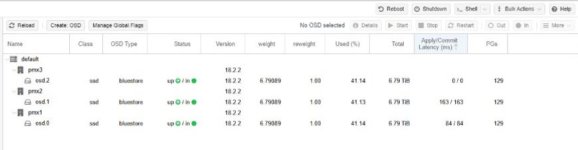

edit: The first screenshot was taken during our disk testing, which is why one of the OSDs was in the OUT state. I’ve updated the post with a more recent image.
