Hello!

I am having an issue with latency between guest VMs. Attached is my production topology, running Proxmox 5.4-3. The Proxmox node itself also handles routing, so there are 4 routers on 1 node (3 in VMs, 1 on the hypervisor).

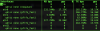

The problem is that VM B receives all routes from the client and the node interfaces are 10G, yet the latency is unstable and the throughput is only about 300 Mbps. Why is that? (bmon output attached.)

Is there any throughput limitation for VM-to-VM communication? eth0, eth1, and eth2 all use virtio NICs.

My pveversion:

proxmox-ve: 5.4-1 (running kernel: 4.15.18-12-pve)

pve-manager: 5.4-3 (running version: 5.4-3/0a6eaa62)

pve-kernel-4.15: 5.3-3

pve-kernel-4.15.18-12-pve: 4.15.18-35

corosync: 2.4.4-pve1

criu: 2.11.1-1~bpo90

glusterfs-client: 3.8.8-1

ksm-control-daemon: 1.2-2

libjs-extjs: 6.0.1-2

libpve-access-control: 5.1-8

libpve-apiclient-perl: 2.0-5

libpve-common-perl: 5.0-50

libpve-guest-common-perl: 2.0-20

libpve-http-server-perl: 2.0-13

libpve-storage-perl: 5.0-41

libqb0: 1.0.3-1~bpo9

lvm2: 2.02.168-pve6

lxc-pve: 3.1.0-3

lxcfs: 3.0.3-pve1

novnc-pve: 1.0.0-3

proxmox-widget-toolkit: 1.0-25

pve-cluster: 5.0-36

pve-container: 2.0-37

pve-docs: 5.4-2

pve-edk2-firmware: 1.20190312-1

pve-firewall: 3.0-19

pve-firmware: 2.0-6

pve-ha-manager: 2.0-9

pve-i18n: 1.1-4

pve-libspice-server1: 0.14.1-2

pve-qemu-kvm: 2.12.1-3

pve-xtermjs: 3.12.0-1

qemu-server: 5.0-50

smartmontools: 6.5+svn4324-1

spiceterm: 3.0-5

vncterm: 1.5-3

zfsutils-linux: 0.7.13-pve1~bpo2
