We have 4 HP NVMe drives with the following specs:

Manufacturer: Hewlett Packard

Type: NVMe SSD

Part Number: LO0400KEFJQ

Best Use: Mixed-Use

4KB Random Read: 130000 IOPS

4KB Random Write: 39500 IOPS

Server used for Proxmox: HPE ProLiant DL380 Gen10. All the NVMe drives are connected directly to the motherboard's storage controller.

Proxmox VE is installed on 2 x 480GB SSDs in RAID-1 mode. We have a guest VM running Ubuntu 22.04 LTS with 40GB RAM and 14 vCPUs.

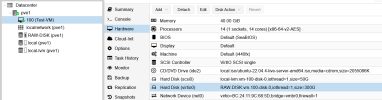

The storage configuration for the VM is:

SCSI Controller: VirtIO SCSI Single

Bus/Device: VirtIO Block

IO Thread: Checked

Async IO: io_uring

Cache: No cache

We used the following commands for benchmarking:

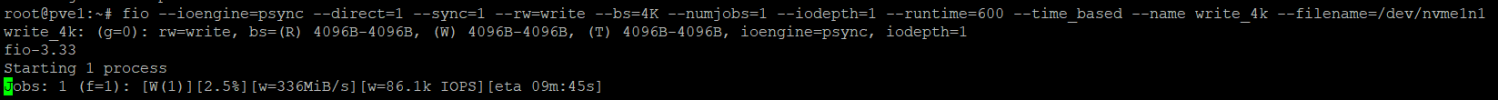

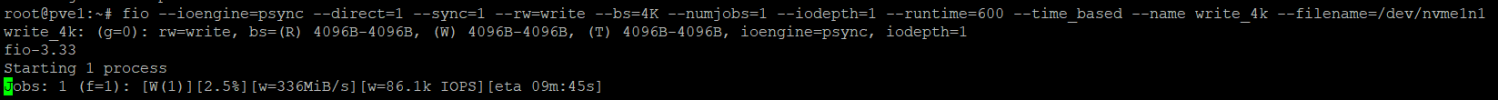

On the Proxmox host:

fio --ioengine=psync --direct=1 --sync=1 --rw=write --bs=4K --numjobs=1 --iodepth=1 --runtime=600 --time_based --name write_4k --filename=/dev/nvme1n1

Write IOPS: 86K

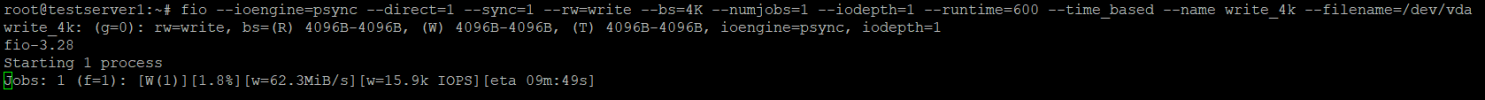

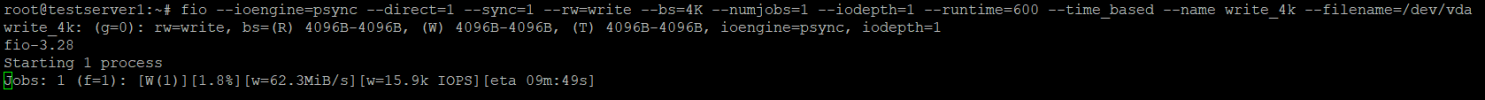

In the guest VM:

fio --ioengine=psync --direct=1 --sync=1 --rw=write --bs=4K --numjobs=1 --iodepth=1 --runtime=600 --time_based --name write_4k --filename=/dev/sdb

Write IOPS: 16K

Note: The VM's disk is on one of the NVMe drives, which is configured as LVM storage.
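To put the two results in perspective: both runs use `--iodepth=1` and `--numjobs=1` with the synchronous `psync` engine, so only one write is in flight at a time and the IOPS figure is roughly the inverse of the average time per write. The short Python sketch below does that conversion for the two numbers above. The function name `per_io_latency_us` is only an illustrative helper, and the result is an estimate from the rounded IOPS values, not a measured latency.

```python
def per_io_latency_us(iops: float) -> float:
    """Average time per I/O in microseconds, assuming exactly one I/O in flight."""
    return 1_000_000 / iops


# Rounded results reported by fio above (queue depth 1, single job).
host_us = per_io_latency_us(86_000)   # Proxmox host, /dev/nvme1n1
guest_us = per_io_latency_us(16_000)  # guest VM, /dev/sdb

print(f"host:  {host_us:.1f} us per write")
print(f"guest: {guest_us:.1f} us per write")
print(f"extra time per write inside the VM: {guest_us - host_us:.1f} us")
```

In other words, the gap between 86K and 16K IOPS corresponds to roughly 50 microseconds of additional time per 4K write when the I/O goes through the guest.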

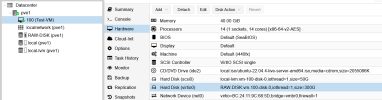

VM Configuration:

What am I missing here that is causing such a large IOPS difference?
