For about 2 weeks now I've been having trouble with my server. Every time I upload data or use a VM, the IO delay goes up to 30-80 % and needs a few minutes to go back down. In the meantime, I reinstalled Proxmox for a few reasons. Sadly, that changed nothing, so I think it could be a hardware problem.
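
To put a number on the IO delay outside the web UI, something like the following could be used. It is only a minimal sketch, assuming the IO delay shown by Proxmox roughly corresponds to the kernel's iowait share in `/proc/stat`; the function names and the 5 second sampling window are made up for illustration.

```python
import time


def parse_cpu_line(line):
    """Return (iowait, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in fields[1:]]
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice.
    # guest and guest_nice are already counted in user/nice, so sum only the first 8.
    return values[4], sum(values[:8])


def iowait_percent(before, after):
    """Share of CPU time spent in iowait between two samples, in percent."""
    io0, total0 = parse_cpu_line(before)
    io1, total1 = parse_cpu_line(after)
    delta_total = total1 - total0
    if delta_total <= 0:
        return 0.0
    return 100.0 * (io1 - io0) / delta_total


def read_cpu_line(path="/proc/stat"):
    """Read the first line of /proc/stat, which is the aggregate 'cpu' line."""
    with open(path) as f:
        return f.readline()


if __name__ == "__main__":
    first = read_cpu_line()
    time.sleep(5)  # assumed sampling window; adjust as needed
    second = read_cpu_line()
    print(f"iowait over 5 s: {iowait_percent(first, second):.1f} %")
```

Running this during an upload and again while the system is idle would show whether the spike is visible at the kernel level too. Note that iowait is system-wide, so it does not say which disk is responsible.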

Currently I have just one LXC container running, for Docker.

During that peak I transferred 11.4 GB of music.

Before I did the new installation, I installed 4 new 3 TB HDDs. Only my 1 TB SSD is still in my home server.

While writing this, I uploaded data to my ZFS volume, and there were no problems: 110 GB of data and it ran flawlessly. So it's definitely my SSD. But is there a way to "repair" it?

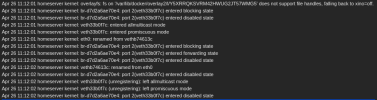

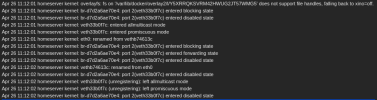

Edit: Also, this shows up every minute in my system logs:
