Hi,

A few days ago we upgraded our 5-node cluster from Proxmox 5.4 to Proxmox 6, and Ceph to Octopus (first Luminous to Nautilus, then Nautilus to Octopus).

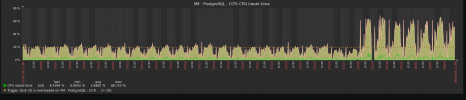

After the upgrade we noticed that all VMs started raising alerts in our Zabbix monitoring system with the reason "Disk I/O is overloaded". These alerts were triggered by high I/O wait on the VMs. The attached graph of one VM illustrates this.

The moment we completed the upgrade is clearly visible: the I/O wait spikes are 3 times higher than before. The situation is the same for all other VMs.

Any idea why? Has anyone had the same problem after the upgrade?

Many thanks

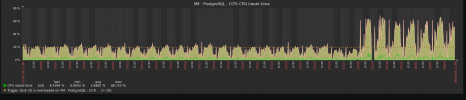
