Hey guys, I'm pretty new to Proxmox. I've installed it a few times to play around with, but I'm a complete noob, so just a heads up. I've just done a fresh install and want to get it set up to host a few VMs for myself and some friends, for game servers such as Rust, Minecraft and DayZ.

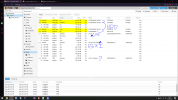

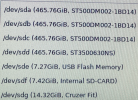

Anyway, the system I'm using is an HP ProLiant DL160 Gen9. It only has 4 drive bays, so for now I've just popped in 4x 500GB Seagate HDDs. The server has a built-in RAID controller, and since I don't want data loss if there's a problem or an HDD fails, I want to set these up in a RAID configuration using that controller (unless you can suggest a better method?). I've created 2 arrays, each containing 2 drives in RAID1: Bay 1 and Bay 4 are in one array, and Bay 2 and Bay 3 are in the other. Now I want to add these as LVM storage so I can use them for storing .iso files and also the VM hard disks. I've set this up before, but never with the drives in a RAID configuration, which is why I need help.

How would I go about adding these to my Proxmox installation while keeping the RAID functionality? The OS is installed on a 16GB USB stick so I can use all 4 bays for as much storage as possible. I've included some screenshots of how my Proxmox currently looks. These HDDs shouldn't have anything on them, so if there's anything I need to do, such as formatting them, please let me know, as I'm not sure why one is GPT and the others aren't. Also, ignore my Snipping Tool "skills" lmao