I am trying to set up VXLAN using the Proxmox SDN feature, as outlined here:

https://pve.proxmox.com/pve-docs/chapter-pvesdn.html#pvesdn_zone_plugin_simple

My requirement is basically to use a 10G interface between the two nodes for better VM-to-VM transfer rates (for a NAS replica). Maybe there are better ways?

However, in my two-node cluster I followed the directions on that page and cannot get Node1_vm to ping Node2_vm.

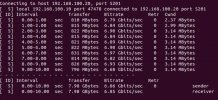

I checked the underlying physical interfaces and both show a good link at 10 Gbit/s full duplex, so I know the physical layer is fine.
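
One thing worth ruling out: a healthy 10G link does not by itself mean the VXLAN tunnel uses it. The encapsulated UDP traffic follows each host's route to the peer addresses, so if the zone's peer list contains the nodes' management IPs rather than their 10G IPs, the tunnel runs over the management network instead. The sketch below is a quick sanity check using Python's standard `ipaddress` module. The subnet and peer addresses are hypothetical placeholders, and the check assumes the 10G link is a directly connected subnet with no other routes overriding it.

```python
import ipaddress

# Hypothetical values: replace with your own 10G subnet and the
# addresses entered in the VXLAN zone's peer list.
TEN_GIG_SUBNET = ipaddress.ip_network("10.10.10.0/24")
VXLAN_PEERS = ["10.10.10.1", "10.10.10.2"]


def peers_outside_subnet(subnet, peers):
    """Return the peer addresses that do not fall inside the given subnet."""
    return [p for p in peers if ipaddress.ip_address(p) not in subnet]


if __name__ == "__main__":
    stray = peers_outside_subnet(TEN_GIG_SUBNET, VXLAN_PEERS)
    if stray:
        print("Peers not on the 10G subnet:", ", ".join(stray))
    else:
        print("All peers are on the 10G subnet")
```

Any address reported here would send tunnel traffic over a different interface, which can also explain lower-than-expected throughput even when pings succeed.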

The only slight deviation is that my test VMs run Ubuntu Desktop, so the network setup is a little different, but I'm not sure that matters. Static IPs are set, the MTU is set to 1450, and there is no gateway.
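
The 1450 value comes from VXLAN's per-packet encapsulation overhead. Over an IPv4 underlay, each guest frame gains an outer IPv4 header (20 bytes), a UDP header (8), and a VXLAN header (8), and the guest's own Ethernet header (14) is carried inside the tunnel, for 50 bytes in total. The sketch below just does that arithmetic. It assumes an IPv4 underlay (an IPv6 outer header is 40 bytes, raising the overhead to 70) and untagged guest frames. The 9000-byte case is illustrative only and assumes jumbo frames are actually enabled on both 10G NICs and on anything in between.

```python
# Per-packet VXLAN overhead for an IPv4 underlay, in bytes.
OUTER_IPV4_HEADER = 20
OUTER_UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET_HEADER = 14  # the guest's own Ethernet header rides inside the tunnel

VXLAN_OVERHEAD = OUTER_IPV4_HEADER + OUTER_UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET_HEADER


def guest_mtu(underlay_mtu: int) -> int:
    """Largest guest MTU that fits in one underlay packet without fragmentation."""
    return underlay_mtu - VXLAN_OVERHEAD


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"underlay MTU {mtu} -> guest MTU {guest_mtu(mtu)}")
```

So 1450 is correct for a standard 1500-byte underlay. For bulk transfers over a dedicated 10G link, raising the underlay MTU (and the guest MTU with it) is a common throughput tweak, but only if every hop supports it.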

First, is VXLAN the way to go? If yes, what should I look into to get this setup working?