Hi everyone,

I would like to create a Ceph cluster, which I have done before.

Whatever I do in Proxmox 9, I seem to be hitting a roadblock.

The goal is a 3-node Ceph cluster in a full-mesh topology (nodes cabled directly to each other, without a switch in between).

Hardware in each of the three 2U servers:

Supermicro X12

Xeon E-2388G

32 GB of RAM

Mellanox fiber card (one card per node with two 25 Gbps interfaces, for the cluster network)

256 GB NVMe SSD (for the Proxmox OS)

2 x 240 GB enterprise SSD 2.5" (for the Ceph pool to be created, called VM-Storage)

3 x 3.84 TB enterprise SSD 2.5" (for the Ceph pool to be created, called Live)

4 x 20 TB enterprise HDD 3.5" (for the Ceph pool to be created, called Archive)
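
For reference, here is a rough sketch of the capacity this works out to per pool. It assumes all three nodes carry exactly the disks listed above and that the pools are replicated with size 3 (my assumption, nothing is configured yet). It ignores Ceph overhead and near-full thresholds, so real usable space will be lower.

```python
# Drives per node in GB, taken from the hardware list above.
DRIVES_PER_NODE_GB = {
    "VM-Storage": [240] * 2,
    "Live": [3840] * 3,
    "Archive": [20000] * 4,
}
NODES = 3
REPLICA_SIZE = 3  # assumption: replicated pools with size=3


def raw_capacity_gb(pool: str) -> int:
    """Sum of all drives for this pool across all nodes."""
    return sum(DRIVES_PER_NODE_GB[pool]) * NODES


def usable_capacity_gb(pool: str, replica_size: int = REPLICA_SIZE) -> int:
    """Raw capacity divided by the replica count (overhead not included)."""
    return raw_capacity_gb(pool) // replica_size


for pool in DRIVES_PER_NODE_GB:
    print(f"{pool}: raw {raw_capacity_gb(pool)} GB, "
          f"approx. usable {usable_capacity_gb(pool)} GB")
```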

It will be used with two Windows VMs.

The first will run a management VMS; only the VM-Storage pool will be attached to it, providing 120 GB of storage.

The second will run a recording VMS; it will also get 120 GB, along with the full capacity of the "Live" pool and the full capacity of the "Archive" pool.

The idea is to set this up using the following network:

eno1 would be the port behind vmbr0 for management, using:

10.75.92.11/24 for node 1

10.75.92.12/24 for node 2

10.75.92.13/24 for node 3

We would like to use an LACP bond on eno3 and eno4 for VM traffic on all three nodes.

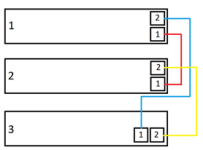

On the fiber card we would like to use a bond in broadcast mode.

The two fiber interfaces of each node would be bonded, using the following IP scheme:

Node 1 192.168.100.11/24

Node 2 192.168.100.12/24

Node 3 192.168.100.13/24
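
To rule out a plain addressing mistake, the plan can be sanity-checked with a short script. This is only a sketch using Python's standard `ipaddress` module; the dictionary names and the helper function are made up for illustration. It confirms that each plan sits in a single subnet with no duplicate addresses and that the management and mesh subnets do not overlap.

```python
import ipaddress

# Address plans copied from this post.
MGMT = {
    "node1": "10.75.92.11/24",
    "node2": "10.75.92.12/24",
    "node3": "10.75.92.13/24",
}
MESH = {
    "node1": "192.168.100.11/24",
    "node2": "192.168.100.12/24",
    "node3": "192.168.100.13/24",
}


def plan_network(plan):
    """Return the single subnet shared by all nodes, or raise if inconsistent."""
    ifaces = [ipaddress.ip_interface(addr) for addr in plan.values()]
    networks = {iface.network for iface in ifaces}
    if len(networks) != 1:
        raise ValueError(f"nodes are in different subnets: {networks}")
    if len({iface.ip for iface in ifaces}) != len(ifaces):
        raise ValueError("duplicate IP address in plan")
    return networks.pop()


mgmt_net = plan_network(MGMT)
mesh_net = plan_network(MESH)
print("management:", mgmt_net)
print("mesh:", mesh_net)
print("overlap:", mgmt_net.overlaps(mesh_net))
```

The addressing itself checks out, so the problem is more likely in how the bond behaves than in the IP plan.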

However, we want everything to keep running as it should, and at high speed across the cluster, when one of a NIC's interfaces is unplugged.

The bond was created and is pingable. When we unplug the top interface of the NIC in node 1, it still works.

Unplugging the bottom interface instead makes it fail.

I'm assuming this has something to do with ARP and/or ip route.

Am I doing something wrong here, or is this not the best way to proceed?

What would be the best and fastest setup?

I would really appreciate it if someone could assist!

I have been working on this for weeks now and would like to see it finished.

Thank you for any assistance.
