[Newbie useless git alert]

I have been running Proxmox for a couple of years, muddling through. It has become apparent that I haven't got a clue what I'm doing with regard to storage.

I currently have 2 VMs (Home Assistant and Blue Iris) and 3 CTs (Plex, Frigate and Pi-hole).

I want to move to Agent DVR for my surveillance, but I seem not to have any available space on my 10TB of disk, which (to my mind) means I'm not using it correctly.

I'd like to have 2 large storage areas on my filesystem: one for Plex media and one for CCTV recordings.

I would ideally like to keep my existing HA VM, the Plex CT, and the BI setup until I have Agent DVR set up and running. The Frigate CT can go for now, though I want to play with it in the future.

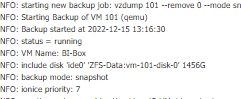

Looking at the Plex CT, for example (I keep missing recordings due to "out of space"):

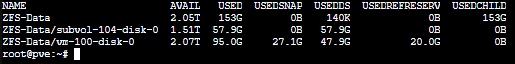

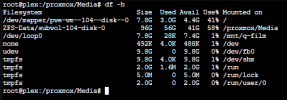

Yet this is a different size to the CT volume.

And in the console:

So, my questions.

What is the "best" way of assigning storage? Is it ZFS, plain directories, or thin volumes? And can I achieve this without starting again from a bare-metal install?

Is it possible to give the containers and VMs access to one big bucket of space and let them use what they need?

This is my hardware:

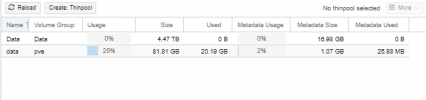

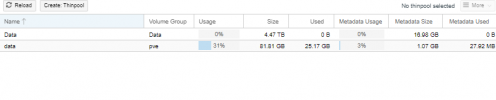

LVM:

LVM-Thin:

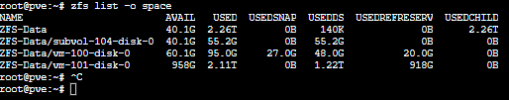

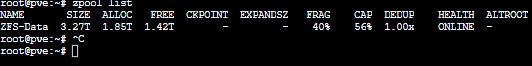

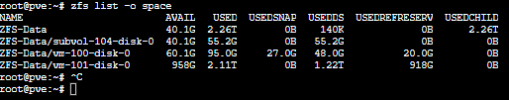

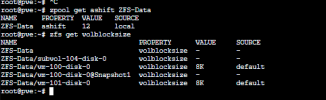

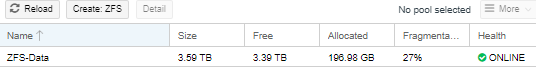

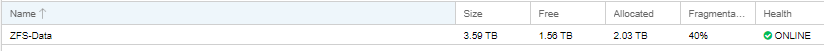

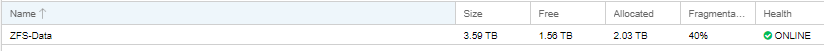

ZFS:

I accept that I should have come here first, so my apologies for trying to do it for myself!

I have been running proxmox for a couple of years, and muddling by. It has become apparent that I haven't got a clue what I'm doing with regard to storage.

I currently have 2 VMs (Home assistant and Blue Iris) and 3 CTs (Plex, Frigate and PiHole)

I want to move to Agent DVR for my surveillance and I seem not to have any available space on my 10TB of disk, even though (to my mind) I'm not using it correctly

I'd like to have 2 large storage areas on my filesystem, one for plex media, and one for CCTV recordings.

I would ideally like to keep my existing HA VM, and the Plex CT, and the BI setup, until I have the AgentDVR setup and running. The Frigate can go, but I want to play with that in the future.

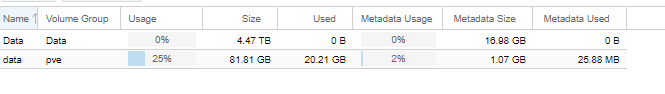

Looking at the Plex CT for example (I keep missing recordings due to "out of space":

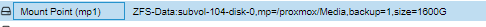

Yet, this is a different size to the ct volume

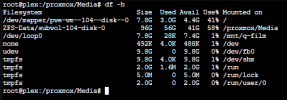

And in the console:

So, my questions.

What is the "best" way of assigning storage? is it ZFS, or just as Directories? or thin volumes? And can I achieve this without starting again from a bare metal install?

Is it possible to give the containers and VMs access to a big bucket of space, and for them to use what they need?

This is my hardware:

LVM:

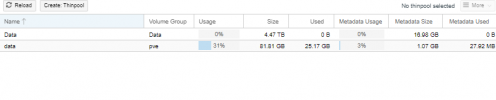

LVM-Thin:

ZFS:

I accept that I should have come here first, so my apologies for trying to do it for myself!