Good morning everyone,

I could use your help in designing the best setup for my current Proxmox environment.

Here's the current scenario:

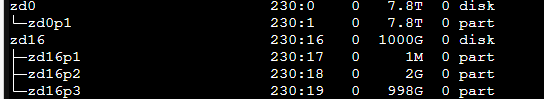

- I'm running Proxmox VE with 4 x 1TB disks.

- I've created a ZFS pool in a RAID10 layout (two striped mirror vdevs), named VMS, with ~2TB of usable space.

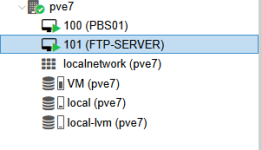

- I'm virtualizing a Proxmox Backup Server (PBS) inside this PVE.

- I’d like to use the VMS pool to allocate virtual disks for PBS and also for other virtual machines, such as one that provides Samba file sharing.
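For reference, here is a rough sketch of where the ~2TB figure comes from. It assumes two-way mirrors and ignores ZFS metadata overhead and the TB/TiB difference; the function name is just something I made up for illustration.

```python
def striped_mirror_usable_tb(disk_count: int, disk_tb: float, mirror_width: int = 2) -> float:
    """Approximate usable capacity of a ZFS striped-mirror (RAID10-style) pool.

    Each mirror vdev contributes the capacity of a single disk, and the
    pool stripes across all mirror vdevs.
    """
    if disk_count % mirror_width != 0:
        raise ValueError("disk_count must be a multiple of mirror_width")
    mirror_vdevs = disk_count // mirror_width
    return mirror_vdevs * disk_tb


# 4 x 1TB disks in two-way mirrors: 2 mirror vdevs, 1TB each
print(striped_mirror_usable_tb(4, 1.0))
```

So half of the raw 4TB is spent on redundancy, and each mirror pair should survive the loss of one disk.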

However, I'm facing issues:

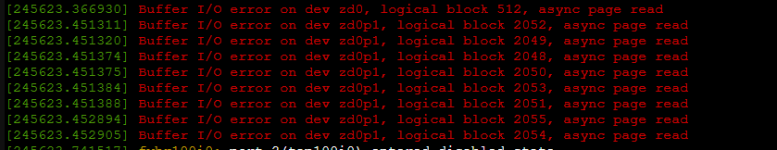

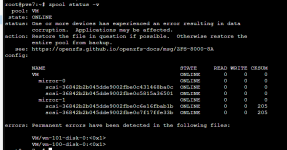

- The PBS virtual disk stored in the VMS pool is showing errors.

- Another VM using the same pool (Samba server) is reporting I/O errors.

- The `zpool status` command reports that the VMS pool is DEGRADED.
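To narrow down which disk is behind the degraded state, something like the sketch below could pull the unhealthy rows out of the `zpool status` text. The sample output and the device names (`sda` to `sdd`) are made up for illustration and are not my actual output; the helper name `unhealthy_devices` is also hypothetical.

```python
# States that zpool status can report for a pool, vdev, or disk.
STATES = {"ONLINE", "DEGRADED", "FAULTED", "OFFLINE", "UNAVAIL", "REMOVED"}

# Illustrative sample only (hypothetical devices and counters).
SAMPLE = """\
  pool: VMS
 state: DEGRADED
config:

        NAME        STATE     READ WRITE CKSUM
        VMS         DEGRADED     0     0     0
          mirror-0  ONLINE       0     0     0
            sda     ONLINE       0     0     0
            sdb     ONLINE       0     0     0
          mirror-1  DEGRADED     0     0     0
            sdc     ONLINE       0     0     0
            sdd     FAULTED      3    12     0  too many errors

errors: No known data errors
"""


def unhealthy_devices(status_text):
    """Return (name, state, read, write, cksum) for each config row that is
    not ONLINE or that has a non-zero error counter."""
    rows = []
    in_config = False
    for line in status_text.splitlines():
        stripped = line.strip()
        if stripped.startswith("config:"):
            in_config = True
            continue
        if stripped.startswith("errors:"):
            in_config = False
            continue
        if not in_config:
            continue
        parts = stripped.split()
        # Skip blank lines and the NAME/STATE header row.
        if len(parts) < 5 or parts[1] not in STATES:
            continue
        name, state, read, write, cksum = parts[:5]
        if state != "ONLINE" or (read, write, cksum) != ("0", "0", "0"):
            rows.append((name, state, read, write, cksum))
    return rows


for row in unhealthy_devices(SAMPLE):
    print(row)
```

On the real host the text could come from `subprocess.run(["zpool", "status", "VMS"], capture_output=True, text=True).stdout` instead of the sample string. A single disk showing FAULTED or non-zero READ/WRITE/CKSUM counters would point toward hardware (disk, cable, or controller) rather than the way virtual disks are allocated from the pool.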

Given this situation, what would be the best approach to using ZFS in this setup? Should I reconsider how I'm allocating virtual disks from the pool, or is it more likely a hardware or configuration issue with ZFS?

Any suggestions or insights would be greatly appreciated.

Thank you in advance!