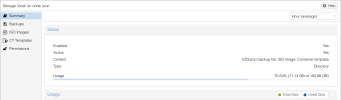

How used storage is calculated depends on what kind of storage it is. It is sometimes possible that e.g. `df -h` doesn't report disk usage accurately, because there might be some additional context that the command cannot take into account.

For example, if your storage is managed via an LVM thin pool, `df` might report misleading numbers, because it's not aware of LVM's logical volumes, etc. Now if you have, let's say, a storage of type "directory" that is on top of ext4, which in turn is on top of an LVM LV, the storage will (or rather, can) usually only see what the ext4 filesystem reports to it.

So, it's not as straightforward or simple as it might seem.

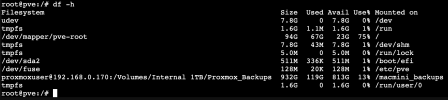
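To make "only sees what the filesystem reports" a bit more tangible: `df` builds its numbers from the block counts that the mounted filesystem hands back, and you can look at those raw counts yourself. This is only a minimal sketch, assuming GNU coreutils `stat`; the function name `fs_report` is made up for illustration.

```shell
#!/bin/sh
# fs_report is a hypothetical helper name, not an existing tool.
# Assumes GNU coreutils stat; -f queries the filesystem instead of the file.
fs_report() {
    # %S = block size, %b = total blocks, %f = free blocks,
    # %a = free blocks available to unprivileged users
    out=$(stat -f -c '%S %b %f %a' "$1") || return 1
    set -- $out
    bsize=$1 total=$2 free=$3 avail=$4
    echo "size_bytes=$((bsize * total))"
    echo "used_bytes=$((bsize * (total - free)))"
    echo "avail_bytes=$((bsize * avail))"
}

# Report on the path given as first argument, or on / by default.
fs_report "${1:-/}"
```

The figures should line up with what `df -B1 /` prints. Anything the filesystem itself doesn't know about (a thin pool underneath, for instance) cannot show up in either of them.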

To show a more concrete example, let me demonstrate a similar thing using the ZFS root pool of my workstation:

Code:

    # zfs list -r rpool
    NAME               USED  AVAIL  REFER  MOUNTPOINT
    rpool              118G   603G   104K  /rpool
    rpool/ROOT         118G   603G    96K  /rpool/ROOT
    rpool/ROOT/pve-1   118G   603G   118G  /
    rpool/data          96K   603G    96K  /rpool/data

Code:

    # df -h | head -n 1; df -h | grep rpool
    Filesystem        Size  Used Avail Use% Mounted on
    rpool/ROOT/pve-1  721G  118G  604G  17% /
    rpool             604G  128K  604G   1% /rpool
    rpool/ROOT        604G  128K  604G   1% /rpool/ROOT
    rpool/data        604G  128K  604G   1% /rpool/data

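As you can see, my pool has approximately 603G of space available. But comparing the outputs of the two commands, you can see that `df` thinks that `rpool` isn't actually using any space, while `zfs list` shows 118G used for it. `zfs list` includes the space used by child datasets in USED, whereas `df` only looks at each mounted dataset on its own. The way `zfs list` and `df` report used and available storage just differs in that regard.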
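One way to reconcile the two outputs: as far as I know, the "Size" that `df` prints for a ZFS dataset is just that dataset's own referenced space plus the free space shared by the whole pool. That would explain why `rpool/ROOT/pve-1` shows up as 721G while the nearly empty datasets show 604G. Plain shell arithmetic with the numbers from the `zfs list` output above (rounded to whole GiB, so being off by one against `df` is expected):

```shell
# Numbers copied from the zfs list output above (GiB, rounded).
refer_pve1=118   # REFER of rpool/ROOT/pve-1
avail=603        # AVAIL, shared by every dataset in the pool

# What df shows as "Size" for rpool/ROOT/pve-1:
df_size=$((refer_pve1 + avail))
echo "${df_size}G"
```

So the "Size" column isn't a fixed capacity here; it moves whenever any dataset in the pool grows or shrinks.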

I think you get the point - many technologies intermingling can lead to discrepancies.

Now, in regards to @ssspinball's case, there could be a couple of different things going on:

- Mountpoint shadowing. You already checked this from what I understand, but I'm still posting it for completeness' sake: Maybe something was written to a directory where a drive or network share was later mounted. You can bind-mount your `/` to a second location like this:

Code:

    # mkdir -p /root-alt
    # mount -o bind / /root-alt

You can then check if there's something in `/root-alt/macmini_backups` that's been hidden by your network share.

- Inode exhaustion. Your filesystem usually has a limited number of inodes. Running out of them is unlikely unless you have lots of little files. You can check this via `df -i`.

- As @alexskysilk mentioned, you might share the space of your root partition with something else. If you're on LVM, you can check via `lvs`, `lvdisplay`, `vgs`, `vgdisplay`, `pvs` and `pvdisplay`. On ZFS, you can check your datasets / pools via `zfs list`. It really depends on what you're using.

- Also unlikely: Something on your system is holding files open that have already been deleted (e.g. large log files), so they still take up space on disk. You can check this via `lsof`:

Code:

    # lsof -n | grep deleted &> lsof.txt
    # less lsof.txt

(Redirecting to a file here because the output may be large.) The 8th column (usually) contains the file size in bytes. These deleted-but-still-present files also count towards the "orphaned" inode count (see the inode point above, IIRC), meaning they're still allocated on the disk and will only actually be removed once whatever program keeps them open stops using them.
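For the deleted-but-still-open case, here's a rough way to get a single total instead of reading through the `lsof` output. It's a sketch under a few assumptions: Linux with `/proc`, GNU `find` and `stat`, and the function name `deleted_open_bytes` is made up. It doesn't use `lsof` at all; it walks the file descriptor links in `/proc` instead.

```shell
#!/bin/sh
# deleted_open_bytes is a hypothetical helper name. Linux-only: relies on
# /proc/<pid>/fd symlinks, whose targets end in " (deleted)" once the file
# has been unlinked. Run as root to see the files of every process.
# Caveat: deleted files on tmpfs are counted too, although they live in RAM.
deleted_open_bytes() {
    # Skip memfd entries; they look deleted but never lived on disk.
    find /proc/[0-9]*/fd -lname '*(deleted)' ! -lname '/memfd:*' 2>/dev/null |
    while read -r fd; do
        # -L follows the /proc link to the still-open file; print
        # device:inode and size so that duplicates can be dropped.
        stat -L -c '%d:%i %s' "$fd" 2>/dev/null
    done |
    sort -u |
    awk '{ sum += $2 } END { printf "%.0f\n", sum }'
}

# Print the total number of bytes held by deleted-but-open files.
deleted_open_bytes
```

If the total comes anywhere near the amount of space you're missing, restarting the process that holds the files open should free it.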

That's all I can think of off the top of my head. If anybody has any other ideas, please let me know.

@ssspinball, if you're willing to provide more details on your storage configuration, I could perhaps help you figure out what's going on:

    cat /etc/pve/storage.cfg

Also, for the curious:

Here's a great thread on SO that goes in depth about measuring disk usage.