What is the process for adding an HBA controller to Proxmox? I've read all the docs I could find and still can't figure it out.

My setup: an HP DL380p G8 with an LSI Logic MegaRAID SAS 9286-8e 8-port 6Gb/s PCIe 3.0 SATA+SAS RAID controller and two NetApp DS4246 JBODs.

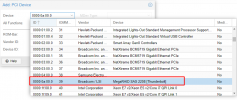

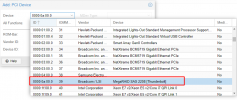


Proxmox sees any logical volumes I create on the internal HP 420i controller, and it has no problem seeing the NVMe drive in one of the PCIe slots. However, when I add the HBA to the VM as a PCI device, I see it in the list and can add it, but the VM will not start: it attempts to start and then shuts down.

When I first start up the HP server itself, I can see during POST that the LSI card detects the drives in the JBODs. I have made sure that no arrays are configured and no boot device is selected in the controller itself.

The VM uses the q35 machine type, since that is the one that supports PCI Express. However, no matter which options I select when adding the device, the result is the same: the VM starts and immediately shuts back down with this error:

```
kvm: -device vfio-pci,host=0000:0a:00.0,id=hostpci0,bus=ich9-pcie-port-1,addr=0x0,rombar=0: vfio 0000:0a:00.0: failed to setup container for group 39: Failed to set iommu for container: Operation not permitted
TASK ERROR: start failed: QEMU exited with code 1
```
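The error names IOMMU group 39, and the kernel exposes the members of each group as entries under `/sys/kernel/iommu_groups/<group>/devices`. A minimal Python sketch, assuming that standard sysfs layout (the helper name `iommu_group_devices` is hypothetical), that prints every PCI address in that group so you can see whether anything besides the controller at `0000:0a:00.0` is in it:

```python
import os
import sys


def iommu_group_devices(group, sysfs_root="/sys"):
    """Return the sorted PCI addresses of all devices in one IOMMU group.

    The kernel creates one entry per device, named after its PCI address,
    in <sysfs_root>/kernel/iommu_groups/<group>/devices.
    """
    path = os.path.join(sysfs_root, "kernel", "iommu_groups", str(group), "devices")
    return sorted(os.listdir(path))


if __name__ == "__main__":
    # Default to group 39 from the QEMU error; pass another group as argv[1].
    group = sys.argv[1] if len(sys.argv) > 1 else "39"
    try:
        for addr in iommu_group_devices(group):
            print(addr)
    except OSError as exc:
        # Missing directory usually means no such group (or no active IOMMU).
        print(f"could not read IOMMU group {group}: {exc}")
```

Run it on the Proxmox host (not inside the VM), for example `python3 iommu_group.py 39`.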

I have IOMMU enabled in `/etc/default/grub`:

```
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt"
```

If I run `# lshw -class disk -class storage`, I can see the controller:

```
*-raid
     description: RAID bus controller
     product: MegaRAID SAS 2208 [Thunderbolt]
     vendor: Broadcom / LSI
     physical id: 0
     bus info: pci@0000:0a:00.0
     version: 05
     width: 64 bits
     clock: 33MHz
     capabilities: raid pm pciexpress vpd msi msix cap_list rom
     configuration: driver=vfio-pci latency=0
     resources: irq:16 ioport:6000(size=256) memory:f7ff0000-f7ff3fff memory:f7f80000-f7fbffff memory:f7f00000-f7f1ffff
```
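A change to `/etc/default/grub` only takes effect after running `update-grub` and rebooting, so it can help to confirm what the running kernel actually booted with. A minimal Python sketch, assuming the standard `/proc/cmdline` and sysfs paths (the helper names `iommu_flags`, `iommu_active`, and `bound_driver` are hypothetical), that reports the IOMMU options on the live kernel command line, whether any IOMMU groups exist, and which driver is bound to `0000:0a:00.0`:

```python
import os


def iommu_flags(cmdline):
    """Return the IOMMU-related options found on a kernel command line."""
    # Prefixes match the options used in the GRUB line (intel_iommu=on iommu=pt).
    wanted = ("intel_iommu=", "iommu=")
    return [opt for opt in cmdline.split() if opt.startswith(wanted)]


def iommu_active(sysfs_root="/sys"):
    """True if the kernel has created at least one IOMMU group."""
    groups = os.path.join(sysfs_root, "kernel", "iommu_groups")
    return os.path.isdir(groups) and len(os.listdir(groups)) > 0


def bound_driver(pci_addr, sysfs_root="/sys"):
    """Return the name of the driver bound to a PCI device, or None."""
    # <device>/driver is a symlink to .../bus/pci/drivers/<name> when bound.
    link = os.path.join(sysfs_root, "bus", "pci", "devices", pci_addr, "driver")
    if not os.path.islink(link):
        return None
    return os.path.basename(os.readlink(link))


if __name__ == "__main__":
    try:
        with open("/proc/cmdline") as f:
            print("IOMMU options:", iommu_flags(f.read()))
    except OSError:
        print("could not read /proc/cmdline")
    print("IOMMU groups present:", iommu_active())
    print("driver for 0000:0a:00.0:", bound_driver("0000:0a:00.0"))
```

If the GRUB change is live, you would expect to see both `intel_iommu=on` and `iommu=pt`, groups present, and `vfio-pci` as the driver (matching the `driver=vfio-pci` that lshw reports).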

What am I missing? I do not want the JBOD drives combined into an array; I want each drive presented individually. How do I get Proxmox to recognize the drives in the JBODs?

Thanks in advance for any help.
