Alright, here are some weird log messages from Proxmox 7.3, in case they're an immediate indication of a problem, plus lots of detail about my setup. The log excerpt is a hand-picked selection of events, not contiguous, and one of the offsets appears numerous times. The 'storage' pool has 3 WD140EDGZ drives, and all 3 have messages about extremely long deadman delays. (The longest are over 114 days! The rest are around 5-10 minutes.)

I have a very small homelab setup with a single server running Proxmox 7.3 with 3 VMs. (I can't get more specific than that because I upgraded to 7.4 today...)

One of the VMs is a file server running Windows Server 2022 and the Red Hat VirtIO guest driver package v0.1.229. For storage, I have two VM disks, both on the same ZFS RAIDZ1 pool of 3x WD140EDGZ drives. (One of the disks is backed up to the cloud.) The disks are formatted with NTFS.

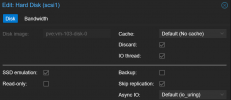

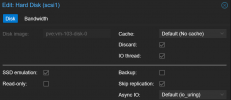

Both VM disks are using VirtIO SCSI with the settings as follows: Cache=Default (No cache), Discard=checked, IO thread=checked, SSD emulation=checked, Read-only=unchecked, Backup=unchecked, Skip replication=checked, Async IO=Default (io_uring).
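For anyone comparing notes, here's a rough sketch of how those GUI settings map onto the disk line Proxmox stores in the VM config (viewable with `qm config <vmid>`). It's Python only to keep the example self-contained; the slot number and the volume name `storage:vm-100-disk-1` are hypothetical placeholders, the real line will also carry a `size=` entry, and the options are sorted here for readability, which may not match Proxmox's exact output.

```python
# Hypothetical sketch: turn the GUI disk settings listed above into the
# "scsiN:" line format used in /etc/pve/qemu-server/<vmid>.conf.
# The volume name passed in below is a placeholder, not from this server.


def scsi_disk_line(slot: int, volume: str, settings: dict) -> str:
    """Build a Proxmox-style 'scsiN: ...' disk line from GUI-style settings."""
    opts = {}
    # Defaults (cache=none, aio=io_uring, read-write) are not written out.
    if settings.get("read_only"):
        opts["ro"] = "1"
    if settings.get("discard"):
        opts["discard"] = "on"
    if settings.get("iothread"):
        opts["iothread"] = "1"
    if settings.get("ssd"):
        opts["ssd"] = "1"
    if not settings.get("backup", True):
        opts["backup"] = "0"       # "Backup" unchecked
    if settings.get("skip_replication"):
        opts["replicate"] = "0"    # "Skip replication" checked
    # Sorted keys give a stable, readable line.
    parts = [volume] + [f"{k}={v}" for k, v in sorted(opts.items())]
    return f"scsi{slot}: " + ",".join(parts)


settings = {"discard": True, "iothread": True, "ssd": True,
            "read_only": False, "backup": False, "skip_replication": True}
print(scsi_disk_line(1, "storage:vm-100-disk-1", settings))
```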

Performance of this setup is both bad and inconsistent. While transferring a single big file, it fluctuates between around 50-100MB/sec over gigabit ethernet. These drives are fast enough that I originally expected it to saturate the link (112MB/sec or so). I've tried troubleshooting it before and failed. (I don't have enough storage to change the design without losing data or paying to restore from my cloud backup.) Regardless, it's still fast enough for my use case of basic photo/video/document storage, so I decided to just live with it.
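The ~112MB/sec ceiling can be reproduced with some quick arithmetic. This is a minimal sketch assuming a 1500-byte MTU, no VLAN tag, IPv4 without options, and a plain 20-byte TCP header (optionally plus 12 bytes of TCP timestamps); it ignores SMB protocol overhead, so real transfers land a little lower. The constant and function names are just illustrative.

```python
# Rough ceiling for one TCP stream over gigabit Ethernet.
# Assumptions: 1500-byte MTU, no VLAN tag, IPv4 without options,
# and a 20-byte TCP header (+12 bytes if TCP timestamps are enabled).

LINE_RATE_BPS = 1_000_000_000      # gigabit line rate, bits per second
MTU = 1500                         # bytes of IP packet carried per frame
ETH_OVERHEAD = 8 + 14 + 4 + 12     # preamble/SFD + MAC header + FCS + inter-frame gap
IP_HEADER = 20
TCP_HEADER = 20
TCP_TIMESTAMPS = 12                # timestamp option, padded to 12 bytes


def tcp_goodput(timestamps: bool = False) -> float:
    """Return the maximum TCP payload rate in bytes per second."""
    payload = MTU - IP_HEADER - TCP_HEADER - (TCP_TIMESTAMPS if timestamps else 0)
    wire_bytes = MTU + ETH_OVERHEAD  # bytes each frame occupies on the wire
    return LINE_RATE_BPS / 8 * payload / wire_bytes


for ts in (False, True):
    rate = tcp_goodput(ts)
    print(f"timestamps={ts}: {rate / 1e6:.1f} MB/s = {rate / 2**20:.1f} MiB/s")
```

That works out to roughly 117.7-118.7 MB/s, or about 112-113 MiB/s. Windows reports transfer speeds in binary units while labelling them MB, which is likely where the familiar ~112 figure comes from.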

Originally, I was using the onboard SATA ports for the storage. In January, I got an LSI 9201-8i pre-flashed in IT mode (courtesy of theartofserver) so that I could rule out the H97 chipset as the source of the speed problems. Speed didn't improve, but nothing seemed to get worse, and I got more ports out of it, so I moved on.

Since then, some files have gone missing, seemingly at random. I found a hidden and nearly inaccessible folder called "found.001" with most of the missing files inside (though probably in varying states of completeness). This suggests that the NTFS file table is getting corrupted somehow. Presumably, the writes that take nearly 4 months to complete are related...

My only ideas at the moment are putting a fan on the LSI card or trying different SAS->SATA cables. The Windows file server crashed with kernel bug check 0x0000007E (SYSTEM_THREAD_EXCEPTION_NOT_HANDLED) several hours after I started a backup while a zfs scrub was running in the background, so I guess I at least have a way to reproduce the problem and confirm whether it's been fixed, lol.

```
Jul 09 07:17:03 pve zed[374088]: eid=100 class=deadman pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_9MGWXR5J-part1 size=323584 offset=2499019612160 priority=0 err=0 flags=0x40080c80 delay=9861900884ms
Jul 09 07:17:03 pve zed[374092]: eid=102 class=deadman pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KUYX8D-part2 size=270336 offset=2499019608064 priority=0 err=0 flags=0x40080c80 delay=9861900884ms
Jul 09 07:17:03 pve zed[374211]: eid=141 class=deadman pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KTNW2C-part2 size=4096 offset=2499019603968 priority=0 err=0 flags=0x180880 delay=9861900883ms bookmark=2879:1:0:380361960
Jul 09 07:18:05 pve zed[2663]: Missed 18 events
Jul 09 07:18:05 pve zed[2663]: Bumping queue length to 2048
Jul 09 07:18:09 pve zed[2663]: Missed 59 events
Jul 09 07:18:09 pve zed[2663]: Bumping queue length to 16384
Jul 09 07:18:58 pve zed[374890]: eid=266 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KTNW2C-part2 size=4096 offset=2499019550720 priority=0 err=0 flags=0x180880 delay=427946ms bookmark=2879:1:0:380361950
Jul 09 07:18:58 pve zed[374848]: eid=252 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KUYX8D-part2 size=4096 offset=2499019526144 priority=0 err=0 flags=0x180880 delay=427910ms bookmark=2879:1:0:380361946
Jul 09 07:18:58 pve zed[374869]: eid=259 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_9MGWXR5J-part1 size=4096 offset=2499019534336 priority=0 err=0 flags=0x180880 delay=427927ms bookmark=2879:1:0:380361947
Jul 09 07:18:58 pve zed[374845]: eid=251 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KTNW2C-part2 size=1048576 offset=2514958422016 priority=2 err=0 flags=0x40080c80 delay=427938ms
Jul 09 07:18:58 pve zed[374903]: eid=270 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_Y5KUYX8D-part2 size=1044480 offset=12997430239232 priority=2 err=0 flags=0x40080c80 delay=427988ms
Jul 09 07:18:58 pve zed[374899]: eid=269 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_9MGWXR5J-part1 size=323584 offset=2499019612160 priority=0 err=0 flags=0x40080c80 delay=427956ms
```
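To sanity-check the delay figures and count repeated offsets, a minimal Python sketch can parse zed event lines like these. It assumes the `key=value` layout shown in this excerpt; `parse_zed_event` and `human_delay` are hypothetical helper names, not part of ZFS or zed, and `str.removesuffix` needs Python 3.9+.

```python
import re
from collections import Counter

# Matches key=value pairs in a zed event line; values may be single-quoted.
FIELD_RE = re.compile(r"(\w+)=('[^']*'|\S+)")


def parse_zed_event(line: str) -> dict:
    """Return the key=value fields from one zed log line ({} if it has none)."""
    _, _, body = line.partition("]: ")  # drop the "Jul 09 ... zed[pid]" prefix
    return {key: value.strip("'") for key, value in FIELD_RE.findall(body)}


def human_delay(ms: int) -> str:
    """Format a millisecond delay as days, hours, minutes and seconds."""
    secs = ms // 1000
    days, secs = divmod(secs, 86400)
    hours, secs = divmod(secs, 3600)
    mins, secs = divmod(secs, 60)
    return f"{days}d {hours:02}h {mins:02}m {secs:02}s"


# Three lines copied from the excerpt above.
LOG = """\
Jul 09 07:17:03 pve zed[374088]: eid=100 class=deadman pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_9MGWXR5J-part1 size=323584 offset=2499019612160 priority=0 err=0 flags=0x40080c80 delay=9861900884ms
Jul 09 07:18:05 pve zed[2663]: Missed 18 events
Jul 09 07:18:58 pve zed[374899]: eid=269 class=delay pool='storage' vdev=ata-WDC_WD140EDGZ-11B1PA0_9MGWXR5J-part1 size=323584 offset=2499019612160 priority=0 err=0 flags=0x40080c80 delay=427956ms
"""

# Keep only real events (lines such as "Missed 18 events" have no fields).
events = [e for e in map(parse_zed_event, LOG.splitlines()) if "eid" in e]
for e in events:
    delay_ms = int(e["delay"].removesuffix("ms"))
    print(e["eid"], e["class"], e["vdev"], e["offset"], human_delay(delay_ms))

# Count how often each offset shows up across events.
offsets = Counter(e["offset"] for e in events)
print(offsets.most_common(3))
```

This only restates what zed reported (the 9861900884ms delay is about 114 days); it doesn't explain why the delay is that large.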
