Hello.

I know this could be a shot in the dark, but I will try to explain it as clearly as I can.

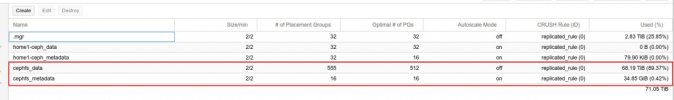

I have a 4-node PVE cluster with Ceph (Quincy) installed.

All 4 nodes have 3 SATA 8TB drives each. (I know this is a bad idea, but that is what I have for the moment.)

Nodes 1, 2 and 4 have 1 NVMe drive each.

We created a pool with these 3 NVMe drives but have decided to remove it.

However, when I try to stop the OSD in order to remove it, I get this message:

unsafe to stop osd(s) at this time (1 PGs are or would become offline)

Any clue?

Thanks a lot.
