Hi all!

There has been a problem with HA since the latest update.

I disabled all VMs via HA and, once they had shut down, applied the latest update.

Then I rebooted all three nodes.

Once the cluster was back up, I began enabling all VMs via HA so that they would start.

A few minutes later I saw that none of the VMs had booted.

I tried to start the VMs manually, but to no avail.

I deleted all HA resources and added them again, and all VMs started!

But I rejoiced too soon!

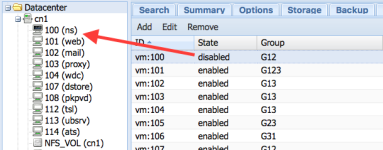

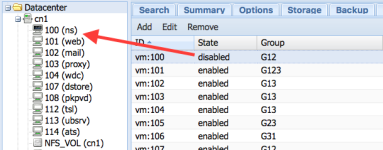

If I disable any VM via HA, the VM shuts down:

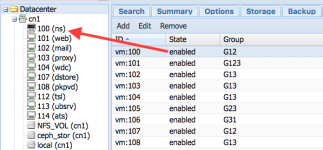

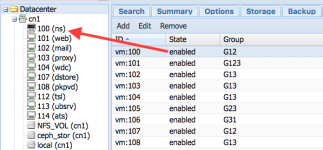

But if I enable this VM in HA again, it does not boot.

Only removing the resource from HA and adding it again allows the VM to boot.

P.S.

```
# pveversion -v
proxmox-ve: 4.1-28 (running kernel: 4.2.6-1-pve)
pve-manager: 4.1-2 (running version: 4.1-2/78c5f4a2)
pve-kernel-4.2.6-1-pve: 4.2.6-28
pve-kernel-4.2.3-2-pve: 4.2.3-22
lvm2: 2.02.116-pve2
corosync-pve: 2.3.5-2
libqb0: 0.17.2-1
pve-cluster: 4.0-29
qemu-server: 4.0-42
pve-firmware: 1.1-7
libpve-common-perl: 4.0-42
libpve-access-control: 4.0-10
libpve-storage-perl: 4.0-38
pve-libspice-server1: 0.12.5-2
vncterm: 1.2-1
pve-qemu-kvm: 2.4-18
pve-container: 1.0-35
pve-firewall: 2.0-14
pve-ha-manager: 1.0-15
ksm-control-daemon: 1.2-1
glusterfs-client: 3.5.2-2+deb8u1
lxc-pve: 1.1.5-5
lxcfs: 0.13-pve2
cgmanager: 0.39-pve1
criu: 1.6.0-1
fence-agents-pve: 4.0.20-1
```

---

Best regards!

Gosha