Hello All,

I figure I am missing something small, so I hope someone can help me.

Setup:

2 Dell OptiPlex mini PCs, each with 2 NICs: one NIC on the standard network and another dedicated to Ceph.

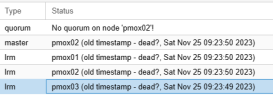

I configured the cluster and Ceph with 2 nodes and added my Proxmox Backup Server as a QDevice, so the cluster should keep quorum. I also configured a VM with HA. Migrating it between the two nodes works well, although it is slow over a 1 Gb link. However, if I pull the power on one of the nodes, HA does start to move the VM to the other node and tries to start it, but the start fails with the following error: "TASK ERROR: start failed: rbd error: rbd: couldn't connect to the cluster!". The VM will not start until I bring the other node back online. Both nodes are also Ceph monitors.
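For reference, here is a minimal sketch of the majority-vote arithmetic behind "I should be keeping quorum", assuming simple majority voting: the QDevice adds one vote to the Proxmox (corosync) cluster, while Ceph counts monitor quorum separately, over the monitors only. The helper names `majority` and `has_quorum` are hypothetical and only illustrate the counting.

```python
def majority(voters: int) -> int:
    """Smallest number of votes that forms a strict majority of `voters`."""
    return voters // 2 + 1


def has_quorum(voters: int, online: int) -> bool:
    """True if the online voters reach a strict majority (hypothetical helper)."""
    return online >= majority(voters)


# Proxmox cluster (corosync): 2 nodes + 1 QDevice vote = 3 votes in total.
print("cluster quorum with one node down:", has_quorum(voters=3, online=2))

# Ceph monitors: only the 2 nodes run a monitor; the QDevice is not one.
print("ceph monitor quorum with one node down:", has_quorum(voters=2, online=1))
```

Under these assumptions, the first check prints `True` and the second prints `False`: the cluster keeps quorum when one node loses power, but a set of 2 monitors needs both online to form a majority.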

Thanks in advance,

AJ