Hi,

Today we tried rebooting our nodes one by one. We used ha-manager crm-command node-maintenance enable, as we have done several times before. However, this time it seemed to migrate too many VMs to the same node, which caused that node to crash due to its memory usage. I have just read up on the CRS settings, and according to the documentation this should not have happened, since node resources should already be checked when VMs are migrated.

We have the following related settings:

- Cluster Resource Scheduling: ha-rebalance-on-start=1, ha=static

- PVE version: 8.3.2

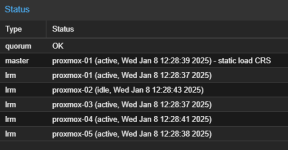

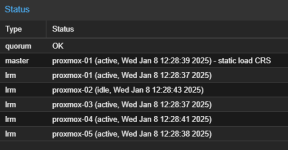

- HA status:

Has anyone had the same issue before? Do we need to tweak or recheck any settings?

Please let me know if more information is needed.

Thanks in advance!

Regards,

Demi
