Hello everyone, sorry for the lengthy post, but I hope it will be helpful to others.

We've been playing around with Proxmox for the past few weeks in hopes to replace our current VMware installation. Here is our current situation :

We have 2 Dell servers and 2 Dell SANs. Our goal is to re-use those with Proxmox in the backend. Luckily we had 2 older Dell servers on our hand to do some proof of concept before going into production. We encountered some issue when it came down to the storage.

Keep in mind that have 8 ethernet connection on both SAN, 4 per cards, and those are also split into 2 subnets. All for redundancy purposes but it will play a role in the configuration.

Here's what we wanted from the PoC :

The idea behind this PoC is to connect each server to a specific SAN, make a zpool with the same name on each of them, then create a Datacenter ZFS Storage with that zpool name.

I will go into more details in a minute.

With this PoC, we were able to :

I will not go into the SAN volume/initiator configuration because it can change from vendor to vendor but if anyone needs help with a Dell SAN, let me know!

The first thing to do is to connect each host to it's SAN via iSCSI :

This tells the node proxmox1 to connect to the SAN-A via those 2 IP. I will do the same on the node proxmox2 but with different IPs :

This will result in this :

You will need to install 2 software on your nodes:

Once you have our connection made, the trick is to blend them together into one single drive. This is where multipath comes into play. In order to get the drive name, you can either use lsblk, but it can become a bit of a mess once you have a lot of connection to a single SAN. This is why we used this command :

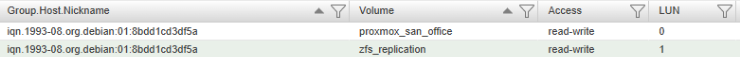

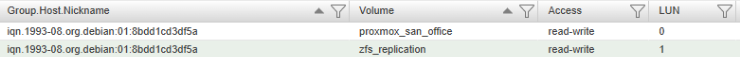

This first part of the info returned shows me which LUNs is selected, in this case [12:0:0:1], i'm using the LUN 1 from this :

Since all of those connection are made to the same LUN on the same SAN, they have a unique identifier, which will be used to get a single path instead of 8. To find this identifier you need to use one of the attributed path, like /dev/sdt from the first line in my previous table :

With this in hand you can ask multipath to create a single one with two simple command :

You can then validate that you have a single path for all those connection :

This gives us a single path: mpathg

Those steps needs to be reproduced on the second node, connecting to the second SAN. In my case, the mpath on the second node was "mpathc"

Once this is done, we can create a zpool on each of the node, with that multipath in mind, using the same name :

Repeat the process on the second node with the mpath attributed earlier:

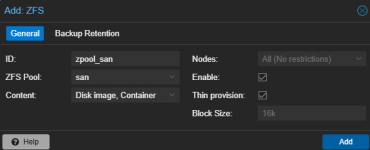

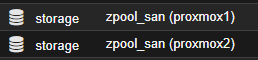

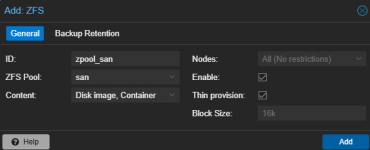

So both pool are currently independent, connecting on a different SAN. The only remaining thing to do is create a Datacenter ZFS Storage :

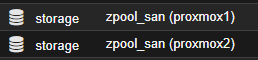

This will end up creating a ZFS pool on both nodes which will allow us to do everything we wanted :

Quick note here, when we tested HA, we disabled the management port of the second node, everything was migrated onto the first node, but when we re-enabled the port, the zpool was greyed out and was giving an error like this :

This is a wrap folks!.. If you have any questions or comments, feel free to post here or send me a private message.

Thanks and have a great day!

We've been experimenting with Proxmox for the past few weeks in the hope of replacing our current VMware installation. Here is our current situation:

We have 2 Dell servers and 2 Dell SANs, and our goal is to reuse them as the backend for Proxmox. Luckily, we had 2 older Dell servers on hand to build a proof of concept (PoC) before going into production. We ran into some issues when it came to the storage.

Keep in mind that each SAN has 8 Ethernet connections, 4 per card, split across 2 subnets. This is all for redundancy, but it plays a role in the configuration.

Here's what we wanted from the PoC:

- ZFS storage, to benefit from snapshots

- Granular replication

- HA

- Reuse of our current infrastructure

The idea behind this PoC is to connect each server to its own SAN, create a zpool with the same name on each server, and then create a Datacenter ZFS storage that uses that pool name.

I will go into more detail below.

With this PoC, we were able to:

- Set up replication between the nodes/SANs

- Migrate VMs from one node to another within seconds

- Activate HA and have it run from the latest snapshot taken by replication

- Take live snapshots of those VMs

I will not go into the SAN volume/initiator configuration because it varies from vendor to vendor, but if anyone needs help with a Dell SAN, let me know!

The first thing to do is to connect each host to its SAN via iSCSI:

```
# Discover the targets behind each portal IP and log in to them
iscsiadm -m discovery -t sendtargets -p 172.19.3.51 --login
iscsiadm -m discovery -t sendtargets -p 172.19.4.51 --login
```

This tells the node proxmox1 to connect to SAN-A through those 2 IPs, one on each subnet. I will do the same on the node proxmox2, but with the second SAN's IPs:

```
iscsiadm -m discovery -t sendtargets -p 172.19.3.61 --login
iscsiadm -m discovery -t sendtargets -p 172.19.4.61 --login
```

On proxmox1, this results in the following node records. Discovering through those 2 IPs found all 8 portals of SAN-A:

```
root@proxmox1:~# iscsiadm -m node
172.19.3.51:3260,1 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.3.52:3260,5 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.3.53:3260,2 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.3.54:3260,6 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.4.51:3260,3 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.4.52:3260,7 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.4.53:3260,4 iqn.1988-11.com.dell:01.array.bc305bf17fa7
172.19.4.54:3260,8 iqn.1988-11.com.dell:01.array.bc305bf17fa7
```

You will need to install 2 packages on your nodes:

```
apt install multipath-tools
apt install lsscsi
```

Once your connections are made, the trick is to merge them into one single drive. This is where multipath comes into play. To get the drive names you could use lsblk, but its output becomes messy once you have many connections to a single SAN. This is why we used this command instead:

Code:

root@proxmox1:/etc/pve# lsscsi -g --transport

[12:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x2 /dev/sdt /dev/sg19

[13:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x2 /dev/sdz /dev/sg27

[14:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x7 /dev/sdaf /dev/sg37

[15:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x7 /dev/sdap /dev/sg49

[16:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x1 /dev/sdar /dev/sg53

[17:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x1 /dev/sdag /dev/sg29

[18:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x8 /dev/sdat /dev/sg52

[19:0:0:1] disk iqn.1988-11.com.dell:01.array.bc305bf17fa7,t,0x8 /dev/sdaq /dev/sg51This first part of the info returned shows me which LUNs is selected, in this case [12:0:0:1], i'm using the LUN 1 from this :
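
Picking the device names out of that table by hand gets tedious with 8 paths per LUN. Here is a minimal sketch of a helper that filters `lsscsi -g --transport` output for one target IQN and one LUN number. It assumes the column layout shown above: address, type, `iqn,t,tpgt`, block device, then sg device. The function name `lun_paths` is hypothetical.

```shell
# Hypothetical helper: print the /dev/sdX paths that point at one LUN of one
# iSCSI target, reading `lsscsi -g --transport` output on stdin.
# Assumed layout per line: [H:C:T:L] type iqn,t,tpgt /dev/sdX /dev/sgN
lun_paths() {
    awk -v iqn="$1" -v lun="$2" '
        {
            # Strip the surrounding brackets from [H:C:T:L] and split it
            addr = substr($1, 2, length($1) - 2)
            n = split(addr, hctl, ":")
            # The transport column is "<iqn>,t,<tpgt>"; keep only the IQN
            split($3, tgt, ",")
            if ($2 == "disk" && n == 4 && hctl[4] == lun && tgt[1] == iqn)
                print $4
        }'
}
```

For example, `lsscsi -g --transport | lun_paths iqn.1988-11.com.dell:01.array.bc305bf17fa7 1` should list the same 8 devices as the table above. Running each of them through `/lib/udev/scsi_id -g -u -d` should then print the same WWID every time.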

Since all of those connections go to the same LUN on the same SAN, they share one unique identifier (the WWID), which multipath uses to build a single device instead of 8 paths. To find this identifier, query one of the assigned device paths, such as /dev/sdt from the first line of the previous output:

```
/lib/udev/scsi_id -g -u -d /dev/sdt
3600c0ff000527ab22b5a7f6801000000
```

With this in hand, you can ask multipath to create a single device with two simple commands:

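```
# Add the WWID to /etc/multipath/wwids so multipath will manage this LUN
multipath -a 3600c0ff000527ab22b5a7f6801000000
# Reload the multipath device maps
multipath -r
```

You can then verify that all of those connections are grouped under a single multipath device: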

```
multipath -ll
mpathg (3600c0ff0005278f85c8d8b6801000000)
size=931G features='0' hwhandler='1 alua' wp=rw
|-+- policy='service-time 0' prio=50 status=active
| |- 14:0:0:1 sdaf 65:240 active ready running
| |- 15:0:0:1 sdap 66:144 active ready running
| |- 16:0:0:1 sdar 66:176 active ready running
| |- 17:0:0:1 sdag 66:0 active ready running
`-+- policy='service-time 0' prio=10 status=enabled
  |- 12:0:0:1 sdt 65:48 active ready running
  |- 13:0:0:1 sdz 65:144 active ready running
  |- 18:0:0:1 sdat 66:208 active ready running
  |- 19:0:0:1 sdaq 66:160 active ready running
```

This gives us a single multipath device: mpathg.
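
With 8 paths per device, checking `multipath -ll` by eye after a reboot or a switch change is error-prone. Here is a small sketch that counts the paths reported as `active ready running` for one map. It assumes user-friendly names (a header line such as `mpathg (3600c0ff...)`) and the tree layout shown above. `count_ready_paths` is a hypothetical name.

```shell
# Hypothetical helper: count the paths of one multipath map that are in the
# "active ready running" state, reading `multipath -ll` output on stdin.
# Assumes user-friendly names, i.e. map headers look like "mpathg (<wwid>) ...".
count_ready_paths() {
    awk -v map="$1" '
        # A header line starts with the map name, followed by "(<wwid>)"
        $1 !~ /^[|`]/ && $2 ~ /^\(/ { inmap = ($1 == map); next }
        # Path lines of the current map that are fully healthy
        inmap && /active ready running/ { n++ }
        END { print n + 0 }'
}
```

On proxmox1, `multipath -ll | count_ready_paths mpathg` should print 8 here. A lower number means some iSCSI sessions or paths are down.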

Those steps need to be repeated on the second node, connecting to the second SAN. In my case, the multipath device on the second node was "mpathc".
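
On both nodes, check that the iSCSI sessions come back after a reboot. Otherwise the multipath device, and the pool on it, will be missing. In open-iscsi this is controlled by `node.startup` in /etc/iscsi/iscsid.conf, which on Debian-based systems usually defaults to `manual`. Here is a minimal sketch that flips that default, assuming the usual `key = value` layout of that file. `set_iscsi_autostart` is a hypothetical name.

```shell
# Hypothetical helper: make node records created by future discoveries log in
# automatically at boot by switching "node.startup = manual" to "automatic".
# The config path is an argument so it can be tried on a copy first.
set_iscsi_autostart() {
    conf="${1:-/etc/iscsi/iscsid.conf}"
    # Only an active (uncommented) manual setting is rewritten
    sed -i 's/^node\.startup[[:space:]]*=[[:space:]]*manual[[:space:]]*$/node.startup = automatic/' "$conf"
}
```

This only changes the default for new discoveries. Node records that already exist, like the ones created above, can be updated with `iscsiadm -m node -o update -n node.startup -v automatic`.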

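Once this is done, we can create a zpool on each node on top of its multipath device, using the same pool name on both nodes:

```
# Pool "san" on the multipath device (not on an individual /dev/sdX path);
# ashift=12 means 4 KiB sectors
zpool create -f -o ashift=12 san /dev/mapper/mpathg
```

Repeat the process on the second node with the multipath device assigned earlier: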

Code:

zpool create -f -o ashift=12 san /dev/mapper/mpathcSo both pool are currently independent, connecting on a different SAN. The only remaining thing to do is create a Datacenter ZFS Storage :
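
For reference, the storage created through the GUI ends up as an entry in /etc/pve/storage.cfg. Below is a sketch of what it could look like. The storage ID `zpool_san` is taken from the error message further down; the content types and node list are assumptions. The same entry can also be created from the shell with `pvesm add zfspool zpool_san --pool san --content images,rootdir --nodes proxmox1,proxmox2`.

```
zfspool: zpool_san
        pool san
        content images,rootdir
        nodes proxmox1,proxmox2
```

The important line is `pool san`. Because both nodes have a local pool with exactly that name, one storage definition resolves to the right pool on each node, which is what replication and migration rely on.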

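This creates one ZFS storage that is available on both nodes and backed by the local `san` pool on each of them, which allows us to do everything we wanted: replication, fast migration, HA and live snapshots.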
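
Before turning on replication and HA, it can help to confirm that the `san` pool is healthy on each node. Here is a small sketch that reads the scripted output of `zpool list -H -o name,health`, which is tab-separated with no header line. The function name and the `MISSING` marker are hypothetical.

```shell
# Hypothetical helper: print the health of one pool from
# `zpool list -H -o name,health` output (tab-separated, no header line).
# Prints MISSING (a made-up marker) when the pool is not listed at all.
pool_health() {
    awk -F '\t' -v pool="$1" '
        $1 == pool { print $2; found = 1 }
        END { if (!found) print "MISSING" }'
}
```

Running `zpool list -H -o name,health | pool_health san` should print `ONLINE` on both proxmox1 and proxmox2.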

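Quick note here: when we tested HA, we disabled the management port of the second node and everything was migrated onto the first node. However, when we re-enabled the port, the zpool storage was greyed out and gave an error like this:

`could not activate storage 'zpool_san', zfs error: import by numeric ID instead (500)`

As the `zpool import` output below shows, proxmox2 can see two importable pools named `san`: one on `mpathf`, last accessed by another system, and one on `mpathc`, the second node's own multipath device. With two pools sharing a name, ZFS refuses to import by name. To let the second node's zpool rejoin the shared ZFS storage, we had to import it manually using its numeric identifier.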
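
Since the two candidates differ only by the device listed under them, picking the right numeric ID can also be scripted. Here is a minimal sketch, assuming the `zpool import` output layout in the listing below: a `pool:` line, an `id:` line, then the vdev tree. `pool_id_for_device` is a hypothetical name.

```shell
# Hypothetical helper: print the numeric ID of the importable pool named $1
# whose config lists the device $2, reading `zpool import` output on stdin.
pool_id_for_device() {
    awk -v pool="$1" -v dev="$2" '
        # Each candidate starts with "pool: <name>" followed by "id: <number>"
        $1 == "pool:" { name = $2; id = ""; next }
        # Keep the 64-bit ID as text rather than converting it to a number
        $1 == "id:"   { id = $2 ""; next }
        # In the config tree, the first field of a vdev line is the device name
        name == pool && id != "" && $1 == dev { print id }'
}
```

On proxmox2, `zpool import "$(zpool import | pool_id_for_device san mpathc)"` would then import the pool on its own multipath device, which is the manual step shown below.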

```
root@proxmox2:~# zpool import
   pool: san
     id: 5156069359417038629
  state: ONLINE
 status: The pool was last accessed by another system.
 action: The pool can be imported using its name or numeric identifier and
        the '-f' flag.
    see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-EY
 config:

        san         ONLINE
          mpathf    ONLINE

   pool: san
     id: 11889099118070538854
  state: ONLINE
 action: The pool can be imported using its name or numeric identifier.
 config:

        san         ONLINE
          mpathc    ONLINE
root@proxmox2:~# zpool import 11889099118070538854
```

That's a wrap, folks! If you have any questions or comments, feel free to post here or send me a private message.

Thanks and have a great day!