Two issues with KVM guests on Proxmox 4.4.1 since updating from the Subscription Repository

1.) On some of our Ubuntu 14.04 LTS guests, processes are being stopped without warning, and nothing is logged on either the host or the guest.

We checked the syslog on all of the hosts and found no indication of the host stopping any processes. We also checked the syslogs on the guests and found no mention of the kernel OOM killer terminating the processes.
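For anyone repeating the guest-side check, a minimal sketch of the kind of syslog scan described above. It assumes the usual kernel OOM-killer wording ("invoked oom-killer", "Out of memory: Kill process", "Killed process"); the exact text varies between kernel versions, so the pattern list is an assumption, and `find_oom_events`/`scan_file` are hypothetical helper names.

```python
import re
from typing import Iterable, List

# Assumed kernel OOM-killer phrases; wording differs across kernel versions.
OOM_PATTERNS = [
    re.compile(r"invoked oom-killer"),
    re.compile(r"Out of memory: Kill(ed)? process"),
    re.compile(r"Killed process \d+"),
]


def find_oom_events(lines: Iterable[str]) -> List[str]:
    """Return every log line that matches one of the OOM-killer patterns."""
    return [
        line.rstrip("\n")
        for line in lines
        if any(pattern.search(line) for pattern in OOM_PATTERNS)
    ]


def scan_file(path: str = "/var/log/syslog") -> List[str]:
    """Scan one plain-text log file (hypothetical default path).

    Rotated, gzip-compressed logs (syslog.2.gz etc.) are not handled here.
    """
    with open(path, errors="replace") as handle:
        return find_oom_events(handle)
```

Calling `scan_file()` on a guest (or `scan_file("/var/log/kern.log")`) returns the matching lines; an empty list is consistent with what we saw, i.e. no OOM kills recorded.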

Is this perhaps a result of the QEMU updates?

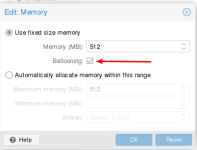

2.) Since the update, all KVM virtual machines have a "balloon" option that was not present before, and it was enabled on every VM. This option does not seem to be documented anywhere, nor does it appear on the roadmap or in the release notes.

Could someone please explain what this new tickbox does?
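To see how each VM is currently set, one option is to read the `balloon` key from the VM configuration. A minimal sketch, assuming the tickbox maps to that key: the `qm` documentation states that `balloon: 0` disables the balloon driver, and we assume that a missing key leaves the device attached. `parse_vm_config` and `balloon_device_enabled` are hypothetical helper names.

```python
from typing import Dict


def parse_vm_config(text: str) -> Dict[str, str]:
    """Parse the top-level 'key: value' lines of a Proxmox VM config.

    Parsing stops at the first '[section]' header, since snapshot sections
    that follow repeat the same keys for older VM states.
    """
    config: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and '#' description lines
        if line.startswith("["):
            break  # start of a snapshot (or other) section
        key, sep, value = line.partition(":")  # split on the first colon only
        if sep:
            config[key.strip()] = value.strip()
    return config


def balloon_device_enabled(config: Dict[str, str]) -> bool:
    """Assumption: only an explicit 'balloon: 0' detaches the balloon device."""
    return config.get("balloon", "").strip() != "0"
```

The input text could come from `/etc/pve/qemu-server/<vmid>.conf` or from the output of `qm config <vmid>`.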

pveversion:

proxmox-ve: 4.4-76 (running kernel: 4.4.35-1-pve)

pve-manager: 4.4-1 (running version: 4.4-1/eb2d6f1e)

pve-kernel-4.4.6-1-pve: 4.4.6-48

pve-kernel-4.4.35-1-pve: 4.4.35-76

pve-kernel-4.2.6-1-pve: 4.2.6-36

lvm2: 2.02.116-pve3

corosync-pve: 2.4.0-1

libqb0: 1.0-1

pve-cluster: 4.0-48

qemu-server: 4.0-101

pve-firmware: 1.1-10

libpve-common-perl: 4.0-83

libpve-access-control: 4.0-19

libpve-storage-perl: 4.0-70

pve-libspice-server1: 0.12.8-1

vncterm: 1.2-1

pve-docs: 4.4-1

pve-qemu-kvm: 2.7.0-9

pve-container: 1.0-88

pve-firewall: 2.0-33

pve-ha-manager: 1.0-38

ksm-control-daemon: 1.2-1

glusterfs-client: 3.5.2-2+deb8u2

lxc-pve: 2.0.6-2

lxcfs: 2.0.5-pve1

criu: 1.6.0-1

novnc-pve: 0.5-8

smartmontools: 6.5+svn4324-1~pve80

zfsutils: 0.6.5.8-pve13~bpo80

Regards,

Joe
