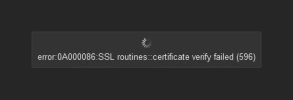

Still, another problem:

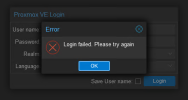

Although I can access the terminal of node 3 and node 4 through the PVE UI of the other nodes, I cannot log in to node 3 or node 4 directly.

Both nodes are now shown as green in the UI of node 1 and node 2, though.

However, the System tab still cannot be displayed ("communication failure (0)").

Maybe another service needs to be restarted?