Hello dear people!

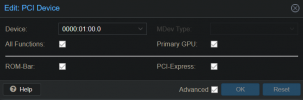

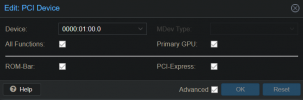

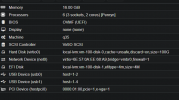

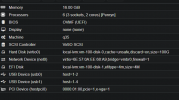

I managed to install macOS Monterey on my fresh Proxmox 7.1 installation.

Everything went fine: I installed macOS through the noVNC console and set everything up. Now the last step is the one that does not work: the GPU passthrough.

The only things I changed in the configuration in the GUI were adding the PCI device and setting the display to none. Before that, I enabled IOMMU. According to every tutorial I saw, that should be enough.

When I start the VM, this error message appears:

Code:

TASK ERROR: start failed: command '/usr/bin/kvm -id 100 -name macos-monterey -no-shutdown -chardev 'socket,id=qmp,path=/var/run/qemu-server/100.qmp,server=on,wait=off' -mon 'chardev=qmp,mode=control' -chardev 'socket,id=qmp-event,path=/var/run/qmeventd.sock,reconnect=5' -mon 'chardev=qmp-event,mode=control' -pidfile /var/run/qemu-server/100.pid -daemonize -smbios 'type=1,uuid=8b91a456-73e1-49b1-95d6-dcb633559c8b' -drive 'if=pflash,unit=0,format=raw,readonly=on,file=/usr/share/pve-edk2-firmware//OVMF_CODE_4M.secboot.fd' -drive 'if=pflash,unit=1,format=raw,id=drive-efidisk0,size=540672,file=/dev/pve/vm-100-disk-1' -smp '6,sockets=3,cores=2,maxcpus=6' -nodefaults -boot 'menu=on,strict=on,reboot-timeout=1000,splash=/usr/share/qemu-server/bootsplash.jpg' -vga none -nographic -cpu 'Penryn,enforce,kvm=off,+kvm_pv_eoi,+kvm_pv_unhalt,vendor=GenuineIntel' -m 16384 -readconfig /usr/share/qemu-server/pve-q35-4.0.cfg -device 'vmgenid,guid=4f0987e3-0d2e-482d-8bee-55a05f9b4ffa' -device 'usb-tablet,id=tablet,bus=ehci.0,port=1' -device 'vfio-pci,host=0000:01:00.0,id=hostpci0.0,bus=ich9-pcie-port-1,addr=0x0.0,multifunction=on' -device 'vfio-pci,host=0000:01:00.1,id=hostpci0.1,bus=ich9-pcie-port-1,addr=0x0.1' -device 'usb-host,hostbus=1,hostport=2,id=usb0' -device 'usb-host,hostbus=1,hostport=4,id=usb1' -device 'virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x3' -iscsi 'initiator-name=iqn.1993-08.org.debian:01:4fdef895416a' -drive 'file=/dev/pve/vm-100-disk-0,if=none,id=drive-virtio0,cache=unsafe,discard=on,format=raw,aio=io_uring,detect-zeroes=unmap' -device 'virtio-blk-pci,drive=drive-virtio0,id=virtio0,bus=pci.0,addr=0xa,bootindex=100' -netdev 'type=tap,id=net0,ifname=tap100i0,script=/var/lib/qemu-server/pve-bridge,downscript=/var/lib/qemu-server/pve-bridgedown,vhost=on' -device 'virtio-net-pci,mac=6E:57:0A:EE:68:A9,netdev=net0,bus=pci.0,addr=0x12,id=net0,bootindex=101' -machine 'type=q35+pve0' -device 'isa-applesmc,osk=ourhardworkbythesewordsguardedpleasedontsteal(c)AppleComputerInc' -smbios 'type=2' -device 'usb-kbd,bus=ehci.0,port=2' -global 'nec-usb-xhci.msi=off' -global 'ICH9-LPC.acpi-pci-hotplug-with-bridge-support=off' -cpu 'host,kvm=on,vendor=GenuineIntel,+kvm_pv_unhalt,+kvm_pv_eoi,+hypervisor,+invtsc'' failed: got timeout

Then the whole PVE installation crashes. The PC keeps running for a while and then restarts; after that, everything works again until I try to start the VM again.

Now I am getting no signal and ROM errors for PCIe.

My PC details are the following:

- Acer Predator Orion PO3-600

- Nvidia GTX 1070

- Intel Core i7-8700

- 16 GB RAM

- 120 GB WD Green SSD (installed for this project)

I am not sure what data you need to help me; I assume these could help:

Code:

root@proxmox:~# for d in /sys/kernel/iommu_groups/*/devices/*; do n=${d#*/iommu_groups/*}; n=${n%%/*}; printf 'IOMMU group %s ' "$n"; lspci -nnks "${d##*/}"; done

IOMMU group 0 00:00.0 Host bridge [0600]: Intel Corporation 8th Gen Core Processor Host Bridge/DRAM Registers [8086:3ec2] (rev 07)

DeviceName: Onboard Realtek Ethernet

Subsystem: Acer Incorporated [ALI] 8th Gen Core Processor Host Bridge/DRAM Registers [1025:1289]

Kernel driver in use: skl_uncore

Kernel modules: ie31200_edac

IOMMU group 10 02:00.0 Ethernet controller [0200]: Realtek Semiconductor Co., Ltd. RTL8111/8168/8411 PCI Express Gigabit Ethernet Controller [10ec:8168] (rev 16)

Subsystem: Acer Incorporated [ALI] RTL8111/8168/8411 PCI Express Gigabit Ethernet Controller [1025:1289]

Kernel driver in use: r8169

Kernel modules: r8169

IOMMU group 1 00:01.0 PCI bridge [0604]: Intel Corporation 6th-10th Gen Core Processor PCIe Controller (x16) [8086:1901] (rev 07)

Kernel driver in use: pcieport

IOMMU group 1 01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GP104 [GeForce GTX 1070] [10de:1b81] (rev a1)

Subsystem: PC Partner Limited / Sapphire Technology GP104 [GeForce GTX 1070] [174b:1071]

Kernel driver in use: nouveau

Kernel modules: nvidiafb, nouveau

IOMMU group 1 01:00.1 Audio device [0403]: NVIDIA Corporation GP104 High Definition Audio Controller [10de:10f0] (rev a1)

Subsystem: PC Partner Limited / Sapphire Technology GP104 High Definition Audio Controller [174b:1071]

Kernel driver in use: snd_hda_intel

Kernel modules: snd_hda_intel

IOMMU group 2 00:08.0 System peripheral [0880]: Intel Corporation Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th Gen Core Processor Gaussian Mixture Model [8086:1911]

Subsystem: Acer Incorporated [ALI] Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th Gen Core Processor Gaussian Mixture Model [1025:1289]

IOMMU group 3 00:12.0 Signal processing controller [1180]: Intel Corporation Cannon Lake PCH Thermal Controller [8086:a379] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH Thermal Controller [1025:1289]

Kernel driver in use: intel_pch_thermal

Kernel modules: intel_pch_thermal

IOMMU group 4 00:14.0 USB controller [0c03]: Intel Corporation Cannon Lake PCH USB 3.1 xHCI Host Controller [8086:a36d] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH USB 3.1 xHCI Host Controller [1025:1289]

Kernel driver in use: xhci_hcd

Kernel modules: xhci_pci

IOMMU group 4 00:14.2 RAM memory [0500]: Intel Corporation Cannon Lake PCH Shared SRAM [8086:a36f] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH Shared SRAM [1025:1289]

IOMMU group 5 00:14.3 Network controller [0280]: Intel Corporation Wireless-AC 9560 [Jefferson Peak] [8086:a370] (rev 10)

Subsystem: Intel Corporation Wireless-AC 9560 [Jefferson Peak] [8086:02a4]

Kernel driver in use: iwlwifi

Kernel modules: iwlwifi

IOMMU group 6 00:16.0 Communication controller [0780]: Intel Corporation Cannon Lake PCH HECI Controller [8086:a360] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH HECI Controller [1025:1289]

Kernel driver in use: mei_me

Kernel modules: mei_me

IOMMU group 7 00:17.0 RAID bus controller [0104]: Intel Corporation SATA Controller [RAID mode] [8086:2822] (rev 10)

DeviceName: Onboard Intel SATA Controller

Subsystem: Acer Incorporated [ALI] SATA Controller [RAID mode] [1025:1289]

Kernel driver in use: ahci

Kernel modules: ahci

IOMMU group 8 00:1c.0 PCI bridge [0604]: Intel Corporation Cannon Lake PCH PCI Express Root Port #6 [8086:a33d] (rev f0)

Kernel driver in use: pcieport

IOMMU group 9 00:1f.0 ISA bridge [0601]: Intel Corporation Device [8086:a308] (rev 10)

Subsystem: Acer Incorporated [ALI] Device [1025:1289]

IOMMU group 9 00:1f.3 Audio device [0403]: Intel Corporation Cannon Lake PCH cAVS [8086:a348] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH cAVS [1025:1289]

Kernel driver in use: snd_hda_intel

Kernel modules: snd_hda_intel, snd_sof_pci_intel_cnl

IOMMU group 9 00:1f.4 SMBus [0c05]: Intel Corporation Cannon Lake PCH SMBus Controller [8086:a323] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH SMBus Controller [1025:1289]

Kernel driver in use: i801_smbus

Kernel modules: i2c_i801

IOMMU group 9 00:1f.5 Serial bus controller [0c80]: Intel Corporation Cannon Lake PCH SPI Controller [8086:a324] (rev 10)

Subsystem: Acer Incorporated [ALI] Cannon Lake PCH SPI Controller [1025:1289]
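The listing above is long, so here is a minimal sketch for pulling out just one IOMMU group and the driver each of its devices is bound to. The function name `group_drivers` is made up for illustration, and it assumes the exact line format produced by the loop above ("IOMMU group N address ..." followed by "Kernel driver in use: ..." lines).

```shell
# group_drivers GROUP
# Reads the output of the IOMMU loop on stdin and prints "<pci-address> <driver>"
# for every device in GROUP. Devices without a bound driver are printed with "-".
# (group_drivers is a hypothetical helper name, not a Proxmox tool.)
group_drivers() {
    awk -v want="$1" '
        # Print the device collected so far, if it belongs to the wanted group.
        function emit() { if (addr != "") print addr, (drv == "" ? "-" : drv) }
        $1 == "IOMMU" && $2 == "group" {
            emit()
            addr = ($3 == want) ? $4 : ""
            drv = ""
            next
        }
        /Kernel driver in use:/ { drv = $NF }
        END { emit() }
    '
}

# Usage, assuming the listing was saved to a file called iommu.txt:
#   group_drivers 1 < iommu.txt
```

Fed with the listing above, group 1 comes out as `00:01.0 pcieport`, `01:00.0 nouveau` and `01:00.1 snd_hda_intel`, so at the time of the listing both functions of the GTX 1070 were bound to host drivers rather than to vfio-pci. Whether that is related to the crash is not something the listing alone can tell.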

Code:

BOOT_IMAGE=/boot/vmlinuz-5.13.19-2-pve root=/dev/mapper/pve-root ro quiet intel_iommu=on
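As a quick cross-check of the line above, a small sketch that tests whether a kernel command line carries the `intel_iommu=on` flag. The helper name `has_iommu_flag` is hypothetical; on a running host the string would normally be read from `/proc/cmdline`. It only confirms the flag was passed, not that the IOMMU actually initialised.

```shell
# has_iommu_flag CMDLINE
# Succeeds (exit status 0) if CMDLINE contains intel_iommu=on as a separate,
# space-delimited word. (has_iommu_flag is a hypothetical helper name.)
has_iommu_flag() {
    case " $1 " in
        *" intel_iommu=on "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage on a live host:
#   has_iommu_flag "$(cat /proc/cmdline)" && echo "intel_iommu=on is set"
```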

Code:

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on"

GRUB_CMDLINE_LINUX=""

Code:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd
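A rough sketch for checking that all four module names above are present in a module list file. It assumes the list lives in a plain text file with one module per line (commonly `/etc/modules`, although the post does not say which file this is), and `missing_vfio_modules` is a made-up helper name.

```shell
# missing_vfio_modules FILE
# Prints each of the four module names listed above that does not appear
# as a complete line in FILE. Prints nothing if all four are present.
# (missing_vfio_modules is a hypothetical helper name.)
missing_vfio_modules() {
    for mod in vfio vfio_iommu_type1 vfio_pci vfio_virqfd; do
        # -x: match whole lines only, -F: treat the name as a fixed string
        grep -qxF "$mod" "$1" || echo "$mod"
    done
}

# Usage, assuming the list is in /etc/modules:
#   missing_vfio_modules /etc/modules
```

Lines with trailing whitespace or comments would count as missing here, since only exact whole-line matches are accepted.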

Code:

/sys/kernel/iommu_groups/7/devices/0000:00:17.0

/sys/kernel/iommu_groups/5/devices/0000:00:14.3

/sys/kernel/iommu_groups/3/devices/0000:00:12.0

/sys/kernel/iommu_groups/1/devices/0000:00:01.0

/sys/kernel/iommu_groups/1/devices/0000:01:00.0

/sys/kernel/iommu_groups/1/devices/0000:01:00.1

/sys/kernel/iommu_groups/8/devices/0000:00:1c.0

/sys/kernel/iommu_groups/6/devices/0000:00:16.0

/sys/kernel/iommu_groups/4/devices/0000:00:14.2

/sys/kernel/iommu_groups/4/devices/0000:00:14.0

/sys/kernel/iommu_groups/2/devices/0000:00:08.0

/sys/kernel/iommu_groups/10/devices/0000:02:00.0

/sys/kernel/iommu_groups/0/devices/0000:00:00.0

/sys/kernel/iommu_groups/9/devices/0000:00:1f.0

/sys/kernel/iommu_groups/9/devices/0000:00:1f.5

/sys/kernel/iommu_groups/9/devices/0000:00:1f.3

/sys/kernel/iommu_groups/9/devices/0000:00:1f.4

A thought of mine: I know macOS struggles with Nvidia cards, but at least the Proxmox logo and the OpenCore bootloader should appear, am I right?

As I am new to Proxmox, please forgive any mistakes. Hit me up if you need any more data to help me. I don't want to give up on this exciting piece of software this early.

Thank you very much in advance!!
