So, I have my solution and will share it for anyone else running into the same roadblock.

I have dual GPUs in this system, so the files reflect that, but I can confirm the solution works for both of them, so a single-GPU system should be able to employ this workaround.

Some of the details of my system setup may not matter (e.g. the kernel version). I did not fully re-test on the stock kernel after finding success. If you end up changing your kernel to 6.0.9-edge, be sure to also install the kernel headers and to build the vendor-reset module for the new kernel (DKMS). If you are unsure whether the module is available for your running kernel, run 'uname -a' and then 'dkms status' and confirm that vendor-reset is listed as installed for that kernel (see my 'dkms status' output below, which shows the module installed for all of my kernels).
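If you want to script that check, something along these lines could work. It is only a sketch: the function name `dkms_module_installed` is made up for this example, and it reads `dkms status` text on stdin so it can be tried without touching the system. Keep in mind that `dkms status` tells you the module is built and installed for a kernel, while `lsmod` is what shows whether it is loaded right now.

```shell
#!/bin/bash
# Hypothetical helper: succeed if `dkms status` output (on stdin) lists
# MODULE as installed for KERNEL.
dkms_module_installed() {
    local module="$1" kernel="$2"
    # Lines look like: vendor-reset, 0.1.1, 6.0.9-edge, x86_64: installed
    awk -F', *' -v m="$module" -v k="$kernel" '
        index($1, m) == 1 && /installed/ {
            for (i = 2; i <= NF; i++) if ($i == k) found = 1
        }
        END { exit !found }
    '
}

# Example use on the host:
#   dkms status | dkms_module_installed vendor-reset "$(uname -r)" && echo "built for this kernel"
#   lsmod | grep -q '^vendor_reset' && echo "currently loaded"
```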

SUMMARY

I installed and set everything up in various ways after scouring this and other forums. I was finally able to get GPU passthrough working for a Windows 11 VM and a MacOS VM. My fun was stopped when I realized vendor-reset was not working for my GPUs.

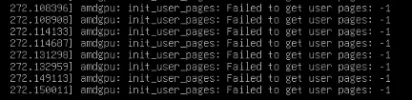

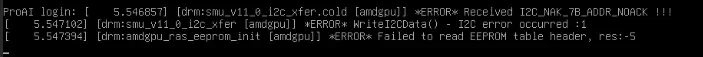

The VM would run perfectly after a fresh host boot and a single VM run, but if the VM was stopped and then re-started, GPU passthrough was broken because the GPU refused to reset its power state. 'dmesg -w' would reveal multiple errors stating that the reset failed ('reset result = 0') and that the GPU 'refused to change power state from D0 to D3Hot'.
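A quick way to spot this symptom without watching the log scroll by is to grep for it. This is a minimal sketch; `reset_failed` is a name invented here, and the match is case-insensitive because the exact capitalisation of 'D3Hot' in the kernel message may differ from what I typed above.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the kernel log text on stdin contains
# the failed power-state transition message quoted above.
reset_failed() {
    grep -qi 'refused to change power state from D0 to D3hot'
}

# Example use on the host:
#   dmesg | reset_failed && echo "GPU did not reset cleanly, run gpu-reset"
```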

The system is still using the 'vendor-reset' kernel module and setting the 'device_specific' reset method needed for newer kernels. I don't know if this is actually key to things working with the manual reset script. I haven't tried removing it to test and I likely will not bother for now.

The manual reset solution was presented elsewhere, but the exact script I saw did not work for me; the order of the commands had to be modified. My version does not require pressing the power button to wake the system, it comes back automatically. This isn't an ideal solution, but I believe it's the only one, due to an actual hardware/firmware flaw in the Radeon VII. The good news is that this reset works great! I can even employ it while a separate TrueNAS VM is active and running, and it doesn't seem to crash it or cause any problems. However, it will affect access to that VM for a few seconds, so don't run 'gpu-reset' if anything important is currently being accessed on another running VM. Network connectivity and disk activity are halted for 8-12 seconds, so data loss can occur if you aren't careful about other running VMs.

Small bonus: I have openRGB installed on this server (a dual gpu, fully water cooled beast, retired from ETH mining) and use the openRGB server/client feature to control the lighting. This gpu-reset process does not reset the RGB lighting on the system! I know, it's a little corny but the PC is very visible and quite good to look at and that default rainbow cycling makes me nauseous lol.

I hope this helps someone else out there! The Radeon VIIs have a special place in my heart, as they really cranked out the Ethereum hash rates for me, and since these have water blocks, changing GPUs just to play with VMs isn't an option.

Neofetch output:

OS: Proxmox VE 7.4-3 x86_64

Kernel: 6.0.9-edge

Uptime: 20 hours, 58 mins

Packages: 1040 (dpkg)

Shell: bash 5.1.4

Terminal: /dev/pts/0

CPU: AMD Ryzen 7 3800XT (16) @ 3.900GHz

GPU: AMD ATI Radeon VII

GPU: AMD ATI Radeon VII

Memory: 13671MiB / 64211MiB

Motherboard: Asus Crosshair VII Hero (bios 5003) (x470 platform/chipset)

Important MB settings:

Advanced\CPU:

NX Mode - Enabled

SVM Mode - Enabled

Advanced\PCI Subsystem Settings:

Above 4G Decoding - Enabled *

Resize Bar Support - Disabled *

SR-IOV Support - Enabled *

Advanced\AMD CBS\NBIO Common Options:

IOMMU - Enabled

ACS Enable - Enable

PCIe ARI Support - Enable *

PCIe ARI Enumeration - Enable *

PCIe Ten Bit Tag Support - Enable *

* This setting may or may not be critical but was at this setting with a fully functioning system
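Once IOMMU is enabled in the BIOS, it is worth checking how the devices ended up grouped, since passthrough generally wants each GPU (with its audio function) in a group that can be handed over as a whole. A sketch of the usual sysfs walk follows; `list_iommu_groups` and the overridable `IOMMU_ROOT` variable are names chosen for this example only.

```shell
#!/bin/bash
# Root of the IOMMU group tree; overridable so the function can be tried
# against a scratch directory (assumption made for this example).
IOMMU_ROOT="${IOMMU_ROOT:-/sys/kernel/iommu_groups}"

# Print one "group N: PCI_ADDRESS" line per device.
list_iommu_groups() {
    local path group
    for path in "$IOMMU_ROOT"/*/devices/*; do
        [ -e "$path" ] || continue          # no groups: IOMMU is probably off
        group="${path#"$IOMMU_ROOT"/}"      # strip the root prefix
        group="${group%%/*}"                # keep only the group number
        echo "group $group: ${path##*/}"
    done
}

# Example use on the host:
#   list_iommu_groups | grep -E '0000:(0c|0f):00'
```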

Important PVE files and settings:

root@bde-waterserver:~# cat /etc/default/grub

# If you change this file, run 'update-grub' afterwards to update

# /boot/grub/grub.cfg.

# For full documentation of the options in this file, see:

# info -f grub -n 'Simple configuration'

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`

GRUB_CMDLINE_LINUX_DEFAULT="amd_iommu=on iommu=pt"

GRUB_CMDLINE_LINUX=""

# Uncomment to enable BadRAM filtering, modify to suit your needs

# This works with Linux (no patch required) and with any kernel that obtains

# the memory map information from GRUB (GNU Mach, kernel of FreeBSD ...)

#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"

# Uncomment to disable graphical terminal (grub-pc only)

#GRUB_TERMINAL=console

# The resolution used on graphical terminal

# note that you can use only modes which your graphic card supports via VBE

# you can see them in real GRUB with the command `vbeinfo'

#GRUB_GFXMODE=640x480

# Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux

#GRUB_DISABLE_LINUX_UUID=true

# Uncomment to disable generation of recovery mode menu entries

#GRUB_DISABLE_RECOVERY="true"

# Uncomment to get a beep at grub start

#GRUB_INIT_TUNE="480 440 1"
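After running 'update-grub' and rebooting, you can confirm the parameters actually reached the kernel. The passthrough parameter is conventionally spelled `iommu=pt`, and a typo there is silently ignored, so an exact-match check is useful. This is a sketch; `cmdline_has` is a made-up helper name.

```shell
#!/bin/bash
# Hypothetical helper: succeed if PARAM appears as a whole word on the
# kernel command line. The second argument is optional and defaults to
# the running kernel's /proc/cmdline.
cmdline_has() {
    local want="$1" text="${2:-$(cat /proc/cmdline)}"
    case " $text " in
        *" $want "*) return 0 ;;
        *)           return 1 ;;
    esac
}

# Example use on the host:
#   cmdline_has amd_iommu=on && cmdline_has iommu=pt && echo "IOMMU parameters active"
```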

root@bde-waterserver:/etc/modprobe.d# cat blacklist.conf

blacklist amdgpu

blacklist radeon

blacklist nouveau

blacklist nvidia

root@bde-waterserver:/etc/modprobe.d# cat dkms.conf

# modprobe information used for DKMS modules

#

# This is a stub file, should be edited when needed,

# used by default by DKMS.

root@bde-waterserver:/etc/modprobe.d# cat iommu_unsafe_interrupts.conf

options vfio_iommu_type1 allow_unsafe_interrupts=1

root@bde-waterserver:/etc/modprobe.d# cat kvm.conf

options kvm ignore_msrs=1

root@bde-waterserver:/etc/modprobe.d# cat pve-blacklist.conf

# This file contains a list of modules which are not supported by Proxmox VE

# nidiafb see bugreport https://bugzilla.proxmox.com/show_bug.cgi?id=701

blacklist nvidiafb

root@bde-waterserver:/etc/modprobe.d# cat snd-hda-intel.conf

root@bde-waterserver:/etc/modprobe.d# cat vfio.conf

options vfio-pci ids=1002:66af,1002:ab20 disable_vga=1

softdep amdgpu pre: vfio vfio_pci
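To confirm the blacklist and vfio.conf did their job, check which driver each GPU function is bound to after boot; it should be vfio-pci rather than amdgpu. A minimal sketch, with `pci_driver` and the overridable `SYSFS_PCI` variable being names I picked for this example:

```shell
#!/bin/bash
# PCI sysfs root; overridable for dry runs (assumption made for this example).
SYSFS_PCI="${SYSFS_PCI:-/sys/bus/pci}"

# Print the name of the driver bound to a PCI address, or "none".
pci_driver() {
    local link="$SYSFS_PCI/devices/$1/driver"
    if [ -L "$link" ]; then
        basename "$(readlink "$link")"
    else
        echo none
    fi
}

# Example use on the host (addresses are the ones from my system):
#   for dev in 0000:0f:00.0 0000:0f:00.1 0000:0c:00.0 0000:0c:00.1; do
#       echo "$dev -> $(pci_driver "$dev")"
#   done
```

'lspci -nnk -s 0f:00' shows the same information on its "Kernel driver in use" line.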

**Vendor-reset installed using this guide: https://www.nicksherlock.com/2020/11/working-around-the-amd-gpu-reset-bug-on-proxmox/

root@bde-waterserver:/etc/modprobe.d# dkms status

vendor-reset, 0.1.1, 5.13.9-1-edge, x86_64: installed

vendor-reset, 0.1.1, 5.15.104-1-pve, x86_64: installed

vendor-reset, 0.1.1, 6.0.9-edge, x86_64: installed

**A systemd service makes sure the vendor-reset module is loaded and echoes 'device_specific' into each GPU's 'reset_method' file. Create the file at /etc/systemd/system/vendor-specific.service, then run 'systemctl enable vendor-specific.service' and 'systemctl start vendor-specific.service'.

root@bde-waterserver:/etc/systemd/system# cat vendor-specific.service

[Unit]

Description=Set the AMD GPU reset method to 'device_specific'

After=multi-user.target

[Service]

ExecStart=/usr/bin/bash -c '/usr/sbin/modprobe vendor-reset && /usr/bin/echo device_specific > /sys/bus/pci/devices/0000:0f:00.0/reset_method && /usr/bin/echo device_specific > /sys/bus/pci/devices/0000:0c:00.0/reset_method'

[Install]

WantedBy=multi-user.target
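If the one-line ExecStart gets unwieldy (for example with more than two GPUs), the same writes can be done in a loop. This is a sketch, not what I actually run: `set_reset_method` is an invented name, `SYSFS_PCI` is only overridable so the function can be tried against a scratch directory, and the PCI addresses are from my system.

```shell
#!/bin/bash
# PCI sysfs root; overridable for dry runs (assumption made for this example).
SYSFS_PCI="${SYSFS_PCI:-/sys/bus/pci}"

# Usage: set_reset_method METHOD PCI_ADDRESS...
# Writes METHOD into each device's reset_method file, failing on the
# first device that has no writable reset_method.
set_reset_method() {
    local method="$1" dev file
    shift
    for dev in "$@"; do
        file="$SYSFS_PCI/devices/$dev/reset_method"
        if [ -w "$file" ]; then
            echo "$method" > "$file"
        else
            echo "no writable reset_method for $dev" >&2
            return 1
        fi
    done
}

# Example use on the host, as root:
#   modprobe vendor-reset && set_reset_method device_specific 0000:0f:00.0 0000:0c:00.0
#   cat /sys/bus/pci/devices/0000:0f:00.0/reset_method   # read back to verify
```

For a run-once command like this, 'Type=oneshot' in the [Service] section is the usual systemd choice, though the unit above works for me without it.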

**Create a bash script to manually reset the GPU(s) after the VM is shut down. IMPORTANT: the VM seems to require a 'Hard Stop' command from the console to fully unload from the host. I may look for a way to automate this later. Shutting down from within the VM or issuing a 'Shutdown' command is not sufficient for the reset to work. I usually shut down within the VM and then 'Hard Stop' from the PVE console.
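One possible way to automate the shutdown-then-stop sequence from the host shell is with the 'qm' CLI. This is an untested sketch: `stop_vm_fully` is a made-up name, the `QM` variable exists only so the command can be swapped out for a dry run, and I am assuming that 'qm stop' has the same effect as the console's hard stop for the purposes of this reset.

```shell
#!/bin/bash
# Command used to talk to Proxmox; overridable for dry runs (assumption).
QM="${QM:-qm}"

# Usage: stop_vm_fully VMID [TIMEOUT_SECONDS]
# Ask the guest to shut down cleanly, then issue a stop so the VM
# process is fully torn down on the host.
stop_vm_fully() {
    local vmid="$1" timeout="${2:-60}"
    # A failed or timed-out clean shutdown is not fatal; the stop follows anyway.
    "$QM" shutdown "$vmid" --timeout "$timeout" || true
    "$QM" stop "$vmid"
}

# Example use on the host (103 is my Windows 11 VM):
#   stop_vm_fully 103 90 && gpu-reset
```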

**Place the script at /usr/local/bin/gpu-reset and make it executable ('chmod +x /usr/local/bin/gpu-reset'). After the VM is shut down correctly, enter the command 'gpu-reset'. The script pauses briefly between commands just for safety/caution. The sequence is: a system wake-up timer is set for 8 seconds; the system is sent into a suspend state; after wake-up the GPU(s) are removed from the PCI bus (I believe this also unbinds them from the driver); the PCI bus is rescanned and the GPUs are added back. At that point a power-state reset has occurred.

root@bde-waterserver:~# cat /usr/local/bin/gpu-reset

#!/bin/bash

# Set a wake timer of 8 seconds, suspend the system, then after wake-up remove GPUs 0f:00 and 0c:00 from the PCI bus and rescan so they are re-added

sleep 2

rtcwake -m no -s 8 && systemctl suspend

sleep 3

echo 1 > /sys/bus/pci/devices/0000:0f:00.0/remove

echo 1 > /sys/bus/pci/devices/0000:0f:00.1/remove

echo 1 > /sys/bus/pci/devices/0000:0c:00.0/remove

echo 1 > /sys/bus/pci/devices/0000:0c:00.1/remove

sleep 3

echo 1 > /sys/bus/pci/rescan
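For a different number of GPUs, the same sequence can be written with the device list passed as arguments. This is a sketch of a variant, not the script I run: `remove_and_rescan`, `gpu_reset`, `SYSFS_PCI` and `RESCAN_DELAY` are names invented here, and the two overridable variables exist only so the remove/rescan part can be tried against a scratch directory. Nothing runs until you call `gpu_reset` yourself.

```shell
#!/bin/bash
# PCI sysfs root; overridable for dry runs (assumption made for this example).
SYSFS_PCI="${SYSFS_PCI:-/sys/bus/pci}"

# Usage: remove_and_rescan PCI_ADDRESS...
# Removes each listed function from the bus, waits, then rescans.
remove_and_rescan() {
    local dev
    for dev in "$@"; do
        if [ -e "$SYSFS_PCI/devices/$dev/remove" ]; then
            echo 1 > "$SYSFS_PCI/devices/$dev/remove"
        else
            echo "skipping $dev: not present" >&2
        fi
    done
    sleep "${RESCAN_DELAY:-3}"
    echo 1 > "$SYSFS_PCI/rescan"
}

# Same order as my script: arm the wake timer, suspend, then remove and rescan.
gpu_reset() {
    sleep 2
    rtcwake -m no -s 8 && systemctl suspend
    sleep 3
    remove_and_rescan "$@"
}

# Example use on the host, as root (addresses are from my system):
#   gpu_reset 0000:0f:00.0 0000:0f:00.1 0000:0c:00.0 0000:0c:00.1
```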

**The GPUs are attached to the VMs as a PCI Device, with different settings for W11 and MacOS. This may work with different settings than I have here, but having both 'All Functions' and 'PCI-Express' set to on seemed to be mandatory for me. The W11 machine has 'Primary GPU' on but 'ROM-Bar' off. The MacOS machine has 'ROM-Bar' on but 'Primary GPU' off.
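For anyone setting this from the shell instead of the GUI, here is how those checkboxes appear to map onto the 'hostpci0' value in the configs below: 'All Functions' is the address without a function suffix (0000:0f:00 rather than 0000:0f:00.0), 'PCI-Express' is pcie=1, 'ROM-Bar' off is rombar=0, and 'Primary GPU' is x-vga=1. The helper `hostpci_value` is a made-up name and this is only a sketch of that mapping; it leaves out the romfile option my W11 VM also uses.

```shell
#!/bin/bash
# Usage: hostpci_value PCI_ADDRESS PRIMARY_GPU(0|1) ROM_BAR(0|1)
# Prints a hostpci option string with PCI-Express always on.
hostpci_value() {
    local value="$1,pcie=1"
    if [ "$3" = 0 ]; then
        value="$value,rombar=0"      # ROM-Bar is on by default, so only "off" is written
    fi
    if [ "$2" = 1 ]; then
        value="$value,x-vga=1"       # Primary GPU
    fi
    echo "$value"
}

# Example use on the host:
#   qm set 103 --hostpci0 "$(hostpci_value 0000:0f:00 1 0)"   # W11-style settings
#   qm set 104 --hostpci0 "$(hostpci_value 0000:0f:00 0 1)"   # MacOS-style settings
```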

Windows 11 VM:

root@bde-waterserver:/etc/modprobe.d# cat /etc/pve/qemu-server/103.conf

agent: 1

args: -cpu 'host,+kvm_pv_unhalt,+kvm_pv_eoi,hv_vendor_id=proxmox,hv_spinlocks=0x1fff,hv_vapic,hv_time,hv_reset,hv_vpindex,hv_runtime,hv_relaxed,hv_synic,hv_stimer,hv_tlbflush,hv_ipi,kvm=off'

balloon: 0

bios: ovmf

boot: order=virtio0

cores: 8

cpu: host

efidisk0: local-lvm:vm-103-disk-0,efitype=4m,pre-enrolled-keys=1,size=4M

hostpci0: 0000:0f:00,pcie=1,rombar=0,romfile=R7-105-UEFI.rom,x-vga=1

ide0: ext4-storage:iso/virtio-win-0.1.229.iso,media=cdrom,size=522284K

machine: pc-q35-7.2

memory: 8192

meta: creation-qemu=7.2.0,ctime=1681232752

name: Win11-02

net0: e1000=0A:AE:B6

E:65:0C,bridge=vmbr0,firewall=1

numa: 0

ostype: win11

scsihw: virtio-scsi-single

smbios1: uuid=ba8e2087-da2b-4fa7-bb75-a29096423deb

sockets: 1

tpmstate0: local-lvm:vm-103-disk-1,size=4M,version=v2.0

usb0: host=1b1c:1b35

usb1: host=320f:5000

vga: none

virtio0: local-lvm:vm-103-disk-2,discard=on,iothread=1,size=80G

vmgenid: 78701035-a047-4d35-83f7-65152d3b3679

Mac OS Monterey VM:

root@bde-waterserver:/etc/modprobe.d# cat /etc/pve/qemu-server/104.conf

agent: 1

args: -device isa-applesmc,osk="ourhardworkbythesewordsguardedpleasedontsteal(c)AppleComputerInc" -smbios type=2 -device usb-kbd,bus=ehci.0,port=2 -global nec-usb-xhci.msi=off -global ICH9-LPC.acpi-pci-hotplug-with-bridge-support=off -cpu Penryn,vendor=GenuineIntel,+invtsc,+hypervisor,kvm=on,vmware-cpuid-freq=on

balloon: 0

bios: ovmf

boot: order=virtio0;net0

cores: 8

cpu: Penryn

efidisk0: local-lvm:vm-104-disk-0,efitype=4m,size=4M

hostpci0: 0000:0f:00,pcie=1

machine: q35

memory: 8192

meta: creation-qemu=7.2.0,ctime=1681331224

name: MacOSMonterey

net0: vmxnet3=0A:87:67:5F:A1:29,bridge=vmbr0,firewall=1

numa: 0

ostype: other

scsihw: virtio-scsi-pci

smbios1: uuid=ad50f489-e055-47f5-9fcb-ce7ee62bfc15

sockets: 1

usb0: host=1b1c:1b35,usb3=1

usb1: host=320f:5000,usb3=1

vga: none

virtio0: local-lvm:vm-104-disk-1,cache=unsafe,discard=on,size=64G

vmgenid: 59a24833-92e8-4e30-bc9f-4199f90c113d

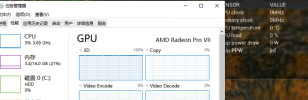
