Hi All,

I have finally tracked down the root cause of the long-running, persistent nightly reboots: it seems that my GPU pass-through is the culprit.

My server worked fine with GPU pass-through for months; however, I think the issue started to appear after upgrading to 7.x.

I have a 1660S passed through to a VM, where it is used by Docker containers. The pass-through itself works perfectly (apart from the reboots); however, after disabling it and changing the settings back to stock, the server has stayed up for two days (woah) without issue.

One odd thing is that the server still crashes/reboots even when the GPU is not in use by the VM (i.e. when the VM is not booted).

As far as I am aware, there are no error messages in syslog or dmesg.

My config is as follows (I have tried every combination in between):

Hardware:

- CPU: Intel Core i7-7700K
- Motherboard: ASRock Fatal1ty Z270 Gaming K6
- GPU: MSI NVIDIA GeForce GTX 1660 SUPER

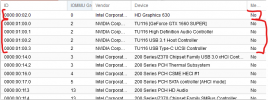

lspci -s 01:00

```
01:00.0 VGA compatible controller: NVIDIA Corporation TU116 [GeForce GTX 1660 SUPER] (rev a1)
01:00.1 Audio device: NVIDIA Corporation TU116 High Definition Audio Controller (rev a1)
01:00.2 USB controller: NVIDIA Corporation TU116 USB 3.1 Host Controller (rev a1)
01:00.3 Serial bus controller [0c80]: NVIDIA Corporation TU116 USB Type-C UCSI Controller (rev a1)
```

Vendor IDs

```
10de:21c4,10de:1aeb,10de:1aec,10de:1aed
```
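For reference, the comma-separated list above is just the vendor:device field of each 01:00.x function as printed by `lspci -n`. A minimal sketch of that derivation, assuming the output format `<slot> <class>: <vendor>:<device> (rev xx)`; `SAMPLE`, `vendor_device_ids` and `vfio_options_line` are hypothetical names for illustration, and the sample text is copied from the numeric listing in this post.

```python
from __future__ import annotations

# Hypothetical sample: numeric `lspci -n` lines for the 01:00.x functions,
# copied from this post. Assumed format: "<slot> <class>: <vendor>:<device> (rev xx)".
SAMPLE = """\
01:00.0 0300: 10de:21c4 (rev a1)
01:00.1 0403: 10de:1aeb (rev a1)
01:00.2 0c03: 10de:1aec (rev a1)
01:00.3 0c80: 10de:1aed (rev a1)
"""


def vendor_device_ids(lspci_n_output: str) -> list[str]:
    """Return the vendor:device pair (third whitespace-separated field) of each line."""
    ids = []
    for line in lspci_n_output.splitlines():
        fields = line.split()
        if len(fields) >= 3:  # skip blank or truncated lines
            ids.append(fields[2])
    return ids


def vfio_options_line(ids: list[str]) -> str:
    """Build a modprobe.d 'options vfio-pci ids=...' line from the given IDs."""
    return "options vfio-pci ids=" + ",".join(ids)


if __name__ == "__main__":
    ids = vendor_device_ids(SAMPLE)
    print(",".join(ids))  # 10de:21c4,10de:1aeb,10de:1aec,10de:1aed
    print(vfio_options_line(ids))
```

On the host, the same parsing could be applied to the real output of `lspci -n -s 01:00`. The sketch only reproduces the four GPU IDs; the vfio.conf in this post additionally lists 1b4b:9230 and 1b21:2142 and sets `disable_vga=1`, which it does not add.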

```
01:00.0 0300: 10de:21c4 (rev a1)
01:00.1 0403: 10de:1aeb (rev a1)
01:00.2 0c03: 10de:1aec (rev a1)
01:00.3 0c80: 10de:1aed (rev a1)
```

/etc/default/grub (I have also tried minimal configs here)

```
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt video=efifb:off video=vesa:off"
```

/etc/modprobe.d/vfio.conf

```
options vfio-pci ids=1b4b:9230,1b21:2142,10de:21c4,10de:1aeb,10de:1aec,10de:1aed disable_vga=1
```

/etc/modprobe.d/pve-blacklist.conf

```
blacklist nvidiafb
blacklist radeon
blacklist nouveau
blacklist nvidia
blacklist i2c_nvidia_gpu
```

Any help or advice would be hugely appreciated.
