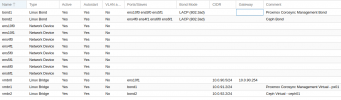

I'm reading up on using Proxmox and Ceph in an HA cluster. One thing I'm getting confused about is the different networks: their bandwidth requirements, the traffic that runs through them, and which are nice to have separated versus usually combined on one NIC. Most manuals and threads I find talk about the Proxmox and Ceph networks separately, but I'm having a tough time understanding what their purposes are in an integrated environment and where they overlap. I'm thinking of setting up a 3-node Proxmox + Ceph HA cluster.

As far as I understand:

Ceph needs:

Heartbeat - Is this the same as Corosync, or is it different? Should it be separated?

Private - Used for Ceph OSD syncing. Proxmox VMs don't have access to this? Preferably 10GbE.

Public - Where Proxmox VMs access their local storage. Is this used at all if the VMs are on the same servers as the OSDs? Is this also the LAN? Is this also where the Proxmox UI is? How much bandwidth does this need?
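To make the "Private" and "Public" terms concrete: if I read the Ceph docs correctly, they correspond to the `cluster_network` and `public_network` settings in `ceph.conf` (on Proxmox I believe this file lives at `/etc/pve/ceph.conf`). Below is a rough sketch of what I think that looks like. The subnets are made up for illustration and are not from any real setup.

```ini
[global]
# Hypothetical subnets, for illustration only.

# "Public": Ceph clients and monitors talk to the OSDs over this network.
public_network = 10.10.10.0/24

# "Private": OSD-to-OSD replication and recovery traffic.
cluster_network = 10.10.20.0/24
```

As far as I understand, if `cluster_network` is left out, Ceph sends that traffic over the public network as well, so the split would be optional.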

Proxmox Cluster:

Corosync: Low latency, low bandwidth, preferably redundant.

LAN with VLANs: "Normal" LAN with VLANs to separate certain VMs. Is this the same network the Proxmox VMs use to talk to each other? High bandwidth?

Proxmox UI: Probably on the LAN with VLANs, on the management VLAN?

So would this mean 5-6 NICs in the ideal scenario, and doubled for redundancy? Seems like a bit much.

Sorry if this sounds a bit confusing, but I'm getting confused by all the similar but different terms. When I read the Ceph documentation, I'm left wondering how it integrates with Proxmox, and vice versa.
