Garbage Collection Warning

- Thread starter Jarvar



Hello,


the error means that one or more parts of your backup snapshot are missing. The most likely causes:

- User/Admin error: files in the underlying chunk store (/path/to/datastore/.chunks) have been accidentally deleted
- Filesystem corruption/hardware failure
- A verify job will append the .bad suffix to a chunk if its checksum is not valid

Where is your store located? Local disk, RAID, network share?

Are there any failed verification jobs in the task log?

Can you find any .bad files in the datastore?

find /path/to/store/.chunks -name "*.bad"

@l.wagner Thank you for getting back to me. The datastore is located on an external USB drive with ZFS RAID0. I was originally syncing it from an old PBS; in this particular case, however, I copied the datastore from the old PBS with rsync -azHxP instead, since I had been running into errors previously.

Maybe this was the cause of the issue?

I've run find /path/to/store/.chunks -name "*.bad", but it hasn't shown anything yet. It does have a lot of files to go through, though.


Hi again,

if find cannot find any *.bad files, the chunks appear to be missing completely. I assume that something must have gone wrong when you synced the old datastore. Is the old datastore still available to you?

Hello, yes it is. I still have the old datastore, but I see that it also has errors with garbage collection.

The old datastore is running a verification job with a lot of these errors.

'27db42a5e9bfa9f65cd96f323607dffdf0fc4a4d25c259c90e6f925b26bcca2e' - No such file or directory (os error 2)

2022-12-27T14:27:56-05:00: can't verify chunk, load failed - store 'store007', unable to load chunk 'd7fc52ab62ce19020c0f9a2c8103ee371d8f0e2cebaabeabc6336aa4291ae1a6' - No such file or directory (os error 2)

2022-12-27T14:27:56-05:00: can't verify chunk, load failed - store 'store007', unable to load chunk 'e28e067e7cceae54a85233b8665e31eddffcf34a4ffaa785e5dcc22b5548bcf6' - No such file or directory (os error 2)

2022-12-27T14:27:56-05:00:
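Since the verify log names each missing chunk, one way to see whether another copy of the datastore still has them is to look them up directly. A minimal sketch, assuming the usual PBS chunk layout <store>/.chunks/<first four hex digits of the digest>/<digest> and a task log saved to a plain text file; the function names missing_digests and check_in_store are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list chunk digests that a verify task reported as missing and check
# whether another copy of the datastore still has them.
# Assumption: chunks live at <store>/.chunks/<first 4 hex digits>/<digest>.
set -euo pipefail

# missing_digests LOGFILE
# Prints the unique digests from "unable to load chunk '<digest>'" lines.
missing_digests() {
  grep -oE "unable to load chunk '[0-9a-f]{64}'" "$1" \
    | grep -oE '[0-9a-f]{64}' \
    | sort -u
}

# check_in_store STORE_PATH
# Reads digests on stdin and prints "present <digest>" or "missing <digest>".
check_in_store() {
  local store=$1 digest
  while read -r digest; do
    if [[ -f "$store/.chunks/${digest:0:4}/$digest" ]]; then
      echo "present $digest"
    else
      echo "missing $digest"
    fi
  done
}
```

For example (paths hypothetical): missing_digests verify.log | check_in_store /mnt/nfs/store007 | grep -c '^missing'. Note that a log line truncated before "unable to load chunk" is not matched.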

... which might be because the old store is corrupted for some reason. The error messages also imply that chunks are missing from the store.

@l.wagner I do have access to the datastore, kind of. I also have the datastore synced to another store on an NFS server, which doesn't seem to have any errors at the moment.

If that other store passes verification jobs, I would just restore the datastore from there. It really seems that something went wrong along the way when you copied the store the first time...

@l.wagner Thank you. I am in the process of doing that. My main issue is that syncing from the remote is very slow. When I used rsync, it took roughly 24 hours. Syncing from the remote takes a lot longer: it's been 15 hours so far and only 6 out of 76 backups have completed. I know that the first backup probably takes the longest and each one after it is shorter, but I assume it will still take a long time.

Is there any other method which would be faster?

Also, if I wanted to back up the datastore somewhere else, like an object storage platform or another location without Proxmox Backup Server, what would be the most efficient method that is also reliable?

Would rsync, zfs send, or rclone work, or is something else recommended?

Thank you.

I'm afraid there isn't a faster way. How large is the datastore that you want to sync? I'd imagine the main bottleneck is probably your network.

Syncing to object storage like S3 directly from PBS is on our roadmap; however, there is no ETA yet. Until then, I'd probably use rclone for that.


For sync locations other than object stores, I'd prefer zfs send/receive, so that atomic snapshots of the datastore can be synced.
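A minimal sketch of snapshot-based replication, assuming the datastore sits on its own ZFS dataset and the target host is reachable over SSH with a dataset to receive into. The function name replicate and all dataset, host and snapshot names are hypothetical; DRY_RUN=1 only prints the commands. After the first full send, -i sends only the changes since the previous snapshot, which keeps repeated syncs of a large chunk store short.

```shell
#!/usr/bin/env bash
# Sketch: replicate the ZFS dataset that holds a PBS datastore to another host.
# All dataset/host/snapshot names are hypothetical; set DRY_RUN=1 to print only.
set -euo pipefail

# replicate SRC_DATASET DST_HOST DST_DATASET NEW_SNAP [PREV_SNAP]
replicate() {
  local src_ds=$1 dst_host=$2 dst_ds=$3 new_snap=$4 prev_snap=${5:-}
  local send_args=()

  # With a previous snapshot (present on both sides), send only the delta.
  if [[ -n $prev_snap ]]; then
    send_args+=(-i "@${prev_snap}")
  fi
  send_args+=("${src_ds}@${new_snap}")

  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "zfs snapshot ${src_ds}@${new_snap}"
    echo "zfs send ${send_args[*]} | ssh ${dst_host} zfs receive -F ${dst_ds}"
    return 0
  fi

  # The snapshot is atomic, so the stream reflects one consistent point in time.
  zfs snapshot "${src_ds}@${new_snap}"
  zfs send "${send_args[@]}" | ssh "$dst_host" zfs receive -F "$dst_ds"
}
```

For example: replicate tank/pbs backup-host backup/pbs pbs-week2 pbs-week1. Keep at least the last transferred snapshot on both sides, otherwise the next incremental send has no common base.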

If you want to make sure that your synced datastore is always consistent, you could use the read-only maintenance mode, see [1,2]. It ensures that there are no write operations on the datastore while you sync/take a snapshot.

For instance:

Code:

proxmox-backup-manager datastore update <datastore> --maintenance-mode read-only
# take snapshot/sync using rsync
proxmox-backup-manager datastore update <datastore> --delete maintenance-mode

[1] https://pbs.proxmox.com/docs/proxmox-backup-manager/synopsis.html?highlight=maintenance mode

[2] https://pbs.proxmox.com/docs/maintenance.html#maintenance-mode

Is there an error or conflict if garbage collection, verification and/or a sync job run at the same time on the same datastore? Would this cause errors? Is there a recommended procedure as to which should go first?


All operations that are performed by PBS should be appropriately locked, so it should not be possible to corrupt a datastore by, e.g., starting a GC at the same time as some other operation. By sync job, I assume you mean manually syncing to S3? In that case, I would make sure that no other operation is running at the same time; this is why I mentioned the maintenance mode above. PBS has no way of knowing that some other tool is currently operating on the datastore.

I would say the order of jobs does not matter too much, as long as you execute them regularly. It would make sense, though, to run a GC job before syncing to S3; otherwise you might upload chunks that are no longer needed.
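A minimal sketch of that order (GC first, then the upload), wrapped in the read-only maintenance mode discussed earlier in the thread. It assumes that proxmox-backup-manager garbage-collection start waits until the GC task has finished and that an rclone remote is already configured; the function name offsite_sync and the destination in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch only: run GC, then upload the datastore with rclone while PBS is read-only.
# offsite_sync is a hypothetical helper; STORE is the PBS datastore name,
# SRC_PATH its directory on disk, DEST an rclone destination (remote:bucket/path).
set -euo pipefail

offsite_sync() {
  local store=$1 src_path=$2 dest=$3
  local rc=0

  # 1. Remove unreferenced chunks first so they are not uploaded.
  #    Assumption: this command returns only after the GC task has finished.
  proxmox-backup-manager garbage-collection start "$store" || return

  # 2. Block write operations on the datastore while rclone reads the chunk store.
  proxmox-backup-manager datastore update "$store" --maintenance-mode read-only || return

  # 3. Mirror the datastore directory to object storage; keep rclone's exit status.
  rclone sync "$src_path" "$dest" || rc=$?

  # 4. Always lift the maintenance mode again, even if rclone failed.
  proxmox-backup-manager datastore update "$store" --delete maintenance-mode
  return "$rc"
}
```

For example: offsite_sync store007 /mnt/datastore/store007 s3remote:pbs-backup/store007. GC runs before the maintenance mode is set, because GC itself needs to write to the datastore.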

Hello Lukas, I was just going back over this thread. In order to get my datastore onto some type of cloud object storage, would you recommend rclone? Or restic? I'd prefer restic because it has snapshots, chunking, integrity checks and security. I'm just wondering if it takes considerably longer than using rclone alone.


I have used restic before and I was quite happy with it. However, consider that many of restic's features are "wasted" here.

Datastores are generally already chunked, Proxmox Backup Server has verification jobs for ensuring integrity, and it can also encrypt chunks (security), etc.

Keep in mind that restic's integrity checks have to download *all* data to verify its correctness.

I guess both tools would work equally well for that case.
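If restic is used anyway, restic check only reads every pack file when asked to with --read-data; --read-data-subset=n/t reads just the n-th of t slices. A minimal sketch of spreading that download over several runs, assuming a daily schedule; the helper name restic_subset and the choice of 7 slices are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: rotate restic's --read-data-subset so each run downloads only
# 1/SLICES of the repository, and all data is read once every SLICES runs.
set -euo pipefail

# restic_subset RUN_INDEX SLICES -> prints "n/SLICES" with n in 1..SLICES
restic_subset() {
  local run=$1 slices=$2
  # 10# forces base 10, so zero-padded values such as "008" from date +%j work
  echo "$(( 10#$run % slices + 1 ))/$slices"
}
```

For example: restic check --read-data-subset="$(restic_subset "$(date +%j)" 7)". Around the turn of the year the day-of-year counter restarts, so one slice may be read twice or skipped once.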

Are they encrypted by default? Is there anything I need to do to encrypt or decrypt them aside from the encryption key? How secure is it?


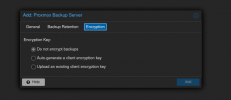

I can see a partial key when I hover over the VM -> Encryption. I also have one of my initial VMs from 2020 which is unencrypted. Is there any way to go back and encrypt it? Are we able to change the encryption key?

I think because my store is getting quite large, the restic integrity check is what takes up a lot of time and resources. It does a whole scan and only then makes changes.


Not by default; you have to set an encryption key in the storage configuration for the backup server in Proxmox VE. Please note that adding an encryption key to an existing backup server does not encrypt existing backups; it only applies to new content. We use the AES-256-GCM cipher, which should be pretty solid in terms of security.

AFAIK there is nothing built in to encrypt existing backups. The encryption key can be changed in the same dialog window as shown above; however, you will then not be able to restore backups that were encrypted with the old key. In other words, old content will not be re-encrypted with the new key.