Hello!

Please help me, I cannot find where the problem is. Software: Backup Server 3.0-3

There are two identical datastores; let's call them DATASTORE-OKAY and DATASTORE-SLOW.

They are mounted from a QNAP NAS over NFS. The NAS has an NVMe SSD cache on top of ZFS.

DATASTORE-OKAY:

Index file count: 60

Original Data usage: 722.906 GiB

On-Disk usage: 20.307 GiB (2.81%)

On-Disk chunks: 31985

Deduplication Factor: 35.60

Garbage collection starts at 0:00 and finishes at 0:09. This seems fine to me.

DATASTORE-SLOW:

Index file count: 31

Original Data usage: 30.289 TiB

On-Disk usage: 11.008 GiB (0.04%)

On-Disk chunks: 14075

Deduplication Factor: 2817.61

Garbage collection starts at 0:30 and today finished at 7:15 (!!!), so it took 6 hours and 45 minutes (!!!).
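As a quick sanity check, the deduplication factors above can be recomputed as Original Data usage divided by On-Disk usage. The sketch also makes a rough estimate of how many chunk references the index files contain, which matters because the mark phase of garbage collection reads every index file and touches each chunk it references. That estimate assumes the backups are VM images stored as fixed 4 MiB chunks and ignores compression; neither is stated in the post, so treat the numbers as order-of-magnitude only.

```python
# Figures copied from the datastore summaries above.
GIB_PER_TIB = 1024
MIB_PER_GIB = 1024
ASSUMED_CHUNK_MIB = 4  # assumption: fixed 4 MiB chunks (VM image backups)

datastores = {
    "DATASTORE-OKAY": {"original_gib": 722.906, "on_disk_gib": 20.307},
    "DATASTORE-SLOW": {"original_gib": 30.289 * GIB_PER_TIB, "on_disk_gib": 11.008},
}


def dedup_factor(original_gib: float, on_disk_gib: float) -> float:
    """Logical (original) data divided by what is actually stored on disk."""
    return original_gib / on_disk_gib


def estimated_index_entries(original_gib: float, chunk_mib: int = ASSUMED_CHUNK_MIB) -> float:
    """Rough number of chunk references in all index files, assuming fixed-size chunks."""
    return original_gib * MIB_PER_GIB / chunk_mib


for name, stats in datastores.items():
    factor = dedup_factor(stats["original_gib"], stats["on_disk_gib"])
    entries = estimated_index_entries(stats["original_gib"])
    print(f"{name}: dedup factor {factor:.2f}, ~{entries:,.0f} index entries")
```

The recomputed factors match the GUI up to rounding of the displayed values. Under the 4 MiB assumption, DATASTORE-SLOW's index files reference roughly 43 times more chunks than DATASTORE-OKAY's, even though it stores fewer unique chunks.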

The interesting thing is that the amount of data does not grow, yet the process takes about 20 minutes longer every day.

This is what the garbage collection run time has looked like over the past few days, copied from "Longest tasks (30 days)":

2023.10.26.: garbage collection takes 6:45:21

2023.10.25.: garbage collection takes 6:18:18

2023.10.24.: garbage collection takes 5:56:36

2023.10.23.: garbage collection takes 5:36:10

2023.10.22.: garbage collection takes 5:16:28

2023.10.21.: garbage collection takes 4:56:58

2023.10.20.: garbage collection takes 4:37:17

2023.10.19.: garbage collection takes 4:17:37

2023.10.18.: garbage collection takes 3:57:54

From day to day, it gets slower by about 20 minutes. In practice, the increase is very close to 20 minutes every day.
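To put numbers on the trend, the short sketch below turns the run times listed above into day-over-day differences. The durations are copied from the list; the helper names `parse_hms` and `daily_growth` are just for illustration.

```python
from datetime import timedelta
from typing import Dict, List, Tuple

# Garbage collection run times copied from "Longest tasks (30 days)".
gc_runs: Dict[str, str] = {
    "2023.10.26": "6:45:21",
    "2023.10.25": "6:18:18",
    "2023.10.24": "5:56:36",
    "2023.10.23": "5:36:10",
    "2023.10.22": "5:16:28",
    "2023.10.21": "4:56:58",
    "2023.10.20": "4:37:17",
    "2023.10.19": "4:17:37",
    "2023.10.18": "3:57:54",
}


def parse_hms(value: str) -> timedelta:
    """Convert an 'H:MM:SS' string into a timedelta."""
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def daily_growth(runs: Dict[str, str]) -> List[Tuple[str, timedelta]]:
    """Return (day, increase over the previous day's run), oldest first."""
    ordered = sorted(runs.items())  # 'YYYY.MM.DD' keys sort chronologically
    return [
        (day, parse_hms(current) - parse_hms(previous))
        for (_, previous), (day, current) in zip(ordered, ordered[1:])
    ]


for day, delta in daily_growth(gc_runs):
    print(f"{day}: +{delta}")
```

For these runs the increase is between about 19.5 and 21.7 minutes per day, with a larger jump of about 27 minutes on 2023.10.26.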

No other tasks run in parallel: neither backups nor verification jobs.

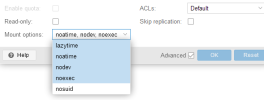

/etc/fstab:

[NAS-IP-ADDRESS]:/DATASTORE-OKAY /mnt/NAS/DATASTORE-OKAY nfs defaults 0 0

[NAS-IP-ADDRESS]:/DATASTORE-SLOW /mnt/NAS/DATASTORE-SLOW nfs defaults 0 0

mount:

[NAS-IP-ADDRESS]:/DATASTORE-SLOW on /mnt/NAS/DATASTORE-SLOW type nfs4 (rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=172.21.111.220,local_lock=none,addr=[NAS-IP-ADDRESS])

[NAS-IP-ADDRESS]:/DATASTORE-OKAY on /mnt/NAS/DATASTORE-OKAY type nfs4 (rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=172.21.111.220,local_lock=none,addr=[NAS-IP-ADDRESS])
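Both mounts come from plain `defaults` entries in /etc/fstab, and the negotiated NFS options can be compared mechanically. This sketch parses the two option strings from the `mount` output above and reports any option that differs; `parse_mount_options` and `option_differences` are hypothetical helpers written only for this comparison.

```python
from typing import Dict, Optional, Tuple

# Option strings copied from the `mount` output above.
SLOW_OPTS = "rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=172.21.111.220,local_lock=none,addr=[NAS-IP-ADDRESS]"
OKAY_OPTS = "rw,relatime,vers=4.2,rsize=1048576,wsize=1048576,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=172.21.111.220,local_lock=none,addr=[NAS-IP-ADDRESS]"


def parse_mount_options(opts: str) -> Dict[str, Optional[str]]:
    """Split 'a,b=c,...' into {'a': None, 'b': 'c', ...}; bare flags map to None."""
    parsed: Dict[str, Optional[str]] = {}
    for item in opts.split(","):
        key, sep, value = item.partition("=")
        parsed[key] = value if sep else None
    return parsed


def option_differences(a: str, b: str) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return options that are missing from one mount or have different values."""
    pa, pb = parse_mount_options(a), parse_mount_options(b)
    return {
        key: (pa.get(key), pb.get(key))
        for key in sorted(pa.keys() | pb.keys())
        if key not in pa or key not in pb or pa[key] != pb[key]
    }


print(option_differences(SLOW_OPTS, OKAY_OPTS) or "no differences")
```

For the two mounts shown, the result is empty, so the NFS client options are the same for both datastores.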

Could you please help me figure out where I should look for the problem?
