I am following this guide:

https://pve.proxmox.com/wiki/Full_Mesh_Network_for_Ceph_Server#Broadcast_setup

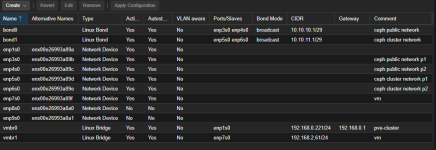

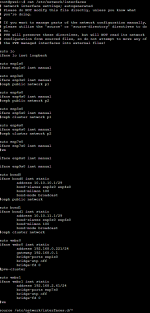

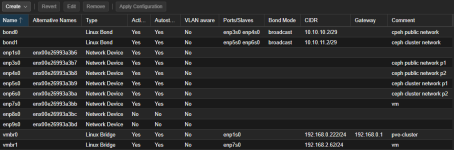

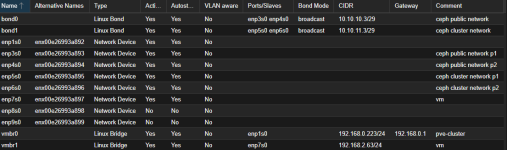

As described in the last paragraph of the BROADCAST SETUP section, I set up two network cards on each host for Ceph.

When I install and configure Ceph, the SETUP -> CONFIGURATION window has two fields to fill in:

- Public Network

- Cluster Network

Should I select the same bond for both, or would it be better to create two bonds, one for each network?