Hello,

I've tried many times.

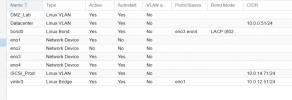

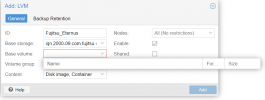

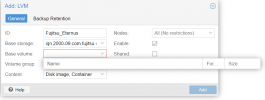

I've added the iSCSI target iqn.2000-09.com.fujitsu (iSCSI) and I can see four Fujitsu targets. I chose one, but when I try to create an LVM storage I can't go further, because the Base volume field is empty:

Datacenter -> Storage -> Add -> LVM
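As a rough sanity check on the "four targets", here is a minimal bash sketch (not something the Proxmox GUI runs) that groups the `iscsiadm --mode discovery` output quoted further down by target IQN. The sample data is pasted from that output, and the helper name `summarize_targets` is purely illustrative. It reports four distinct IQNs, each advertised on three portals (one IPv6 link-local, one 10.0.12.x and one 10.0.14.x address), which suggests the four entries are four ports of the same ETERNUS system rather than four different LUNs.

```bash
#!/usr/bin/env bash
# Minimal sketch: count how many portals advertise each target IQN.
# Sample data pasted from the `iscsiadm --mode discovery` output in this post.
discovery_output='[fe80::200:e50:dc84:8400]:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
10.0.12.61:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
10.0.14.61:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
[fe80::200:e50:dc84:8401]:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
10.0.14.62:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
10.0.12.62:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
[fe80::200:e50:dc84:8410]:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
10.0.12.63:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
10.0.14.63:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
[fe80::200:e50:dc84:8411]:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101
10.0.12.64:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101
10.0.14.64:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101'

# Hypothetical helper: reads "portal,tpgt iqn" lines on stdin and prints
# "<iqn> <number of portals>" for each unique target, sorted by IQN.
summarize_targets() {
  awk 'NF == 2 { count[$2]++ } END { for (iqn in count) print iqn, count[iqn] }' | sort
}

printf '%s\n' "$discovery_output" | summarize_targets
```

On the host itself the function can read the live output instead, e.g. `iscsiadm --mode discovery --type sendtargets --portal 10.0.14.61 | summarize_targets`.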

I've tried removing the iSCSI target and adding it again on the host, without success. It's a 12 TB thin LUN.
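To see which block device the LUN landed on, a similar bash sketch can group the `/dev/disk/by-id` symlinks by the device they point to. The listing is trimmed to the relevant lines of the `ls -l /dev/disk/by-id` output quoted further down, and `links_by_device` is a hypothetical helper name. In that listing `sdc` is the only device carrying FUJITSU ETERNUS identifiers, and only `sda3` has an `lvm-pv-uuid-*` link, which suggests no LVM physical volume exists on `sdc` yet.

```bash
#!/usr/bin/env bash
# Minimal sketch: list /dev/disk/by-id link names per target device.
# Sample lines copied from the `ls -l /dev/disk/by-id` output in this post.
byid_listing='lrwxrwxrwx 1 root root 10 Apr 4 15:47 lvm-pv-uuid-tNZX3Q-PyTX-rBd5-ti47-zQCe-1Edy-4rc2qz -> ../../sda3
lrwxrwxrwx 1 root root 9 Apr 4 15:44 scsi-3600000e00d3200000032121000060000 -> ../../sdc
lrwxrwxrwx 1 root root 9 Apr 4 15:44 scsi-SFUJITSU_ETERNUS_DXL_320484 -> ../../sdc
lrwxrwxrwx 1 root root 9 Apr 3 16:41 usb-FUJITSU_Dual_microSD_012345678901-0:0 -> ../../sda
lrwxrwxrwx 1 root root 9 Apr 4 15:44 wwn-0x600000e00d3200000032121000060000 -> ../../sdc'

# Hypothetical helper: for every symlink line ("... name -> ../../dev"),
# print "<device> <link name>", sorted so aliases of one disk sit together.
# Lines without "->" (such as "total 0") are skipped.
links_by_device() {
  awk '$(NF-1) == "->" { dev = $NF; sub(".*/", "", dev); print dev, $(NF-2) }' | sort
}

printf '%s\n' "$byid_listing" | links_by_device
```

On the host the live listing can be piped in directly: `ls -l /dev/disk/by-id | links_by_device`.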

ls -l /dev/disk/by-id

```bash
total 0
lrwxrwxrwx 1 root root 10 Apr 3 16:41 dm-name-pve-root -> ../../dm-1
lrwxrwxrwx 1 root root 10 Apr 3 16:41 dm-name-pve-swap -> ../../dm-0
lrwxrwxrwx 1 root root 10 Apr 3 16:41 dm-uuid-LVM-5zWj58ww4Elzf8UHnCdTfTeZlpBmaFxE9dcn9xuCr6G1eI8wSy5B5O185nSmHm56 -> ../../dm-1
lrwxrwxrwx 1 root root 10 Apr 3 16:41 dm-uuid-LVM-5zWj58ww4Elzf8UHnCdTfTeZlpBmaFxEqrml41GxQJObaYEJGykmfSuPn1KL1pzS -> ../../dm-0
lrwxrwxrwx 1 root root 10 Apr 4 15:47 lvm-pv-uuid-tNZX3Q-PyTX-rBd5-ti47-zQCe-1Edy-4rc2qz -> ../../sda3
lrwxrwxrwx 1 root root 9 Apr 4 15:44 scsi-3600000e00d3200000032121000060000 -> ../../sdc
lrwxrwxrwx 1 root root 9 Apr 4 15:44 scsi-SFUJITSU_ETERNUS_DXL_320484 -> ../../sdc
lrwxrwxrwx 1 root root 9 Apr 3 16:41 usb-FUJITSU_Dual_microSD_012345678901-0:0 -> ../../sda
lrwxrwxrwx 1 root root 10 Apr 3 16:41 usb-FUJITSU_Dual_microSD_012345678901-0:0-part1 -> ../../sda1
lrwxrwxrwx 1 root root 10 Apr 3 16:41 usb-FUJITSU_Dual_microSD_012345678901-0:0-part2 -> ../../sda2
lrwxrwxrwx 1 root root 10 Apr 4 15:47 usb-FUJITSU_Dual_microSD_012345678901-0:0-part3 -> ../../sda3
lrwxrwxrwx 1 root root 9 Apr 4 15:44 wwn-0x600000e00d3200000032121000060000 -> ../../sdc
```

iscsiadm --mode discovery --type sendtargets --portal 10.0.14.61

```bash
[fe80::200:e50:dc84:8400]:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
10.0.12.61:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
10.0.14.61:3260,1 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0000
[fe80::200:e50:dc84:8401]:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
10.0.14.62:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
10.0.12.62:3260,2 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0001
[fe80::200:e50:dc84:8410]:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
10.0.12.63:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
10.0.14.63:3260,3 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0100
[fe80::200:e50:dc84:8411]:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101
10.0.12.64:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101
10.0.14.64:3260,4 iqn.2000-09.com.fujitsu:storage-system.eternus-dxl:00321210:0101
```

Any suggestions?
