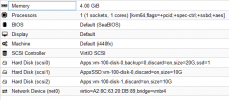

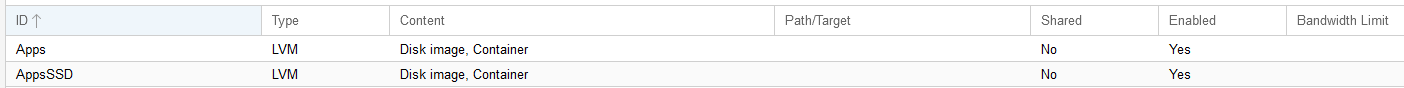

Hello, I am running Proxmox 6.2 with a few VMs stored on LVM storage backed by an HDD RAID.

I have one guest with a 1TB SCSI disk with Discard disabled (I do not want a discard on every delete).

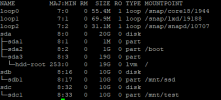

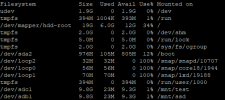

Inside the guest I have an LVM partition with 112G currently used out of 983GB total.

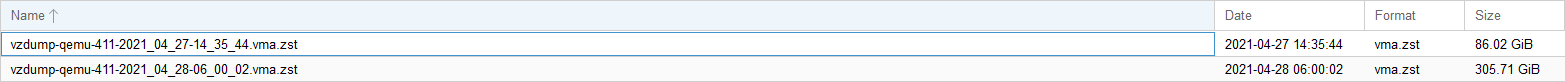

Over the last few days I removed some data from this partition, and the Proxmox backup now shows:

INFO: backup is sparse: 537.12 GiB (53%) total zero data

INFO: transferred 1010.00 GiB in 6329 seconds (163.4 MiB/s)

Backup compression is set to ZSTD and the resulting backup file is 297GB.
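To make the mismatch concrete, here is a quick back-of-the-envelope calculation from the figures above. It is only a sketch: the variable names are mine, and I am assuming the 112G reported inside the guest is in GiB like the backup log.

```python
# Figures copied from the backup log and from the guest.
# Assumption: all sizes are treated as GiB.
total_gib = 1010.00   # "transferred 1010.00 GiB"
zero_gib = 537.12     # "537.12 GiB (53%) total zero data"
used_gib = 112        # space currently in use inside the guest
seconds = 6329        # backup duration from the log

# Data the backup actually has to read and compress.
nonzero_gib = total_gib - zero_gib
# Non-zero blocks that do not belong to live files (leftovers of deleted data).
stale_gib = nonzero_gib - used_gib
# Cross-check of the percentage and rate printed by the log.
zero_pct = 100 * zero_gib / total_gib
rate_mib_s = total_gib * 1024 / seconds

print(f"non-zero data:      {nonzero_gib:.2f} GiB")
print(f"stale (not in use): {stale_gib:.2f} GiB")
print(f"zero share:         {zero_pct:.0f}%")
print(f"transfer rate:      {rate_mib_s:.1f} MiB/s")
```

So well over half of the non-zero data in the backup appears to be leftover blocks from deleted files rather than data that is actually in use.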

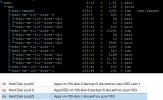

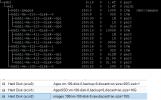

Running fstrim / -v reports some data being trimmed, but the backup size does not decrease.

Could you tell me how to reduce the backup size?
