Eh, Hynix seems better, honestly. But no matter, I need to get the stuff ASAP so I can start doing something with it.

[SOLVED] From ESXi to PROXMOX

- Thread starter GazdaJezda

- Start date

OK, I can say that some 85% of the road to a fully working server is done. The EVO 970 for the system is now plugged in, and right now I'm downloading the Proxmox VE 6.3-1 ISO to install. Are there any special tricks? I'm not going to use the whole disk for the system, since I read that it is better to leave some unpartitioned space, which can help with wear later on. Any special hints, please?

OK, I (hopefully) successfully installed Proxmox VE 6.3-2 on the new combo with default settings. The installer created LVM and LVM-thin volumes with enough free space, so even though the proper hard drives will only arrive next year (Amazon says 7.1.2021), I would still like to try how virtualization works on Proxmox. I would like to convert some of my current ESXi VMs, but I cannot find an option in the Proxmox web UI to upload OVF files and convert them.

Do I need to copy the files via shell (scp), or can I not do that since there is only one disk in the server (the one where the system resides)? Thanks for any useful info on how I can upload OVA files to the "data" volume so I can convert and start a VM. Thanks!

maybe check this out:

https://pve.proxmox.com/wiki/Migration_of_servers_to_Proxmox_VE

And if you want to have an option to move into ZFS-based storage, then maybe the approach I have used back then might help:

https://forum.proxmox.com/threads/migrate-vms-from-proxmox-hyper-v.48682/post-228123

All the best
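For anyone following along, here is a minimal sketch of one way to do the import from the shell, assuming the OVA has already been copied to the Proxmox host with scp. The function name `import_ova`, the VM ID 200, the file path and the host name are all made up for illustration; `qm importovf` is the Proxmox command that creates a VM from an OVF manifest.

```shell
#!/bin/sh
# Sketch only: run on the Proxmox host after copying the OVA there, e.g.
#   scp myvm.ova root@proxmox-host:/var/tmp/   (host name and path are placeholders)

# import_ova VMID OVA_FILE STORAGE
# Unpacks the OVA (a plain tar archive) and hands the OVF manifest to qm.
import_ova() {
    vmid=$1; ova=$2; storage=$3
    workdir=$(mktemp -d) || return 1
    tar -xf "$ova" -C "$workdir" || return 1
    # Pick the first .ovf manifest found in the archive.
    ovf=$(find "$workdir" -name '*.ovf' | head -n 1)
    [ -n "$ovf" ] || { echo "no .ovf manifest in $ova" >&2; return 1; }
    qm importovf "$vmid" "$ovf" "$storage"
    status=$?
    # The disks are imported into the target storage, so the unpacked copy can go.
    rm -rf "$workdir"
    return $status
}

# Example (hypothetical VM ID and file name; local-lvm is the storage name used in this thread):
#   import_ova 200 /var/tmp/myvm.ova local-lvm
```

Check the hardware settings of the new VM before the first boot; the import may not carry over everything (network cards in particular).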

tburger: many thanks for the answer. I will try that tomorrow. And yes, there are a few things I'm not familiar with (in Proxmox), but with help from you guys here I will handle it, no doubt. Thanks again.

Quick update: the SSDs are still not here, and neither are the additional 64 GB of RAM. But just as a test I managed to successfully transfer and start 2 of my VMs (1 Linux, Ubuntu, and 1 FreeBSD) from my home ESXi box. The first one will run on the ProxBox from now on, until I get all the components and migrate all the other VMs as well. The migration process is quite easy. Once the disks arrive I will see how ZFS performs, damn you Amazon!!

Also, the new server is quite a fun piece of kit (small and quite powerful). With the fans at 3K rpm, the max. temperature I saw was 47 °C, which is great. So far everything is running great and I only have positive things to say about Proxmox.

OK, now let's get real. Everything is in place now, so I just need a hint from someone who knows ZFS better than me (that's not so hard, hehe).

This is now final configuration:

- Samsung EVO 970 - the system resides here

- 4 x Samsung EVO 860 2TB, which I want to use as ZFS RAIDZ (need space)

- 128 GB ECC RAM

Since I need space a little more than speed, I chose RAIDZ over mirror / RAID10. I honestly hope there will still be some speed, since the drives are SSDs. If it is faster than my current RAID5 volume of IDE drives on the Areca controller, that's perfectly fine with me, I will be happy, really. So please, which ashift and compression options should I use? And of course, thank you in advance!!!

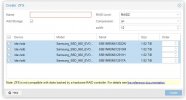

Not much I can advise:

- Use ashift=12 (for 4K alignment, IIRC).

- If you are using ZFS zvols (i.e. you place your VMs on ZFS), make sure to use a volume block size of 64 KB or 128 KB. Otherwise you will have a lot of overhead in terms of space usage, which you might not notice in time. Later on, things are usually pretty tough to fix.

I personally don't use compression because my data is DM-encrypted, so there is no need for that.

Last thing: keep an eye on the wear-out of the SSDs. I have no experience with those specific ones, but better safe than sorry.
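A small sketch for checking both settings on an existing pool, in case it helps. The function name `show_zfs_layout` is made up; the `zpool get` and `zfs get` calls only read properties and change nothing. The commented `pvesm set` line assumes the Proxmox storage ID is the same as the pool name.

```shell
#!/bin/sh
# Sketch only: read-only checks for the two settings discussed above.

# show_zfs_layout POOL
show_zfs_layout() {
    pool=$1
    # ashift is a pool property; 12 means 2^12 = 4096-byte sectors.
    echo "ashift=$(zpool get -H -o value ashift "$pool")"
    # volblocksize is fixed per zvol at creation time, so list it for every zvol.
    zfs get -r -t volume -H -o name,value volblocksize "$pool"
}

# Example (VMSTORE is the pool name used in this thread):
#   show_zfs_layout VMSTORE
# The block size for newly created VM disks is set on the Proxmox storage, e.g.
# (assumption: the storage ID matches the pool name):
#   pvesm set VMSTORE --blocksize 64k
```

Existing zvols keep the block size they were created with, so a changed storage setting only affects disks created (or moved) afterwards.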

OK, I will try to do it that way. I think I already have that block size on my VMs. But anyway, I think I will need to do a fresh install of Linux VMs and replace the current FreeBSD-based ones. Linux seems much better supported in KVM than BSD (no shit). Also, the majority of my VMs are quite small and will be migrated quickly. Except for one, which is our data warehouse; that one will take a while. But hey, step by step, and I think it will get done.

Hmm. I just created a ZFS volume via the Proxmox web UI, where I selected RAIDZ1, all 4 SSD drives and ashift 12, and after confirming, an error popped up. But the system says that the volume is created and OK. I cloned one VM to it and it seems that everything is OK. Shall I use it, or delete it and create it again? Thank you.

Be careful with EVO drives, they suck at sync writes. That is the case with Ceph, and maybe with ZFS too.

I intend to use ZFS, I hope it will be fine. At the moment there are 2 VMs running (both Linux-based).

Hi,

I think you should be OK. Some weeks ago there was a problem where the API returned an error even though ZFS storage creation was successful. As you have already been able to migrate VMs to it, I'd assume it's fine.

Could you please post the output of the following command?

Code:

pveversion -v

Also, I failed to reproduce this on

Code:

pve-manager: 6.3-3 (running version: 6.3-3/eee5f901)

libpve-storage-perl: 6.3-4

Dominic: thank you! I think you are completely right. Because I only yesterday run update of my Proxmox system (there was some 25 packet waiting to install). And since i create a ZFS pool day before it was generated with the older version, which generate that output.

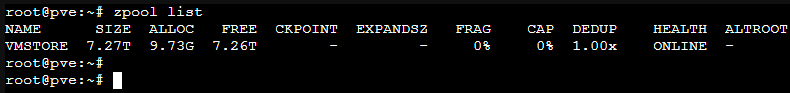

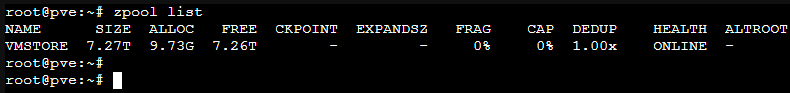

"zpool list" ouput is ok i believe:

Also, since there are 2 VM's running without any problem (for now) i will leave it as it is. When i will found time, i'm gonna transfer my other VMs.

Pveversion output is:

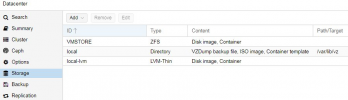

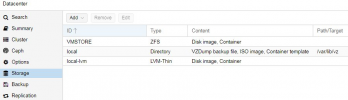

The only problem (well, not a problem, I'd rather call it an obstacle to cross) is that if I try to back up a VM, I only get the smallest drive / volume in the selection list:

Is this related with

Can I somehow tag the "local-lvm" or "VMSTORE" volume to also be a backup device? Then I could rsync it to another location, that would be great.

There are a few things which are different from ESXi, but so far I'm quite happy with what I got. The next step is buying a (community) license to get rid of the "You do not have a license" message box at login.

Not directly. At the bottom of the Storage wiki article there are links to details about all storage types, and none of LVM, LVM-thin or ZFS has "backup" as an available content type. You could do one of the following:

- Create a directory storage on your zpool. Something like the following:

Code:

zfs create VMSTORE/backup

pvesm add dir backupstorage --path /VMSTORE/backup --content backup

vzdump 202 --storage backupstorage

- Create a PBS VM, use all its features, and rsync from there to some remote location.

I can confirm that with ZFS they suck ... and:

From the data sheet, your SSDs have a 1,200 TBW limited warranty =>

1200 TBW / 5 years / 365 days = 0.657 TBW / day

Next, with raidz1: for every 1 TB that you write to the pool, you will write approx. 1.25 TB (at minimum) across the 4 SSDs, so be aware of this!
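The same arithmetic as a small shell sketch, in case someone wants to plug in their own numbers. Both function names are made up, and the raidz1 figure uses a deliberately simple model (full-width stripes, one parity sector per stripe, no compression, no padding), so treat it as a rough lower bound rather than a measurement. Under that model a 4-disk raidz1 comes out at about 1.33 TB of raw writes per 1 TB of data, a bit above the 1.25 quoted above.

```shell
#!/bin/sh
# daily_tbw_budget TBW YEARS
# Rated TB written, spread evenly over the warranty period (per SSD).
daily_tbw_budget() {
    awk -v tbw="$1" -v years="$2" 'BEGIN { printf "%.2f\n", tbw / years / 365 }'
}

# raidz1_raw_tb DATA_TB DISKS
# Raw TB written across all disks for DATA_TB of data, assuming ideal
# full-width stripes: DISKS-1 data sectors plus 1 parity sector.
raidz1_raw_tb() {
    awk -v data="$1" -v n="$2" 'BEGIN { printf "%.2f\n", data * n / (n - 1) }'
}

daily_tbw_budget 1200 5   # prints 0.66
raidz1_raw_tb 1 4         # prints 1.33
```

The raw figure is spread over all four drives, so each SSD sees roughly a quarter of it.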

Aha. OK, I will dive into that and try it. Thank you!

OK, since this server is for my personal use it won't see a lot of writes; it will mainly be used for reading (movies, music...) and for serving some web pages. It is not for heavy usage. I hope the disks will survive at least 4 years.

But at the moment I have another problem that I'm banging my head against: I converted my OpenVPN appliance VM and also installed the QEMU guest agent in it. It works OK, but after 2 or 3 days it hangs with:

Only a hard reset (STOP + START) of the VM helps. I think the VMware drivers (which came with the VM) could be the reason:

but unfortunately I don't know how to disable them. Blacklisting or something... I need to figure out how. I will try, and if the issue repeats I will post here again.

With best regards! Stay well!

Hi @GazdaJezda

I'm not someone who knows kernel stuff, but my guess is that this is about some kind of tool that tries to stop your virtual HDD (spin down?). Maybe another forum member has more experience with the kernel?

Good luck / Bafta !

Hello @GazdaJezda ,

I am glad that I could help solve your problem (I hope so...). When you are sure that your problem is solved, maybe you will find the time to mark this post as [solved]!

Good luck /Bafta ... and stay safe!
