[SOLVED] From ESXi to PROXMOX

- Thread starter GazdaJezda


GazdajezdaHi!

Before i jump into completely new world of virtualization (for me of course) please a need a hint that my choosen hardware will play fine with Proxmox. I need to replace my 6 year old homelab (ESXi) server (i5, 32 gigs of ram, hw raid with 6 drives..). Nothing special, but it runs well for all that time. I wann't to replace it with this new sexy small combo which seems almost ideal for me:

That would be nice replacement for my current system and to replace ESXi with PROXMOX. But ... MB and chasis are so small that i cannot put any decent RAID card into (because of overheating problems, which may arise). Instead of that, i will user M.2 drive for system and use ZFS (RAIDZ) for SSD drives for their entire capacity. I choose RAIDZ because of smalest space tax - on MB there is room only for 4 SATA drives. So please anyone who can help me with tips & strategy of how to prepare / format SSD's (best alignment options for that particular SSD drives) and how / where to store ZFS ZIL & logs. Can system M.2 drive be used for ZIL? Since that is home server i do not need any form of heavy logging. I just need a stable system. And yes, i hope that i will end with SSD drive speed better that of current system (old Areca 1231ML RAID card) which is from 150 to 230 Mb/s. I know ZFS is software 'raid' system, but it is real to expect that? Since drives are SSD and whole system is newer i hope that is not so unrealistic.

- https://www.supermicro.com/en/products/motherboard/M11SDV-8C-LN4F (AMD EPYC 3251 soc - small but powerfull)

- https://www.supermicro.com/en/products/chassis/1u/505/SC505-203B

- 64Gb of RAM

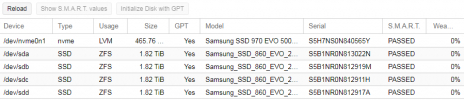

- 4 x Samsung EVO860 2Tb

- 1 x M.2 drive

Thanks to anyone who will find time to reply meStay safe & healty!

This will all work! The only thing you are limited on is CPU / compute: with 8 cores and 16 threads you can run maybe 4 full VMs or 8 LXC containers, which may be an issue if you need more. I have built all my home systems for decades (I have a hardware background) and would rather build my own to save money and expand easily.

My current server uses two older AMD Opteron 6276 CPUs (16 cores x 2 = 32 cores), found on eBay brand new, with no hours on them. I built the rest around that platform with:

- Supermicro MB, E-full size (eBay), rebuilt: $170.00

- AMD Opteron 6276, 16 cores x 2 = 32 cores: $100 each at that time. Found on eBay brand new, no hours on them

- 128 GB ECC DDR3 RAM: had 64 GB and added 64 GB later, at a total cost of about $200.00

- LSI RAID with 1 GB DDR4 cache (so no ZFS), 16 ports: $161.00

- 8-bay SSD with 1 x 4 TB SSD (had 3 added on other and can add 4 more): $107.00 x 4 = $428.00

- 4 x 2 TB SATA with 4 other bays: $37 each, enterprise rebuilds, certified, 5-year warranty

- 2 x 1 Gigabit Ethernet, built-in Intel

- Can run 12 full VMs and/or 12 LXC containers at the same time

- All of this in a full-size ATX desktop / server case with insulation and up to 6 180 mm fans (very quiet), plus an 850 W Platinum P/S. Case $150.00, P/S $109.00

All in, it was about 1300.00 US dollars and well worth it, and it can run 12 full VMs and/or 12 LXC containers at the same time.

I would also recommend checking whether you can do something like this with AMD Ryzen or the older Threadripper family. Their higher clock speeds give better cost vs. performance than the Epyc family. This will allow you to add, change, upgrade, etc. later if you need to.

Either way good luck.

| AMD Ryzen™ 9, 12 cores / 24 threads | AMD Ryzen™ 7, 16 cores / 32 threads |
|---|---|


Hello!

At the moment my box is running fine and I'm happy. It hosts all my home VMs, which I had to reinstall or convert from FreeBSD to Alpine Linux; six of them went through this reincarnation. The exceptions are the pfSense VM, which comes bundled with FreeBSD, and the OpenVPN appliance, which is Ubuntu-based anyway. Besides that, I also installed, configured and successfully tested NUT (UPS support), so the major problems that worried me are no longer such a big deal. The system works and has very low power consumption (30 W), which is great. Disk speed inside a VM (160-200 MB/s) is also fine for me. Directly on the PVE host it is faster, of course. More than enough for this server's purpose.

But there is something else that I don't understand:

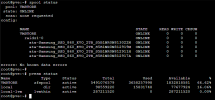

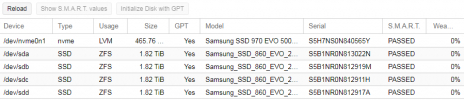

As I already wrote, I have 4 drives in a RAIDZ1 volume:

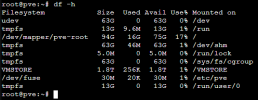

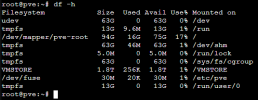

There are four 2 TB drives in a kind of RAID5 (the VMSTORE volume), so one drive's worth of space goes to redundancy and approx. 6 TB is left for data. So far so good, but I don't understand why I see this in the PVE console:

As you can see, there is a VMSTORE that is only 1.8 TB in size. I can copy files onto it and delete them, but I cannot see any other files from the shell (console). There are supposed to be VM files (disks, configs...). Also, if 1.8 TB of space is reported, does that mean this VMSTORE volume is different from the VMSTORE in the web GUI? I know I'm not familiar enough with ZFS, but this is so confusing that I'm afraid to do anything, since I don't know what it could do to my system (which is working very well at the moment, and I don't want to spoil that).

So, many thanks to anyone who can tell me what I did wrong to get different volume sizes and contents reported.

@Dominic: I will proceed with that, but at the moment I'm afraid of doing anything (see below).

Best regards to anyone!


Dominic said:

Not directly. At the bottom of the Storage wiki article there are links to details about all storage types, and none of LVM, LVM-thin or ZFS has "backup" as an available content type. You could do one of the following:

- Create a directory storage on your zpool. Something like the following:

- Create a PBS VM, use all its features, and rsync from there to some remote location.
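The first option in the list above, a directory storage on the zpool, might look roughly like the following. This is only a minimal sketch and not the snippet from the original reply: the pool name VMSTORE comes from this thread, while the dataset name `backup` and the storage ID `vmstore-backup` are assumptions. By default the script only prints the commands instead of running them.

```shell
#!/bin/sh
# Sketch only: DATASET and STORAGE_ID are assumed names, not taken from the thread.
POOL="${POOL:-VMSTORE}"
DATASET="${DATASET:-backup}"
STORAGE_ID="${STORAGE_ID:-vmstore-backup}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands, 0 = execute them

# Print the command in dry-run mode, otherwise run it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# 1. Create a regular file system dataset on the pool.
#    With default settings it is mounted below the pool's mountpoint (/VMSTORE here).
run zfs create "$POOL/$DATASET"

# 2. Register that directory as a Proxmox VE storage that accepts backups.
run pvesm add dir "$STORAGE_ID" --path "/$POOL/$DATASET" --content backup
```

Setting `DRY_RUN=0` on the Proxmox VE host would execute the two commands for real; it is probably worth checking the printed commands first.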


Reporting of free and used storage can be confusing at first. For example, some commands take parity into account and some don't and with RAIDZ1 this alone can lead to hundreds of GB of difference.
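To put a rough number on the parity difference, here is a small arithmetic sketch for the four 2 TB drives in this thread. It is a simplification: it assumes equal drives sold in decimal TB, reports in TiB, and ignores metadata, padding and reserved space, so real tools will show somewhat less. The function name `raidz1_capacity` is made up for this example.

```shell
#!/bin/sh
# Rough RAIDZ1 capacity arithmetic for N equal drives.
# Ignores metadata, padding and reserved space, so real numbers are lower.
raidz1_capacity() {
    drives="$1"     # number of drives in the RAIDZ1 vdev
    size_tb="$2"    # size per drive in decimal TB (10^12 bytes)
    awk -v n="$drives" -v s="$size_tb" 'BEGIN {
        tib = 1024 ^ 4
        raw = n * s * 1e12 / tib            # all drives, parity included
        usable = (n - 1) * s * 1e12 / tib   # one drive worth of parity removed
        printf "raw=%.2fTiB usable=%.2fTiB parity=%.2fTiB\n", raw, usable, raw - usable
    }'
}

raidz1_capacity 4 2
```

For 4 x 2 TB this prints `raw=7.28TiB usable=5.46TiB parity=1.82TiB`. As far as I know, `zpool list` reports the raw figure for a RAIDZ pool while `zfs list` works with usable space, which is one reason the two never match.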

Consider also these two commands:

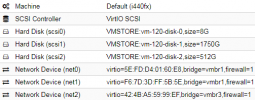

the config of the VM looks like this

Consider also these two commands:

Code:

zpool list

zfs listDisks yes, configs no. Configuration files for VMs are available atThere supposed to be a VM files (discs, configs...).

/etc/pve/qemu-server/<vmid>.conf. If you create a VM and choose a storage of type zfspool then the disks will be created on that pool. In the following code snippet the last entry is from a VM disk.

Code:

root@pve:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

tank 1.74T 3.75T 140K /tank

tank/testvol 297G 4.04T 81.4K -

tank/testvol2 1.16T 4.91T 81.4K -

tank/vm-101-disk-0 297G 4.04T 81.4K -

Code:

root@pve:~# qm config 101

bootdisk: scsi0

scsi0: tank:vm-101-disk-0,size=200G


Yes, it is confusing. Thank you for explanation, now it's a bit clearer. If i understand you correctly, every VM disc is in fact a ZFS dataset?

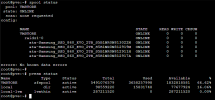

For instance, i get this zfs list output:

As you say, there really is a big difference between actual VM disc space alocated for that VM disc (i mean vm-120-*) and what zfs reports / manage:

Defined size of vm-120-disk-1 is 1750 Gb, zfs handles it like 2120 Gb.

But ok, i can live that. As i say, system works quite well so that's it for now! Mission acomplished Thread closed!

Thread closed!

For instance, i get this zfs list output:

Code:

NAME USED AVAIL REFER MOUNTPOINT

VMSTORE 3.41T 1.71T 140K /VMSTORE

VMSTORE/vm-100-disk-0 11.9G 1.72T 1.73G -

VMSTORE/vm-110-disk-0 11.9G 1.71T 6.74G -

VMSTORE/vm-120-disk-0 11.9G 1.71T 8.63G -

VMSTORE/vm-120-disk-1 2.54T 2.12T 2.12T -

VMSTORE/vm-120-disk-2 761G 1.83T 633G -

VMSTORE/vm-160-disk-0 14.9G 1.72T 1.38G -

VMSTORE/vm-165-disk-0 29.7G 1.71T 23.5G -

VMSTORE/vm-170-disk-0 29.7G 1.73T 2.65G -

VMSTORE/vm-181-disk-0 11.9G 1.72T 694M -

VMSTORE/vm-190-disk-0 5.95G 1.71T 341M -

root@pve:~#As you say, there really is a big difference between actual VM disc space alocated for that VM disc (i mean vm-120-*) and what zfs reports / manage:

Defined size of vm-120-disk-1 is 1750 Gb, zfs handles it like 2120 Gb.

But ok, i can live that. As i say, system works quite well so that's it for now! Mission acomplished
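The gap mentioned above for vm-120-disk-1 can be quantified with a little unit conversion. This is only a sketch: it assumes the 1750 figure is in GiB and uses the 2.12T REFER value from the listing; the helper names `to_gib` and `overhead_pct` are made up for this example.

```shell
#!/bin/sh
# Convert a zfs-style size (e.g. 2.12T, 761G, 694M) to GiB.
to_gib() {
    awk -v v="$1" 'BEGIN {
        n = v + 0                      # numeric prefix, e.g. 2.12
        u = substr(v, length(v), 1)    # unit suffix, e.g. T
        if (u == "T") n *= 1024
        else if (u == "M") n /= 1024
        printf "%.1f\n", n
    }'
}

# Percentage by which the reported size exceeds the configured size (in GiB).
overhead_pct() {
    reported_gib="$(to_gib "$1")"
    configured_gib="$2"
    awk -v r="$reported_gib" -v c="$configured_gib" \
        'BEGIN { printf "%.0f\n", (r / c - 1) * 100 }'
}

to_gib 2.12T              # REFER of vm-120-disk-1 from the listing
overhead_pct 2.12T 1750   # how much more than the configured 1750
```

Under those assumptions the disk references about 2170.9 GiB, roughly 24% more than its configured size. On a live system, `zfs list -Hp` prints exact byte values, which avoids the rounding in the human-readable columns.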

Great that it works!

GazdaJezda said: "If I understand you correctly, every VM disk is in fact a ZFS dataset?"

Yes.

By the way, you can also list all content of a certain type on your Proxmox VE storage as follows:

Code:

pvesm list VMSTORE --content images